Foundation Models Need a Foundation Tool

LLMs don’t—and can’t—do everything. What they do is very impressive—and useful. It’s broad. And in many ways it’s human-like. But it’s not precise. And in the end it’s not about deep computation.

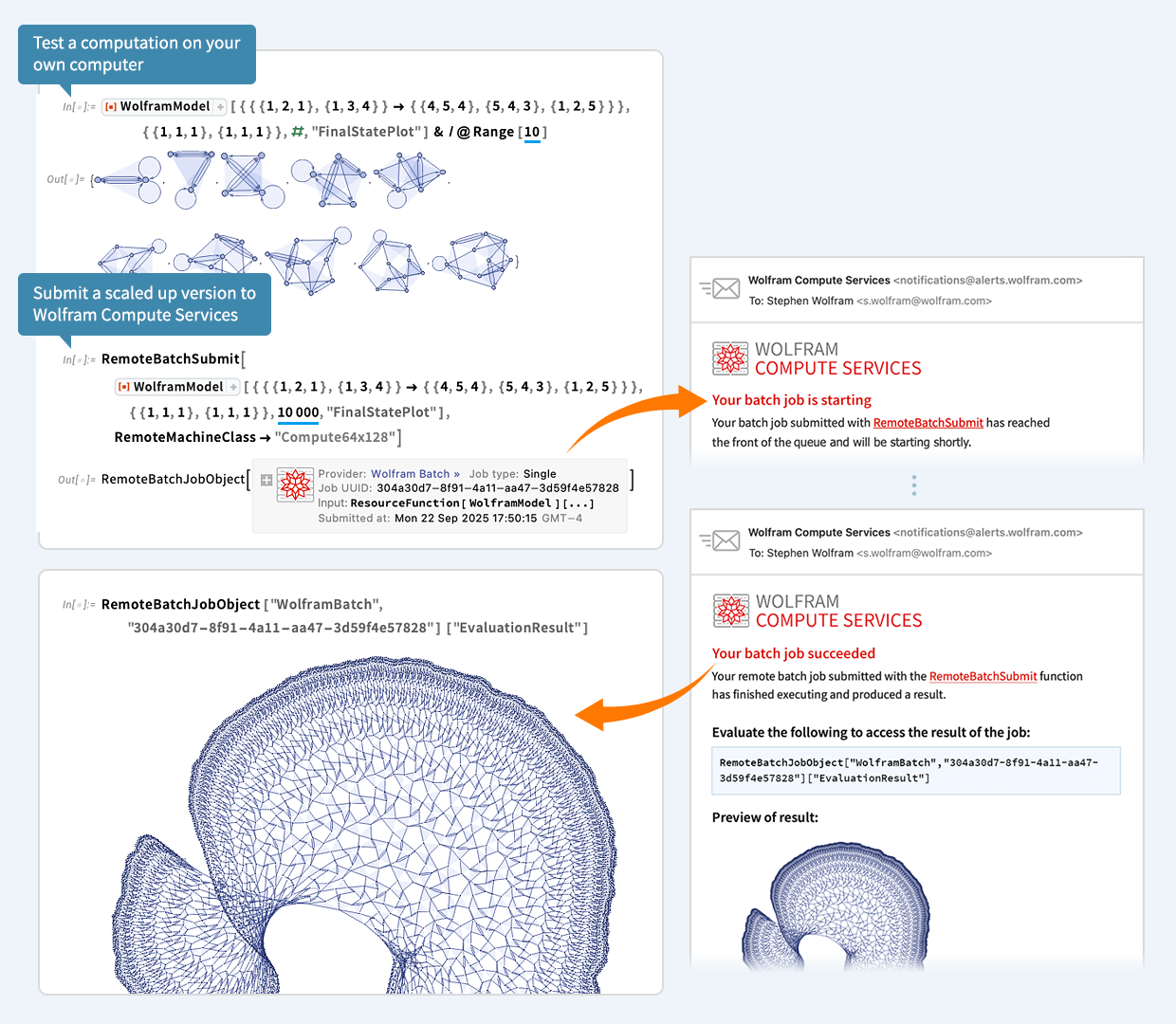

So how can we supplement LLM foundation models? We need a foundation tool: a tool that’s broad and general and does what LLMs themselves don’t: provides deep computation and precise knowledge.

And, conveniently enough, that’s exactly what I’ve been building for the past 40 years! My goal with Wolfram Language has always been to make everything we can about the world computable. To bring together in a coherent and unified way the algorithms, the methods and the data to do precise computation whenever it’s possible. It’s been a huge undertaking, but I think it’s fair to say it’s been a hugely successful one, one that has fueled countless discoveries and inventions (including my own) across a remarkable range of areas of science, technology and beyond.