The Most-Used Mathematical Algorithm Idea in History

An octillion. A billion billion billion. That’s a fairly conservative estimate of the number of times a cellphone or other device somewhere in the world has generated a bit using a maximum-length linear-feedback shift register sequence. It’s probably the single most-used mathematical algorithm idea in history. And the main originator of this idea was Solomon Golomb, who died on May 1—and whom I knew for 35 years.

Solomon Golomb’s classic book Shift Register Sequences, published in 1967—based on his work in the 1950s—went out of print long ago. But its content lives on in pretty much every modern communications system. Read the specifications for 3G, LTE, Wi-Fi, Bluetooth, or for that matter GPS, and you’ll find mentions of polynomials that determine the shift register sequences these systems use to encode the data they send. Solomon Golomb is the person who figured out how to construct all these polynomials.

He also was in charge when radar was first used to find the distance to Venus, and of working out how to encode images to be sent from Mars. He introduced the world to what he called polyominoes, which later inspired Tetris (“tetromino tennis”). He created and solved countless math and wordplay puzzles. And—as I learned about 20 years ago—he came very close to discovering my all-time-favorite rule 30 cellular automaton all the way back in 1959, the year I was born.

How I Met Sol Golomb

Most of the scientists and mathematicians I know I met first through professional connections. But not Sol Golomb. It was 1981, and I was at Caltech, a 21-year-old physicist who’d just received some media attention from being the youngest in the first batch of MacArthur award recipients. I get a knock at my office door—and a young woman is there. Already this was unusual, because in those days there were hopelessly few women to be found around a theoretical high-energy physics group. I was a sheltered Englishman who’d been in California a couple of years, but hadn’t really ventured outside the university—and was ill prepared for the burst of Southern Californian energy that dropped in to see me that day. She introduced herself as Astrid, and said that she’d been visiting Oxford and knew someone I’d been at kindergarten with. She explained that she had a personal mandate to collect interesting acquaintances around the Pasadena area. I think she considered me a difficult case, but persisted nevertheless. And one day when I tried to explain something about the work I was doing she said, “You should meet my father. He’s a bit old, but he’s still as sharp as a tack.” And so it was that Astrid Golomb, oldest daughter of Sol Golomb, introduced me to Sol Golomb.

The Golombs lived in a house perched in the hills near Pasadena. I learned that they had two daughters—Astrid, a little older than me, an aspiring Hollywood person, and Beatrice, about my age, a high-powered science type. The Golomb sisters often had parties, usually at their family’s house. There were themes, like the flamingoes & hedgehogs croquet garden party (“recognition will be given to the person who appears most appropriately attired”), or the Stonehenge party with instructions written using runes. The parties had an interesting cross-section of young and not-so-young people, including various local luminaries. And always there, hanging back a little, was Sol Golomb, a small man with a large beard and a certain elf-like quality to him, typically wearing a dark suit coat.

I gradually learned a little about Sol Golomb. That he was involved in “information theory”. That he worked at USC (the University of Southern California). That he had various unspecified but apparently high-level government and other connections. I’d heard of shift registers, but didn’t really know anything much about them.

Then in the fall of 1982, I visited Bell Labs in New Jersey and gave a talk about my latest results on cellular automata. One topic I discussed was what I called “additive” or “linear” cellular automata—and their behavior with limited numbers of cells. Whenever a cellular automaton has a limited number of cells, it’s inevitable that its behavior will eventually repeat. But as the size increases, the maximum repetition period—say for the rule 90 additive cellular automaton—bounces around seemingly quite randomly: 1, 1, 3, 2, 7, 1, 7, 6, 31, 4, 63, …. A few days before my talk, however, I’d noticed that these periods actually seemed to follow a formula that depended on things like the prime factorization of the number of cells. But when I mentioned this during the talk, someone at the back put up their hand and asked, “Do you know if it works for the case n=37?” My experiments hadn’t gotten as far as the size-37 case yet, so I didn’t know. But why would someone ask that?

The person who asked turned out to be a certain Andrew Odlyzko, a number theorist at Bell Labs. I asked him, “What on earth makes you think there might be something special about n=37?” “Well,” he said, “I think what you’re doing is related to the theory of linear-feedback shift registers,” and he suggested that I look at Sol Golomb’s book (“Oh yes,” I said, “I know his daughters…”). Andrew was indeed correct: there is a very elegant theory of additive cellular automata based on polynomials that is similar to the theory Sol developed for linear-feedback shift registers. Andrew and I ended up writing a now-rather-well-cited paper about it (it’s interesting because it’s a rare case where traditional mathematical methods let one say things about nontrivial cellular automaton behavior). And for me, a side effect was that I learned something about what the somewhat mysterious Sol Golomb actually did. (Remember, this was before the web, so one couldn’t just instantly look everything up.)

The Story of Sol Golomb

Solomon Golomb was born in Baltimore, Maryland in 1932. His family came from Lithuania. His grandfather had been a rabbi; his father moved to the US when he was young, and got a master’s degree in math before switching to medieval Jewish philosophy and also becoming a rabbi. Sol’s mother came from a prominent Russian family that had made boots for the Tsar’s army and then ran a bank. Sol did well in school, notably being a force in the local debating scene. Encouraged by his father, he developed an interest in mathematics, publishing a problem he invented about primes when he was 17. After high school, Sol enrolled at Johns Hopkins University to study math, narrowly avoiding a quota on Jewish students by promising he wouldn’t switch to medicine—and took twice the usual course load, graduating in 1951 after half the usual time.

From there he would go to Harvard for graduate school in math. But first he took a summer job at the Glenn L. Martin Company, an aerospace firm founded in 1912 that had moved to Baltimore from Los Angeles in the 1920s and mostly become a defense contractor—and that would eventually merge into Lockheed Martin. At Harvard, Sol specialized in number theory, and in particular in questions about characterizations of sets of prime numbers. But every summer he would return to the Martin Company. As he later described it, he found that at Harvard “the question of whether anything that was taught or studied in the mathematics department had any practical applications could not even be asked, let alone discussed”. But at the Martin Company, he discovered that the pure mathematics he knew—even about primes and things—did indeed have practical applications, and very interesting ones, especially to shift registers.

The first summer he was at the Martin Company, Sol was assigned to a control theory group. But by his second summer, he’d been put in a group studying communications. And in June 1954 it so happened that his supervisor had just gone to a conference where he’d heard about strange behavior observed in linear-feedback shift registers (he called them “tapped delay lines with feedback”)—and he asked Sol if he could investigate. It didn’t take Sol long to realize that what was going on could be very elegantly studied using the pure mathematics he knew about polynomials over finite fields. Over the year that followed, he split his time between graduate school at Harvard and consulting for the Martin Company, and in June 1955 he wrote his final report, “Sequences with Randomness Properties”—which would basically become the foundational document of the theory of shift register sequences.

Sol liked math puzzles, and in the process of thinking about a puzzle involving arranging dominoes on a checkerboard, he ended up inventing what he called “polyominoes”. He gave a talk about them in November 1953 at the Harvard Mathematics Club, published a paper about them (his first research publication), won a Harvard math prize for his work on them, and, as he later said, then “found [himself] irrevocably committed to their care and feeding” for the rest of his life.

In June 1955, Sol went to spend a year at the University of Oslo on a Fulbright Fellowship—partly so he could work with some distinguished number theorists there, and partly so he could add Norwegian, Swedish and Danish (and some runic scripts) to his collection of language skills. While he was there, he finished a long paper on prime numbers, but also spent time traveling around Scandinavia, and in Denmark met a young woman named Bo (Bodil Rygaard)—who came from a large family in a rural area mostly known for its peat moss, but had managed to get into university and was studying philosophy. Sol and Bo apparently hit it off, and within months, they were married.

When they returned to the US in July 1956, Sol interviewed in a few places, then accepted a job at JPL—the Jet Propulsion Lab that had spun off from Caltech, initially to do military work. Sol was assigned to the Communications Research Group, as a Senior Research Engineer. It was a time when the people at JPL were eager to try launching a satellite. At first, the government wouldn’t let them do it, fearing it would be viewed as a military act. But that all changed in October 1957 when the Soviet Union launched Sputnik, ostensibly as part of the International Geophysical Year. Amazingly, it took only 3 months for the US to launch Explorer 1. JPL built much of it, and Sol’s lab (where he had technicians building electronic implementations of shift registers) was diverted into doing things like making radiation detectors (including, as it happens, the ones that discovered the Van Allen radiation belts)—while Sol himself worked on using radar to determine the orbit of the satellite when it was launched, taking a little time out to go back to Harvard for his final PhD exam.

It was a time of great energy around JPL and the space program. In May 1958 a new Information Processing Group was formed, and Sol was put in charge—and in the same month, Sol’s first child, the aforementioned Astrid, was born. Sol continued his research on shift register sequences—particularly as applied to jamming-resistant radio control of missiles. In May 1959, Sol’s second child arrived—and was named Beatrice, forming a nice A, B sequence. In the fall of 1959, Sol took a sabbatical at MIT, where he got to know Claude Shannon and a number of other MIT luminaries, and got involved in information theory and the theory of algebraic codes.

As it happens, he’d already done some work on coding theory—in the area of biology. The digital nature of DNA had been discovered by Jim Watson and Francis Crick in 1953, but it wasn’t yet clear just how sequences of the four possible base pairs encoded the 20 amino acids. In 1956, Max Delbrück—Jim Watson’s former postdoc advisor at Caltech—asked around at JPL if anyone could figure it out. Sol and two colleagues analyzed an idea of Francis Crick’s and came up with “comma-free codes” in which overlapping triples of base pairs could encode amino acids. The analysis showed that exactly 20 amino acids could be encoded this way. It seemed like an amazing explanation of what was seen—but unfortunately it isn’t how biology actually works (biology uses a more straightforward encoding, where some of the 64 possible triples just don’t represent anything).

In addition to biology, Sol was also pulled into physics. His shift register sequences were useful for doing range finding with radar (much as they’re used now in GPS), and at Sol’s suggestion, he was put in charge of trying to use them to find the distance to Venus. And so it was that in early 1961—when the Sun, Venus, and Earth were in alignment—Sol’s team used the 85-foot Goldstone radio dish in the Mojave Desert to bounce a radar signal off Venus, and dramatically improve our knowledge of the Earth-Venus and Earth-Sun distances.

With his interest in languages, coding and space, it was inevitable that Sol would get involved in the question of communications with extraterrestrials. In 1961 he wrote a paper for the Air Force entitled “A Short Primer for Extraterrestrial Linguistics”, and over the next several years wrote several papers on the subject for broader audiences. He said that “There are two questions involved in communication with Extraterrestrials. One is the mechanical issue of discovering a mutually acceptable channel. The other is the more philosophical problem (semantic, ethic, and metaphysical) of the proper subject matter for discourse. In simpler terms, we first require a common language, and then we must think of something clever to say.” He continued, with a touch of his characteristic humor: “Naturally, we must not risk telling too much until we know whether the Extraterrestrials’ intentions toward us are honorable. The Government will undoubtedly set up a Cosmic Intelligence Agency (CIA) to monitor Extraterrestrial Intelligence. Extreme security precautions will be strictly observed. As H. G. Wells once pointed out [or was it an episode of The Twilight Zone?], even if the Aliens tell us in all truthfulness that their only intention is ‘to serve mankind,’ we must endeavor to ascertain whether they wish to serve us baked or fried.”

While at JPL, Sol had also been teaching some classes at the nearby universities: Caltech, USC and UCLA. In the fall of 1962, following some changes at JPL—and perhaps because he wanted to spend more time with his young children—he decided to become a full-time professor. He got offers from all three schools. He wanted to go somewhere where he could “make a difference”. He was told that at Caltech “no one has any influence if they don’t at least have a Nobel Prize”, while at UCLA “the UC bureaucracy is such that no one ever has any ability to affect anything”. The result was that—despite its much-inferior reputation at the time—Sol chose USC. He went there in the spring of 1963 as a Professor of Electrical Engineering—and ended up staying for 53 years.

Shift Registers

Before going on with the story of Sol’s life, I should explain what a linear-feedback shift register (LFSR) actually is. The basic idea is simple. Imagine a row of squares, each containing either 1 or 0 (say, black or white). In a pure shift register all that happens is that at each step all values shift one position to the left. The leftmost value is lost, and a new value is “shifted in” from the right. The idea of a feedback shift register is that the value that’s shifted in is determined (or “fed back”) from values at other positions in the shift register. In a linear-feedback shift register, the values from “taps” at particular positions in the register are combined by being added mod 2 (so that 1⊕1=0 instead of 2), or equivalently XOR’ed (“exclusive or”, true if either is true, but not both).

If one runs this for a while, here’s what happens:

Obviously the shift register is always shifting bits to the left. And it has a very simple rule for how bits should be added at the right. But if one looks at the sequence of these bits, it seems rather random—though, as the picture shows, it does eventually repeat. What Sol Golomb did was to find an elegant mathematical way to analyze such sequences, and how they repeat.

If a shift register has size n, then it has 2n possible states altogether (corresponding to all possible sequences of 0s and 1s of length n). Since the rules for the shift register are deterministic, any given state must always go to the same next state. And that means the maximum possible number of steps the shift register could conceivably go through before it repeats is 2n (actually, it’s 2n–1, because the state with all 0s can’t evolve into anything else).

In the example above, the shift register is of size 7, and it turns out to repeat after exactly 27–1=127 steps. But which shift registers—with which particular arrangements of taps—will produce sequences with maximal lengths? This is the first question Sol Golomb set out to investigate in the summer of 1954. His answer was simple and elegant.

The shift register above has taps at positions 7, 6 and 1. Sol represented this algebraically, using the polynomial x7+x6+1. Then what he showed was that the sequence that would be generated would be of maximal length if this polynomial is “irreducible modulo 2”, so that it can’t be factored, making it sort of the analog of a prime among polynomials—as well as having some other properties that make it a so-called “primitive polynomial”. Nowadays, with Mathematica and the Wolfram Language, it’s easy to test things like this:

![Irreducible polynomial in the Wolfram Language: IrreduciblePolynomialQ[x^7 + x^6 + 1, Modulus ->2] Irreducible polynomial in the Wolfram Language: IrreduciblePolynomialQ[x^7 + x^6 + 1, Modulus ->2]](https://content.wolfram.com/sites/43/2016/05/4-irreducible.png)

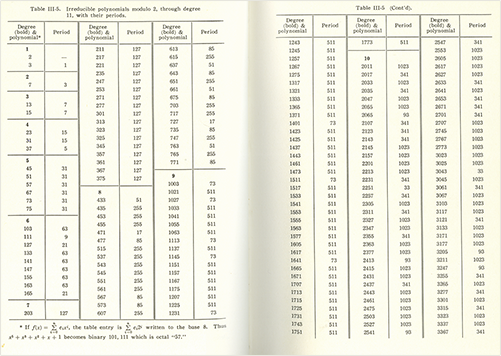

Back in 1954, Sol had to do all this by hand, but came up with a fairly long table of primitive polynomials corresponding to shift registers that give maximal length sequences:

The Prehistory of Shift Registers

The idea of maintaining short-term memory by having “delay lines” that circulate digital pulses (say in an actual column of mercury) goes back to the earliest days of electronic computers. By the late 1940s such delay lines were routinely being implemented purely digitally, using sequences of vacuum tubes, and were being called “shift registers”. It’s not clear when the first feedback shift registers were built. Perhaps it was at the end of the 1940s. But it’s still shrouded in mystery—because the first place they seem to have been used was in military cryptography.

The basic idea of cryptography is to take meaningful messages, and then randomize them so they can’t be recognized, but in such a way that the randomization can always be reversed if you know the key that was used to create it. So-called stream ciphers work by generating long sequences of seemingly random bits, then combining these with some representation of the message—then decoding by having the receiver independently generate the same sequence of seemingly random bits, and “backing this out” of the encoded message received.

Linear-feedback shift registers seem at first to have been prized for cryptography because of their long repetition periods. As it turns out, the mathematical analysis Sol used to find things like these periods also makes clear that such shift registers aren’t good for secure cryptography. But in the early days, they seemed pretty good—particularly compared to, say, successive rotor positions in an Enigma machine—and there’s been a persistent rumor that, for example, Soviet military cryptosystems were long based on them.

Back in 2001, when I was working on history notes for my book A New Kind of Science, I had a long phone conversation with Sol about shift registers. Sol told me that when he started out, he didn’t know anything about cryptographic work on shift registers. He said that people at Bell Labs, Lincoln Labs and JPL had also started working on shift registers around the same time he did—though perhaps through knowing more pure mathematics, he managed to get further than they did, and in the end his 1955 report basically defined the field.

Over the years that followed, Sol gradually heard about various precursors of his work in the pure mathematical literature. Way back in the year 1202 Fibonacci was already talking about what are now called Fibonacci numbers—and which are generated by a recurrence relation that can be thought of as an analog of a linear-feedback shift register, but working with arbitrary integers rather than 0s and 1s. There was a little work on recurrences with 0s and 1s done in the early 1900s, but the first large-scale study seems to have been by Øystein Ore, who, curiously, came from the University of Oslo, though was by then at Yale. Ore had a student named Marshall Hall—who Sol told me he knew had consulted for the predecessor of the National Security Agency in the late 1940s—possibly about shift registers. But whatever he may have done was kept secret, and so it fell to Sol to discover and publish the story of linear-feedback shift registers—even though Sol did dedicate his 1967 book on shift registers to Marshall Hall.

What Are Shift Register Sequences Good For?

Over the years I’ve noticed the principle that systems defined by sufficiently simple rules always eventually end up having lots of applications. Shift registers follow this principle in spades. And for example modern hardware (and software) systems are bristling with shift registers: a typical cellphone probably has a dozen or two, implemented usually in hardware but sometimes in software. (When I say “shift register” here, I mean linear-feedback shift register, or LFSR.)

Most of the time, the shift registers that are used are ones that give maximum-length sequences (otherwise known as “m-sequences”). And the reasons they’re used are typically related to some very special properties that Sol discovered about them. One basic property they always have is that they contain the same total number of 0s and 1s (actually, there’s always exactly one extra 1). Sol then showed that they also have the same number of 00s, 01s, 10s and 11s—and the same holds for larger blocks too. This “balance” property is on its own already very useful, for example if one’s trying to efficiently test all possible bit patterns as input to a circuit.

But Sol discovered another, even more important property. Replace each 0 in a sequence by –1, then imagine multiplying each element in a shifted version of the sequence by the corresponding element in the original. What Sol showed is that if one adds up these products, they’ll always sum to zero, except when there’s no shift at all. Said more technically, he showed that the sequence has no correlation with shifted versions of itself.

Both this and the balance property will be approximately true for any sufficiently long random sequence of 0s and 1s. But the surprising thing about maximum-length shift register sequences is that these properties are always exactly true. The sequences in a sense have some of the signatures of randomness—but in a very perfect way, made possible by the fact that they’re not random at all, but instead have a very definite, organized structure.

It’s this structure that makes linear-feedback shift registers ultimately not suitable for strong cryptography. But they’re great for basic “scrambling” and “cheap cryptography”—and they’re used all over the place for these purposes. A very common objective is just to “whiten” (as in “white noise”) a signal. It’s pretty common to want to transmit data that’s got long sequences of 0s in it. But the electronics that pick these up can get confused if they see what amounts to “silence” for too long. One can avoid the problem by scrambling the original data by combining it with a shift register sequence, so there’s always some kind of “chattering” going on. And that’s indeed what’s done in Wi-Fi, Bluetooth, USB, digital TV, Ethernet and lots of other places.

It’s often a nice side effect that the shift register scrambling makes the signal harder to decode—and this is sometimes used to provide at least some level of security. (DVDs use a combination of a size-16 and a size-24 shift register to attempt to encode their data; many GSM phones use a combination of three shift registers to encode all their signals, in a way that was at first secret.)

GPS makes crucial use of shift register sequences too. Each GPS satellite continuously transmits a shift register sequence (from a size-10 shift register, as it happens). A receiver can tell at exactly what time a signal it’s just received was transmitted from a particular satellite by seeing what part of the sequence it got. And by comparing delay times from different satellites, the receiver can triangulate its position. (There’s also a precision mode of GPS, that uses a size-1024 shift register.)

A quite different use of shift registers is for error detection. Say one’s transmitting a block of bits, but each one has a small probability of error. A simple way to let one check for a single error is to include a “parity bit” that says whether there should be an odd or even number of 1s in the block of bits. There are generalizations of this called CRCs (cyclic redundancy checks) that can check for a larger number of errors—and that are computed essentially by feeding one’s data into none other than a linear-feedback shift register. (There are also error-correcting codes that let one not only detect but also correct a certain number of errors, and some of these, too, can be computed with shift register sequences—and in fact Sol Golomb used a version of these called Reed–Solomon codes to design the video encoding for Mars spacecraft.)

The list of uses for shift register sequences goes on and on. A fairly exotic example—more popular in the past than now—was to use shift register sequences to jitter the clock in a computer to spread out the frequency at which the CPU would potentially generate radio interference (“select Enable Spread Spectrum in the BIOS”).

One of the single most prominent uses of shift register sequences is in cellphones, for what’s called CDMA (code division multiple access). Cellphones got their name because they operate in “cells”, with all phones in a given cell being connected to a particular tower. But how do different cellphones in a cell not interfere with each other? In the first systems, each phone just negotiated with the tower to use a slightly different frequency. Later, they used different time slices (TDMA, or time division multiple access). But CDMA uses maximum-length shift register sequences to provide a clever alternative.

The idea is to have all phones essentially operate on the same frequency, but to have each phone encode its signal using (in the simplest case) a differently shifted version of a shift register sequence. And because of Sol’s mathematical results, these differently shifted versions have no correlation—so the cellphone signals don’t interfere. And this is how, for example, most 3G cellphone networks operate.

Sol created the mathematics for this, but he also brought some of the key people together. Back in 1959, he’d gotten to know a certain Irwin Jacobs, who’d recently gotten a PhD at MIT. Meanwhile, he knew Andy Viterbi, who worked at JPL. Sol introduced the two of them—and by 1968 they’d formed a company called Linkabit which did work on coding systems, mostly for the military.

Linkabit had many spinoffs and descendents, and in 1985 Jacobs and Viterbi started a new company called Qualcomm. It didn’t immediately do especially well, but by the early 1990s it began a meteoric rise when it started making the components to deploy CDMA in cellphones—and in 1999 Sol became the “Viterbi Professor of Communications” at USC.

Where Are There Shift Registers?

It’s sort of amazing that—although most people have never heard of them—shift register sequences are actually used in one way or another almost whenever bits are moved around in modern communication systems, computers and elsewhere. It’s quite confusing sometimes, because there are lots of things with different names and acronyms that all turn out to be linear-feedback shift register sequences (PN, pseudonoise, M-, FSR, LFSR sequences, spread spectrum communications, MLS, SRS, PRBS, …).

If one looks at cellphones, shift register sequence usage has gone up and down over the years. 2G networks are based on TDMA, so don’t use shift register sequences to encode their data—but still often use CRCs to validate blocks of data. 3G networks are big users of CDMA—so there are shift register sequences involved in pretty much every bit that’s transmitted. 4G networks typically use a combination of time and frequency slots which don’t directly involve shift register sequences—though there are still CRCs used, for example to deal with data integrity when frequency windows overlap. 5G is designed to be more elaborate—with large arrays of antennas dynamically adapting to use optimal time and frequency slots. But half their channels are typically allocated to “pilot signals” that are used to infer the local radio environment—and work by transmitting none other than shift register sequences.

Throughout most kinds of electronics it’s common to want to use the highest data rates and the lowest powers that still get bits transmitted correctly above the “noise floor”. And typically the way one pushes to the edge is to do automatic error detection—using CRCs and therefore shift register sequences. And in fact pretty much every kind of bus (PCIe, SATA, etc.) inside a computer does this: whether it’s connecting parts of CPUs, getting data off devices, or connecting to a display with HDMI. And on disks and in memory, for example, CRCs and other shift-register-sequence-based codes are pretty much universally used to operate at the highest possible rates and densities.

Shift registers are so ubiquitous, it’s a little difficult to estimate just how many of them are in use, and how many bits are being generated by them. There are perhaps 10 billion computers, slightly fewer cellphones, and an increasing number of billions of embedded and IoT (“Internet of Things”) devices. (Even many of the billion cars in the world, for example, have at least 10 microprocessors in them.)

At what rate are the shift registers running? Here, again, things are complicated. In communications systems, for example, there’s a basic carrier frequency—usually in the GHz range—and then there’s what’s called a “chipping rate” (or, confusingly, “chip rate”) that says how fast something like CDMA is done, and this is usually in the MHz range. On the other hand, in buses inside computers, or in connections to a display, all the data is going through shift registers, at the full data rate, which is well into the GHz range.

So it seems safe to estimate that there are at least 10 billion communications links, running for at least 1/10 billion seconds (which is 3 years), that use at least 1 billion bits from a shift register every second—meaning that to date Sol’s algorithm has been used at least an octillion times.

Is it really the most-used mathematical algorithm idea in history? I think so. I suspect the main potential competition would be from arithmetic operations. These days processors are doing perhaps a trillion arithmetic operations per second—and such operations are needed for pretty much every bit that’s generated by a computer. But how is arithmetic done? At some level it’s just a digital electronics implementation of the way people have done arithmetic forever.

But there are some wrinkles—some “algorithmic ideas”—though they’re quite obscure, except to microprocessor designers. Just as when Babbage was making his Difference Engine, carries are a big nuisance in doing arithmetic. (One can actually think of a linear-feedback shift register as being a system that does something like arithmetic, but doesn’t do carries.) There are “carry propagation trees” that optimize carrying. There are also little tricks (“Booth encoding”, “Wallace trees”, etc.) that reduce the number of bit operations needed to do the innards of arithmetic. But unlike with LFSRs, there doesn’t seem to be one algorithmic idea that’s universally used—and so I think it’s still likely that Sol’s maximum-length LFSR sequence idea is the winner for most used.

Cellular Automata and Nonlinear Shift Registers

Even though it’s not obvious at first, it turns out there’s a very close relationship between feedback shift registers and something I’ve spent many years studying: cellular automata. The basic setup for a feedback shift register involves computing one bit at a time. In a cellular automaton, one has a line of cells, and at each step all the cells are updated in parallel, based on a rule that depends, say, on the values of their nearest neighbors.

To see how these are related, think about running a feedback shift register of size n, but displaying its state only every n steps—in other words, letting all the bits be rewritten before one displays again. If one displays every step of a linear-feedback shift register (here with two taps next to each other), as in the first two panels below, nothing much happens at each step, except that things shift to the left. But if one makes a compressed picture, showing only every n steps, suddenly a pattern emerges.

It’s a nested pattern, and it’s very close to being the exact same pattern that one gets with a cellular automaton that takes a cell and its neighbor, and adds them mod 2 (or XORs them). Here’s what happens with that cellular automaton, if one arranges its cells so they’re in a circle of the same size as the shift register above:

At the beginning, the cellular automaton and shift register patterns are exactly the same—though when they “hit the edge” they become slightly different because the edges are handled differently. But looking at these pictures it becomes less surprising that the math of shift registers should be relevant to cellular automata. And seeing the regularity of the nested patterns makes it clearer why there might be an elegant mathematical theory of shift registers in the first place.

Typical shift registers used in practice don’t tend to make such obviously regular patterns, though. Here are a few examples of shift registers that yield maximum-length sequences. When one’s doing math, like Sol did, it’s very much the same story as for the case of obvious nesting. But here the fact that the taps are far apart makes things get mixed up, leaving no obvious visual trace of nesting.

So how broad is the correspondence between shift registers and cellular automata? In cellular automata the rules for generating new values of cells can be anything one wants. In linear-feedback shift registers, however, they always have to be based on adding mod 2 (or XOR’ing). But that’s what the “linear” part of “linear-feedback shift register” means. And it’s also in principle possible to have nonlinear-feedback shift registers (NFSRs) that use whatever rule one wants for combining values.

And in fact, once Sol had worked out his theory for linear-feedback shift registers, he started in on the nonlinear case. When he arrived at JPL in 1956 he got an actual lab, complete with racks of little electronic modules. Sol told me each module was about the size of a cigarette pack—and was built from a Bell Labs design to perform a particular logic operation (AND, OR, NOT, …). The modules could be strung together to implement whatever nonlinear-feedback shift register one wanted, and they ran pretty fast—producing about a million bits per second. (Sol told me that someone tried doing the same thing with a general-purpose computer—and what took 1 second with the custom hardware modules took 6 weeks on the general-purpose computer.)

When Sol had looked at linear-feedback shift registers, the first big thing he’d managed to understand was their repetition periods. And with nonlinear ones he put most of his effort into trying to understand the same thing. He collected all sorts of experimental data. He told me he even tested sequences of length 245—which must have taken a year. He made summaries, like the one below (notice the visualizations of sequences, shown as oscilloscope-like traces). But he never managed to come up with any kind of general theory as he had with linear-feedback shift registers.

It’s not surprising he couldn’t do it. Because when one looks at nonlinear-feedback shift registers, one’s effectively sampling the whole richness of the computational universe of possible simple programs. Back in the 1950s there were already theoretical results—mostly based on Turing’s ideas of universal computation—about what programs could in principle do. But I don’t think Sol or anyone else ever thought they would apply to the very simple—if nonlinear—functions in NFSRs.

And in the end it basically took until my work around 1981 for it to become clear just how complicated the behavior of even very simple programs could be. My all-time favorite example is rule 30—a cellular automaton in which the values of neighboring cells are combined using a function that can be represented as p+q+r+qr mod 2 (or p XOR (q OR r)). And, amazingly, Sol looked at nonlinear-feedback shift registers that were based on incredibly similar functions—like, in 1959, p+r+s+qr+qs+rs mod 2. Here’s what Sol’s function (which can be thought of as “rule 29070”), rule 30, and a couple of other similar rules look like in a shift register:

And here’s what they look like as cellular automata, without being constrained to a fixed-size register:

Of course, Sol never made pictures like this (and it would, realistically, have been almost impossible to do so in the 1950s). Instead, he concentrated on a kind of aggregate feature: the overall repetition period.

Sol wondered whether nonlinear-feedback shift registers might make good sources of randomness. From what we now know about cellular automata, it’s clear they can. And for example the rule 30 cellular automaton is what we used to generate randomness for Mathematica for 25 years (though we recently retired it in favor of a more efficient rule that we found by searching trillions of possibilities).

Sol didn’t talk about cryptography much—though I suspect he did quite a bit of government work on it. He did tell me though that in 1959 he’d found a “multi-dimensional correlation attack on nonlinear sequences”, though he said that at the time he “carefully avoided stating that the application was to cryptanalysis”. The fact is that cellular automata like rule 30 (and presumably also nonlinear-feedback shift registers) do seem to be good cryptosystems—though partly because of confusions about whether they’re somehow equivalent to linear-feedback shift registers (they’re not), they’ve never been used as much as they should.

Being a history enthusiast, I’ve tried over the past few decades to identify all precursors to my work on 1D cellular automata. 2D cellular automata had been studied a bit, but there was only quite theoretical work on the 1D case, together with a few specific investigations in the cryptography community (that I’ve never fully found out about). And in the end, of all the things I’ve seen, I think Sol Golomb’s nonlinear-feedback shift registers were in a sense closest to what I actually ended up doing a quarter century later.

Polyominoes

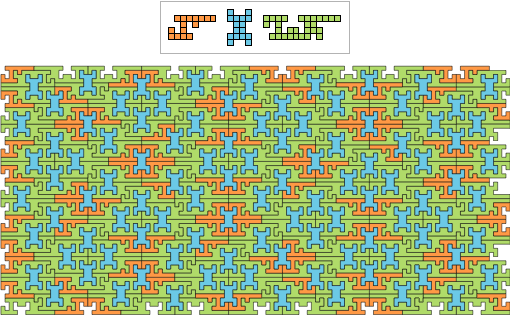

Mention the name “Golomb” and some people will think of shift registers. But many more will think of polyominoes. Sol didn’t invent polyominoes—though he did invent the name. But what he did was to make systematic what had appeared only in isolated puzzles before.

The main question Sol was interested in was how and when collections of polyominoes can be arranged to tile particular (finite or infinite) regions. Sometimes it’s fairly obvious, but often it’s very tricky to figure out. Sol published his first paper on polyominoes in 1954, but what really launched polyominoes into the public consciousness was Martin Gardner’s 1957 Mathematical Games column on them in Scientific American. As Sol explained in the introduction to his 1964 book, the effect was that he acquired “a steady stream of correspondents from around the world and from every stratum of society—board chairmen of leading universities, residents of obscure monasteries, inmates of prominent penitentiaries…”

Game companies took notice too, and within months, for example, the “New Sensational Jinx Jigsaw Puzzle” had appeared—followed over the course of decades by a long sequence of other polyomino-based puzzles and games (no, the sinister bald guy doesn’t look anything like Sol):

Sol was still publishing papers about polyominoes 50 years after he first discussed them. In 1961 he introduced general subdividable “rep-tiles”, which it later became clear can make nested, fractal (“infin-tile”), patterns. But almost everything Sol did with polyominoes involved solving specific tiling problems with them.

For me, polyominoes are most interesting not for their specifics but for the examples they provide of more-general phenomena. One might have thought that given a few simple shapes it would be easy to decide whether they can tile the whole plane. But the example of polyominoes—with all the games and puzzles they support—makes it clear that it’s not necessarily so easy. And in fact it was proved in the 1960s that in general it’s a theoretically undecidable problem.

If one’s only interested in a finite region, then in principle one can just enumerate all conceivable arrangements of the original shapes, and see whether any of them correspond to successful tilings. But if one’s interested in the whole, infinite plane then one can’t do this. Maybe one will find a tiling of size one million, but there’s no guarantee how far the tiling can be extended.

It turns out it can be like running a Turing machine—or a cellular automaton. You start from a line of tiles. Then the question of whether there’s an infinite tiling is equivalent to the question of whether there’s a setup for some Turing machine that makes it never halt. And the point then is that if the Turing machine is universal (so that it can in effect be programmed to do any possible computation) then the halting problem for it can be undecidable, which means that the tiling problem is also undecidable.

Of course, whether a tiling problem is undecidable depends on the original set of shapes. And for me an important question is how complicated the shapes have to be so that they can encode universal computation, and yield an undecidable tiling problem. Sol Golomb knew the literature on this kind of question, but wasn’t especially interested in it. But I start thinking about materials formed from polyominoes whose pattern of “crystallization” can in effect do an arbitrary computation, or occur at a “melting point” that seems “random” because its value is undecidable.

Complicated, carefully crafted sets of polyominoes are known that in effect support universal computation. But what’s the simplest set—and is it simple enough that one might run across by accident? My guess is that—just like with other kinds of systems I’ve studied in the computational universe—the simplest set is in fact simple. But finding it is very difficult.

A considerably easier problem is to find polyominoes that successfully tile the plane, but can’t do so periodically. Roger Penrose (of Penrose tiles fame) found an example in 1994. My book A New Kind of Science gave a slightly simpler example with 3 polyominoes:

The Rest of the Story

By the time Sol was in his early thirties, he’d established his two most notable pursuits—shift registers and polyominoes—and he’d settled into life as a university professor. He was constantly active, though. He wrote what ended up being a couple of hundred papers, some extending his earlier work, some stimulated by questions people would ask him, and some written, it seems, for the sheer pleasure of figuring out interesting things about numbers, sequences, cryptosystems, or whatever.

Shift registers and polyominoes are both big subjects (they even each have their own category in the AMS classification of mathematical publication topics). Both have had a certain injection of energy in the past decade or two as modern computer experiments started to be done on them—and Sol collaborated with people doing these. But both fields still have many unanswered questions. Even for linear-feedback shift registers there are bigger Hadamard matrices to be found. And very little is known even now about nonlinear-feedback shift registers. Not to mention all the issues about nonperiodic and otherwise exotic polyomino tilings.

Sol was always interested in puzzles, both with math and with words. For a while he wrote a puzzle column for the Los Angeles Times—and for 32 years he wrote “Golomb’s Gambits” for the Johns Hopkins alumni magazine. He participated in MegaIQ tests—earning himself a trip to the White House when he and its chief of staff happened to both score in the top five in the country.

He poured immense effort into his work at the university, not only teaching undergraduate courses and mentoring graduate students but also ascending the ranks of university administration (president of the faculty senate, vice provost for research, etc.)—and occasionally opining more generally about university governance (for example writing a paper entitled “Faculty Consulting: Should It Be Curtailed?”; answer: no, it’s good for the university!). At USC, he was particularly involved in recruiting—and over his time at USC he helped it ascend from a school essentially unknown in electrical engineering to one that makes it onto lists of top programs.

And then there was consulting. He was meticulous at not disclosing what he did for government agencies, though at one point he did lament that some newly published work had been anticipated by a classified paper he had written 40 years earlier. In the late 1960s—frustrated that everyone but him seemed to be selling polyomino games—Sol started a company called Recreational Technology, Inc. It didn’t go particularly well, but one side effect was that he got involved in business with Elwyn Berlekamp—a Berkeley professor and fellow enthusiast of coding theory and puzzles—whom he persuaded to start a company called Cyclotomics (in honor of cyclotomic polynomials of the form xn–1) which was eventually sold to Kodak for a respectable sum. (Berlekamp also created an algorithmic trading system that he sold to Jim Simons and that became a starting point for Renaissance Technologies, now one of the world’s largest hedge funds.)

More than 10,000 patents refer to Sol’s work, but Sol himself got only one patent: on a cryptosystem based on quasigroups—and I don’t think he ever did much to directly commercialize his work.

Sol was for many years involved with the Technion (Israel Institute of Technology) and quite devoted to Israel. He characterized himself as an “non-observant orthodox Jew”—but occasionally did things like teach a freshman seminar on the Book of Genesis, as well as working on decoding parts of the Dead Sea Scrolls.

Sol and his wife traveled extensively, but the center of Sol’s world was definitely Los Angeles—his office at USC, and the house in which he and his wife lived for nearly 60 years. He had a circle of friends and students who relied on him for many things. And he had his family. His daughter Astrid remained a local personality, even being portrayed in fiction a few times—as a student in a play about Richard Feynman (she sat as a drawing model for him many times), and as a character in a novel by a friend of mine. Beatrice became an MD/PhD who’s spent her career applying an almost mathematical level of precision to various kinds of medical reasoning and diagnosis (Gulf War illness, statin effects, hiccups, etc.)—even as she often quotes “Beatrice’s Law”, that “everything in biology is more complicated than you think, even taking into account Beatrice’s Law”. (I’m happy to have made at least one contribution to Beatrice’s life: introducing her to her husband, now of 26 years, Terry Sejnowski, one of the founders of modern computational neuroscience.)

In the years I knew Sol, there was always a quiet energy to him. He seemed to be involved in lots of things, even if he often wasn’t particularly forthcoming about the details. Occasionally I would talk to him about actual science and mathematics; usually he was more interested in telling stories (often very engaging ones) about personalities and organizations (“Can you believe that [in 1985] after not going to conferences for years, Claude Shannon just showed up unannounced at the bar at the annual information theory conference?”, “Do you know how much they had to pay the president of Caltech to get him to move to Saudi Arabia?”, etc.)

In retrospect, I wish I’d done more to get Sol interested in some of the math questions brought up by my own work. I don’t think I properly internalized the extent to which he liked cracking problems suggested by other people. And then there was the matter of computers. Despite all his contributions to the infrastructure of the computational world, Sol himself basically never seriously used computers. He took particular pride in his own mental calculation capabilities. And he didn’t really use email until he was in his seventies, and never used a computer at home—though, yes, he did have a cellphone. (A typical email from him was short. I had mentioned last year that I was researching Ada Lovelace; he responded: “The story of Ada Lovelace as Babbage’s programmer is so widespread that everyone seems to accept it as factual, but I’ve never seen original source material on this.”)

Sol’s daughters organized a party for his 80th birthday a few years ago, creating an invitation with characteristic mathematical features:

Sol had a few medical problems, though they didn’t seem to be slowing him down much. His wife’s health, though, was failing, and a few weeks ago her condition suddenly worsened. Sol still went to his office as usual on Friday, but on Saturday night, in his sleep, he died. His wife Bo died just two weeks later, two days before what would have been their 60th wedding anniversary.

Though Sol himself is gone, the work he did lives on—responsible for an octillion bits (and counting) across the digital world. Farewell, Sol. And on behalf of all of us, thanks for all those cleverly created bits.