Last week I gave a talk (and did a panel discussion) at a conference entitled “Ethics of Artificial Intelligence” held at the NYU Philosophy Department’s Center for Mind, Brain and Consciousness. Here’s the video and a transcript:

Thanks for inviting me here today.

You know, it’s funny to be here. My mother was a philosophy professor in Oxford. And when I was a kid I always said the one thing I’d never do was do or talk about philosophy. But, well, here I am.

Before I really get into AI, I think I should say a little bit about my worldview. I’ve basically spent my life alternating between doing basic science and building technology. I’ve been interested in AI for about as long as I can remember. But as a kid I started out doing physics and cosmology and things. That got me into building technology to automate stuff like math. And that worked so well that I started thinking about, like, how to really know and compute everything about everything. That was in about 1980—and at first I thought I had to build something like a brain, and I was studying neural nets and so on. But I didn’t get too far.

And meanwhile I got interested in an even bigger problem in science: how to make the most general possible theories of things. The dominant idea for 300 years had been to use math and equations. But I wanted to go beyond them. And the big thing I realized was that the way to do that was to think about programs, and the whole computational universe of possible programs.

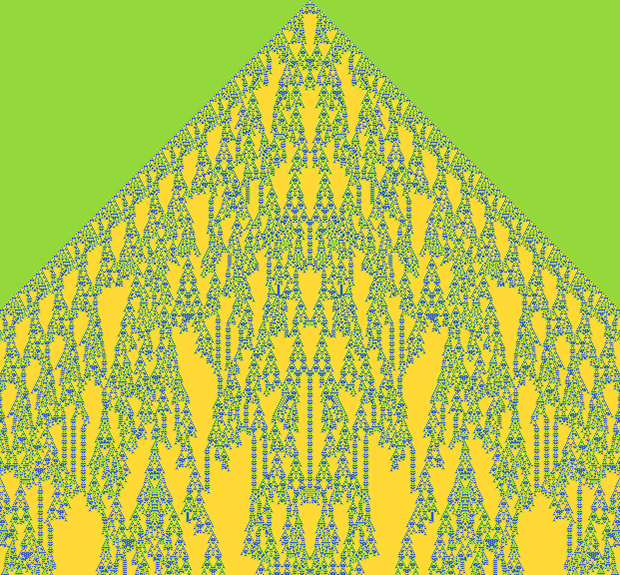

And that led to my personal Galileo-like moment. I just pointed my “computational telescope” at these simplest possible programs, and I saw this amazing one I called rule 30—that just seemed to go on producing complexity forever from essentially nothing.

Well, after I’d seen this, I realized this is actually something that happens all over the computational universe—and all over nature. It’s really the secret that lets nature make all the complicated stuff we see. But it’s something else too: it’s a window into what raw, unfettered computation is like. At least traditionally when we do engineering we’re always building things that are simple enough that we can foresee what they’ll do.

But if we just go out into the computational universe, things can be much wilder. Our company has done a lot of mining out there, finding programs that are useful for different purposes, like rule 30 is for randomness. And modern machine learning is kind of part way from traditional engineering to this kind of free-range mining.

But, OK, what can one say in general about the computational universe? Well, all these programs can be thought of as doing computations. And years ago I came up with what I call the Principle of Computational Equivalence—that says that if behavior isn’t obviously simple, it typically corresponds to a computation that’s maximally sophisticated. There are lots of predictions and implications of this. Like that universal computation should be ubiquitous. As should undecidability. And as should what I call computational irreducibility.

Can you predict what it’s going to do? Well, it’s probably computationally irreducible, which means you can’t figure out what it’s going to do without effectively tracing every step and going through the same computational effort it does. It’s completely deterministic. But to us it’s got what seems like free will—because we can never know what it’s going to do.

Here’s another thing: what’s intelligence? Well, our big unifying principle says that everything—from a tiny program, to our brains, is computationally equivalent. There’s no bright line between intelligence and mere computation. The weather really does have a mind of its own: it’s doing computations just as sophisticated as our brains. To us, though, it’s pretty alien computation. Because it’s not connected to our human goals and experiences. It’s just raw computation that happens to be going on.

So how do we tame computation? We have to mold it to our goals. And the first step there is to describe our goals. And for the past 30 years what I’ve basically been doing is creating a way to do that.

I’ve been building a language—that’s now called the Wolfram Language—that allows us to express what we want to do. It’s a computer language. But it’s not really like other computer languages. Because instead of telling a computer what to do in its terms, it builds in as much knowledge as possible about computation and the world, so that we humans can describe in our terms what we want, and then it’s up to the language to get it done as automatically as possible.

This basic idea has worked really well, and in the form of Mathematica it’s been used to make endless inventions and discoveries over the years. It’s also what’s inside Wolfram|Alpha. Where the idea is to take pure natural language questions, understand them, and use the kind of curated knowledge and algorithms of our civilization to answer them. And, yes, it’s a very classic AIish thing. And of course it’s computed answers to billions and billions of questions from humans, for example inside Siri.

I had an interesting experience recently, figuring out how to use what we’ve built to teach computational thinking to kids. I was writing exercises for a book. At the beginning, it was easy: “make a program to do X”. But later on, it was like “I know what to say in the Wolfram Language, but it’s really hard to express in English”. And of course that’s why I just spent 30 years building the Wolfram Language.

English has maybe 25,000 common words; the Wolfram Language has about 5000 carefully designed built-in constructs—including all the latest machine learning—together with millions of things based on curated data. And the idea is that once one can think about something in the world computationally, it should be as easy as possible to express it in the Wolfram Language. And the cool thing is, it really works. Humans, including kids, can read and write the language. And so can computers. It’s a kind of high-level bridge between human thinking, in its cultural context, and computation.

OK, so what about AI? Technology has always been about finding things that exist, and then taming them to automate the achievement of particular human goals. And in AI the things we’re taming exist in the computational universe. Now, there’s a lot of raw computation seething around out there—just as there’s a lot going on in nature. But what we’re interested in is computation that somehow relates to human goals.

So what about ethics? Well, maybe we want to constrain the computation, the AI, to only do things we consider ethical. But somehow we have to find a way to describe what we mean by that.

Well, in the human world, one way we do this is with laws. But so how do we connect laws to computations? We may call them “legal codes”, but today laws and contracts are basically written in natural language. There’ve been simple computable contracts in areas like financial derivatives. And now one’s talking about smart contracts around cryptocurrencies.

But what about the vast mass of law? Well, Leibniz—who died 300 years ago next month—was always talking about making a universal language to, as we would say now, express it all in a computable way. He was a few centuries too early, but I think now we’re finally in a position to do this.

I just posted a long blog about all this last week, but let me try to summarize. With the Wolfram Language we’ve managed to express a lot of kinds of things in the world—like the ones people ask Siri about. And I think we’re now within sight of what Leibniz wanted: to have a general symbolic discourse language that represents everything involved in human affairs.

I see it basically as a language design problem. Yes, we can use natural language to get clues, but ultimately we have to build our own symbolic language. It’s actually the same kind of thing I’ve done for decades in the Wolfram Language. Take even a word like “plus”. Well, in the Wolfram Language there’s a function called Plus, but it doesn’t mean the same thing as the word. It’s a very specific version, that has to do with adding things mathematically. And as we design a symbolic discourse language, it’s the same thing. The word “eat” in English can mean lots of things. But we need a concept—that we’ll probably refer to as “eat”—that’s a specific version, that we can compute with.

So let’s say we’ve got a contract written in natural language. One way to get a symbolic version is to use natural language understanding—just like we do for billions of Wolfram|Alpha inputs, asking humans about ambiguities. Another way might be to get machine learning to describe a picture. But the best way is just to write in symbolic form in the first place, and actually I’m guessing that’s what lawyers will be doing before too long.

And of course once you have a contract in symbolic form, you can start to compute about it, automatically seeing if it’s satisfied, simulating different outcomes, automatically aggregating it in bundles, and so on. Ultimately the contract has to get input from the real world. Maybe that input is “born digital”, like data about accessing a computer system, or transferring bitcoin. Often it’ll come from sensors and measurements—and it’ll take machine learning to turn into something symbolic.

Well, if we can express laws in computable form maybe we can start telling AIs how we want them to act. Of course it might be better if we could boil everything down to simple principles, like Asimov’s Laws of Robotics, or utilitarianism or something.

But I don’t think anything like that is going to work. What we’re ultimately trying to do is to find perfect constraints on computation, but computation is something that’s in some sense infinitely wild. The issue already shows up in Gödel’s Theorem. Like let’s say we’re looking at integers and we’re trying to set up axioms to constrain them to just work the way we think they do. Well, what Gödel showed is that no finite set of axioms can ever achieve this. With any set of axioms you choose, there won’t just be the ordinary integers; there’ll also be other wild things.

And the phenomenon of computational irreducibility implies a much more general version of this. Basically, given any set of laws or constraints, there’ll always be “unintended consequences”. This isn’t particularly surprising if one looks at the evolution of human law. But the point is that there’s theoretically no way around it. It’s ubiquitous in the computational universe.

Now I think it’s pretty clear that AI is going to get more and more important in the world—and is going to eventually control much of the infrastructure of human affairs, a bit like governments do now. And like with governments, perhaps the thing to do is to create an AI Constitution that defines what AIs should do.

What should the constitution be like? Well, it’s got to be based on a model of the world, and inevitably an imperfect one, and then it’s got to say what to do in lots of different circumstances. And ultimately what it’s got to do is provide a way of constraining the computations that happen to be ones that align with our goals. But what should those goals be? I don’t think there’s any ultimate right answer. In fact, one can enumerate goals just like one can enumerate programs out in the computational universe. And there’s no abstract way to choose between them.

But for us there’s a way to choose. Because we have particular biology, and we have a particular history of our culture and civilization. It’s taken us a lot of irreducible computation to get here. But now we’re just at some point in the computational universe, that corresponds to the goals that we have.

Human goals have clearly evolved through the course of history. And I suspect they’re about to evolve a lot more. I think it’s pretty inevitable that our consciousness will increasingly merge with technology. And eventually maybe our whole civilization will end up as something like a box of a trillion uploaded human souls.

But then the big question is: “what will they choose to do?”. Well, maybe we don’t even have the language yet to describe the answer. If we look back even to Leibniz’s time, we can see all sorts of modern concepts that hadn’t formed yet. And when we look inside a modern machine learning or theorem proving system, it’s humbling to see how many concepts it effectively forms—that we haven’t yet absorbed in our culture.

Maybe looked at from our current point of view, it’ll just seem like those disembodied virtual souls are playing videogames for the rest of eternity. At first maybe they’ll operate in a simulation of our actual universe. Then maybe they’ll start exploring the computational universe of all possible universes.

But at some level all they’ll be doing is computation—and the Principle of Computational Equivalence says it’s computation that’s fundamentally equivalent to all other computation. It’s a bit of a letdown. Our proud future ending up being computationally equivalent just to plain physics, or to little rule 30.

Of course, that’s just an extension of the long story of science showing us that we’re not fundamentally special. We can’t look for ultimate meaning in where we’ve reached. We can’t define an ultimate purpose. Or ultimate ethics. And in a sense we have to embrace the details of our existence and our history.

There won’t be a simple principle that encapsulates what we want in our AI Constitution. There’ll be lots of details that reflect the details of our existence and history. And the first step is just to understand how to represent those things. Which is what I think we can do with a symbolic discourse language.

And, yes, conveniently I happen to have just spent 30 years building the framework to create such a thing. And I’m keen to understand how we can really use it to create an AI Constitution.

So I’d better stop talking about philosophy, and try to answer some questions.

After the talk there was a lively Q&A (followed by a panel discussion), included on the video. Some questions were:

- When will AI reach human-level intelligence?

- What are the difficulties you foresee in developing a symbolic discourse language?

- Do we live in a deterministic universe?

- Is our present reality a simulation?

- Does free will exist, and how does consciousness arise from computation?

- Can we separate rules and principles in a way that is computable for AI?

- How can AI navigate contradictions in human ethical systems?