It All Started with Feynman Diagrams

Any serious calculation in particle physics takes a lot of algebra. Maybe it doesn’t need to. But with the methods based on Feynman diagrams that we know so far, it does. And in fact it was these kinds of calculations that first led me to use computers for symbolic computation. That was in 1976, which by now is a long time ago. But actually the idea of doing Feynman diagram calculations by computer is even older.

So far as I know it all started from a single conversation on the terrace outside the cafeteria of the CERN particle physics lab near Geneva in 1962. Three physicists were involved. And out of that conversation there emerged three early systems for doing algebraic computation. One was written in Fortran. One was written in LISP. And one was written in assembly language.

I’ve told this story quite a few times, often adding “And which of those physicists do you suppose later won a Nobel Prize?” “Of course,” I explain, “it was the one who wrote their system in assembly language!”

That physicist was Martinus (Tini) Veltman, who died a couple of weeks ago, and who I knew for several decades. His system was called SCHOONSCHIP, and he wrote the first version of it in IBM 7000 series assembly language. A few years later he rewrote it in CDC 6000 series assembly language.

The emphasis was always on speed. And for many years SCHOONSCHIP was the main workhorse system for doing very large-scale Feynman diagram calculations—which could take months of computer time.

Back in the early 1960s when SCHOONSCHIP was first written, Feynman diagrams—and the quantum field theory from which they came—were out of fashion. Feynman diagrams had been invented in the 1940s for doing calculations in quantum electrodynamics (the quantum theory of electrons and photons)—and that had gone well. But attention had turned to the strong interactions which hold nuclei together, and the weak interactions responsible for nuclear beta decay, and in neither case did Feynman diagrams seem terribly useful.

There was, however, a theory of the weak interactions that involved as-yet-unobserved “intermediate vector bosons” (that were precursors of what we now call W particles). And in 1961—as part of his PhD thesis—Tini Veltman took on the problem of computing how photons would interact with intermediate vector bosons. And for this he needed elaborate Feynman diagram calculations.

I’m not sure if Tini already knew how to program, or whether he learned it for the purpose of creating SCHOONSCHIP—though I do know that he’d been an electronics buff since childhood.

I think I was first exposed to SCHOONSCHIP in 1976, and I used it for a few calculations. In my archives now, I can find only a single example of running it: a sample calculation someone did for me, probably in 1978, in connection with something I was writing (though never published):

By modern standards it looks a bit obscure. But it’s a fairly typical “old-style line printer output”. There’s a version of the input at the top. Then some diagnostics in the middle. And then the result appears at the bottom. And the system reports that this took .12 seconds to generate.

This particular result is for a very simple Feynman diagram involving the interaction of a photon and an electron—and involves just 9 terms. But SCHOONSCHIP could handle results involving millions of terms too (which allowed computations in QED to be done to 8-digit precision).

1979

Within days after finishing my PhD in theoretical physics at Caltech in November 1979, I flew to Geneva, Switzerland, to visit CERN for a couple of weeks. And it was during that visit that I started designing SMP (“Symbolic Manipulation Program”)—the system that would be the forerunner of Mathematica and the Wolfram Language.

And when I mentioned what I was doing to people at CERN they said “You should talk to Tini Veltman”.

And so it was that in December 1979 I flew to Amsterdam, and went to see Tini Veltman. The first thing that struck me was how incongruous the name “Tini” (pronounced “teeny”) seemed. (At the time, I didn’t even know why he was called Tini; I’d only seen his name as “M. Veltman”, and didn’t know “Tini” was short for “Martinus”.) But Tini was a large man, with a large beard—not “teeny” at all. He reminded me of pictures of European academics of old—and, for some reason, particularly of Ludwig Boltzmann.

He was 48 years old; I was 20. He was definitely a bit curious about the “newfangled computer ideas” I was espousing. But generally he took on the mantle of an elder statesman who was letting me in on the secrets of how to build a computer system like SCHOONSCHIP.

I pronounced SCHOONSCHIP “scoon-ship”. He got a twinkle in his eye, and corrected it to a very guttural “scohwn-scchhip” (IPA: [sxon][sxɪp]), explaining that, yes, he’d given it a Dutch name that was hard for non-Dutch people to say. (The Dutch word “schoonschip” means, roughly, “shipshape”—like SCHOONSCHIP was supposed to make one’s algebraic expressions.) Everything in SCHOONSCHIP was built for efficiency. The commands were short. Tini was particularly pleased with YEP for generating intermediate results, which, he said, was mnemonic in Dutch (yes, SCHOONSCHIP may be the only computer system with keywords derived from Dutch).

If you look at the sample SCHOONSCHIP output above, you might notice something a little strange in it. Every number in the (exact) algebraic expression result that’s generated has a decimal point after it. And there’s even a +0. at the end. What’s going on there? Well, that was one of the big secrets Tini was very keen to tell me.

“Floating-point computation is so much faster than integer”, he said. “You should do everything you can in floating point. Only convert it back to exact numbers at the end.” And, yes, it was true that the scientific computers of the time—like the CDC machines he used—had very much been optimized for floating-point arithmetic. He quoted instruction times. He explained that if you do all your arithmetic in “fast floating point”, and then get a 64-bit floating point number out at the end, you can always reverse engineer what rational number it was supposed to be—and that it’s much faster to do this than to keep track of rational numbers exactly through the computation.
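
The "reverse engineer the rational" step can be sketched with continued fractions. The Python below is a minimal illustration under stated assumptions, not SCHOONSCHIP's actual routine. The function name `recover_rational`, the relative tolerance and the denominator bound are all hypothetical choices. It expands the exact binary value of a double into continued-fraction convergents and stops at the first one that reproduces the float to within the tolerance.

```python
import math
from fractions import Fraction

def recover_rational(x, tol=1e-12, max_den=10**9):
    """Guess the exact rational number a floating-point result was meant to be.

    Hypothetical helper: walks the continued-fraction convergents of x and
    returns the first p/q with |x - p/q| <= tol * max(1, |x|), never going past
    denominator max_den. Both thresholds are illustrative choices.
    """
    if not math.isfinite(x):
        raise ValueError("x must be a finite float")
    sign = -1 if x < 0 else 1
    target = Fraction(abs(x))      # the exact binary value stored in the double
    rem = target                   # remainder still to be expanded
    h_prev, h = 0, 1               # numerators   h_{-2}, h_{-1}
    k_prev, k = 1, 0               # denominators k_{-2}, k_{-1}
    while True:
        a = math.floor(rem)        # next partial quotient
        h_next, k_next = a * h + h_prev, a * k + k_prev
        if k_next > max_den:
            break                  # keep the last convergent within the bound
        h_prev, h = h, h_next
        k_prev, k = k, k_next
        if abs(target - Fraction(h, k)) <= tol * max(1.0, float(target)):
            break                  # close enough: accept this convergent
        frac = rem - a
        if frac == 0:
            break                  # expansion terminated exactly
        rem = 1 / frac
    return sign * Fraction(h, k)

print(recover_rational(0.1 + 0.2), recover_rational(1/3 + 1/7))
```

Here the sketch recovers 3/10 from 0.1 + 0.2 and 10/21 from 1/3 + 1/7. Python's standard `Fraction(x).limit_denominator(n)` does a related job (closest fraction with denominator at most n). The sketch also shows where such a scheme can bite: if the true answer has a denominator larger than the bound, or the accumulated rounding error exceeds the tolerance, it will confidently return the wrong rational.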

It was a neat hack. And I bought it. And in fact when we implemented SMP its default “exact” arithmetic worked exactly this way. I’m not sure if it really made computations more efficient. In the end, it got quite tangled up with the rather abstract and general design of SMP, and became something of a millstone. But actually we use somewhat similar ideas in modern Wolfram Language (albeit now with formally verified interval arithmetic) for doing exact computations with things like algebraic numbers.

Tini as Physicist

I’m not sure I ever talked much to Tini about the content of physics; somehow we always ended up discussing computers (or the physics community) instead. But I certainly made use of Tini’s efforts to streamline not just the computer implementation but also the underlying theory of Feynman diagram calculation. I expect—as I have seen so often—that his efforts to streamline the underlying theory were driven by thinking about things in computational terms. But I made particular use of the “Diagrammar” he produced in 1972:

One of the principal methods that was introduced here was what’s called dimensional regularization: the concept of formally computing results in d-dimensional space (with d a continuous variable), then taking the limit d → 4 at the end. It’s an elegant approach, and when I was doing particle physics in the late 1970s, I became quite an enthusiast of it. (In fact, I even came up with an extension of it—based on looking at the angular structure of Gegenbauer functions as d-dimensional spherical functions—that was further developed by Tony Terrano, who worked with me on Feynman diagram computation, and later on SMP.)
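
As a standard textbook illustration of the idea (not taken from "Diagrammar" itself), here is the basic one-loop integral in Minkowski space, evaluated in d dimensions with the conventional parametrization d = 4 - 2ε. Δ stands for a generic combination of masses and external momenta, and the renormalization scale μ that normally makes the logarithm dimensionless is suppressed. The divergence that would appear at d = 4 shows up as a 1/ε pole coming from Γ(ε):

```latex
\begin{aligned}
\int \frac{d^d\ell}{(2\pi)^d}\,\frac{1}{(\ell^2-\Delta)^2}
  &= \frac{i}{(4\pi)^{d/2}}\,\Gamma\!\left(2-\frac{d}{2}\right)\Delta^{\frac{d}{2}-2} \\
  &= \frac{i}{16\pi^2}\left(\frac{1}{\epsilon}-\gamma+\ln 4\pi-\ln\Delta+O(\epsilon)\right),
  \qquad d = 4-2\epsilon,
\end{aligned}
```

using Γ(ε) = 1/ε - γ + O(ε), where γ is the Euler–Mascheroni constant. In the usual schemes the pole (plus some accompanying constants) is subtracted first, and only then is the limit d → 4 taken.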

Back in the 1970s, “continuing to d dimensions” was just thought of as a formal trick. But, curiously enough, in our Physics Project, where dimension is an emergent property, one’s interested in “genuinely d-dimensional” space. And, quite possibly, there are experimentally observable signatures of d ≠ 3 dimensions of space. And in thinking about that, quite independent of Tini, I was just about to pull out my copy of “Diagrammar” again.
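
To make "dimension as an emergent property" a bit more concrete, here is a minimal Python sketch of one standard way to measure dimension on a graph: count the nodes within graph distance r of a point and assume the count grows roughly like r^d, so that d ≈ log(V(2r)/V(r))/log 2. This growth-rate criterion is the general kind of definition used in the Physics Project; the helper names, the choice of r, and the use of ordinary integer lattices as test graphs are illustrative assumptions. On a lattice the estimate creeps up toward the integer dimension as r grows; on a more irregular graph nothing forces it to be an integer.

```python
import math
from collections import deque

def lattice_neighbors(dim):
    """Neighbor function for the infinite integer lattice Z^dim (nodes are tuples)."""
    steps = []
    for axis in range(dim):
        for delta in (-1, 1):
            step = [0] * dim
            step[axis] = delta
            steps.append(tuple(step))

    def neighbors(node):
        return [tuple(a + b for a, b in zip(node, s)) for s in steps]

    return neighbors

def ball_size(start, neighbors, radius):
    """Number of nodes within graph distance `radius` of `start`, via breadth-first search."""
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        node, dist = frontier.popleft()
        if dist == radius:
            continue  # do not expand past the ball's boundary
        for nxt in neighbors(node):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, dist + 1))
    return len(seen)

def effective_dimension(start, neighbors, r):
    """Estimate d assuming ball volume V(r) grows like r**d: d ~ log2(V(2r) / V(r))."""
    v_r = ball_size(start, neighbors, r)
    v_2r = ball_size(start, neighbors, 2 * r)
    return math.log(v_2r / v_r) / math.log(2)

for dim in (1, 2, 3):
    origin = (0,) * dim
    print(dim, round(effective_dimension(origin, lattice_neighbors(dim), 10), 3))
```

For r = 10 the estimates come out a little below 1, 2 and 3, because a finite ball's volume has lower-order corrections to the pure r^d law.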

Tini’s original motivation for writing SCHOONSCHIP had been a specific calculation involving the interaction of photons and putative “intermediate vector bosons”. But by the late 1960s, there was an actual theoretical candidate for what the “intermediate vector boson” might be: a “gauge boson” basically associated with the “gauge symmetry group” SU(2)—and given mass through “spontaneous symmetry breaking” and the “Higgs mechanism”.

But what would happen if one did Feynman diagram calculations in a “gauge theory” like this? Would they have the renormalizability property that Richard Feynman had identified in QED, and that allowed one to not worry about infinities that were nominally generated in calculations? Tini Veltman wanted to figure this out, and soon suggested the problem to his student Gerard ’t Hooft (his coauthor on “Diagrammar”).

Tini had defined the problem and formalized what was needed, but it was ’t Hooft who figured out the math and in the end presented a rather elaborate proof of renormalizability of gauge theories in his 1972 PhD thesis. It was a major and much-heralded result—providing what was seen as key theoretical validation for the first part of what became the Standard Model of particle physics. And it launched ’t Hooft’s career.

Tini Veltman always gave me the impression of someone who wanted to interact—and collaborate—with people. Gerard ’t Hooft has always struck me as being more in the “lone wolf” model of doing physics. I’ve interacted with Gerard from time to time for years (in fact I first met him several years before I met Tini). And it’s been very impressive to see him invent a long sequence of some of the most creative ideas in physics over the past half century. And though it’s not my focus here, I should mention that Gerard got interested in cellular automata in the late 1980s, and a few years ago even wrote a book called The Cellular Automaton Interpretation of Quantum Mechanics. I’d never quite understood what he was talking about, and I suspected that—despite his use of Mathematica—he’d never explored the computational universe enough to develop a true intuition for what goes on there. But actually, quite recently, it looks as if there’s a limiting case of our Physics Project that may just correspond to what Gerard has been talking about—which would be very cool…

But I digress. Starting in 1966 Tini was a professor at Utrecht. And in 1974 Gerard became a professor there too. And even by the time I met Tini in 1979 there were already rumors of a falling-out. Gerard was reported as saying that Tini didn’t understand stuff. Tini was reported as saying that Gerard was “a monster”. And then there was the matter of the Nobel Prize.

As the Standard Model gained momentum, and was increasingly validated by experiments, the proof of renormalizability of gauge theories started to seem more and more like it would earn a Nobel Prize. But who would actually get the prize? Gerard was clear. But what about Tini? There were rumors of letters arguing one way and the other, and stories of scurrilous campaigning.

Prizes are always a complicated matter. They’re usually created to incentivize something, though realistically they’re often as much as anything for the benefit of the giver. But if they’re successful, they tend to come to represent objectives in themselves. Years ago I remember the wife of a well-known physicist advising me to “do something you can win a prize for”. It didn’t make sense to me, and then I realized why. “I want to do things”, I said, “for which nobody’s thought to invent a prize yet”.

Well, the good news is that in 1999, the Nobel Committee decided to award the Nobel Prize to both Gerard and Tini. “Thank goodness” was the general sentiment.

Tini Goes to Michigan

When I visited Tini in Utrecht in 1979 I got the impression that he and his family were very deeply rooted in the Netherlands and would always be there. I knew that Tini had spent time at CERN, and I think I vaguely knew that he’d been quite involved with the neutrino experiments there. But I didn’t know that SCHOONSCHIP wasn’t originally written when Tini was at Utrecht or at CERN: despite the version in the CERN Program Library saying it was “Written in 1967 by M. Veltman at CERN” the first version was actually written right in the heart of what would become Silicon Valley, during the time Tini worked at the then-very-new Stanford Linear Accelerator Center, in 1963.

He’d gone there along with John Bell (of Bell’s inequalities fame), whose “day job” was working on theoretical aspects of neutrino experiments. (Thinking about the foundations of quantum mechanics was not well respected by other physicists at the time.) Curiously, another person at Stanford at the time was Tony Hearn, who was one of the physicists in the discussion on the terrace at CERN. But unlike Tini, he fell into the computer science and John McCarthy orbit at Stanford, and wrote his REDUCE program in LISP.

By the way, in piecing together the story of Tini’s life and times, I just discovered another “small world” detail. It turns out back in 1961 an early version of the intermediate boson calculations that Tini was interested in had been done by two famous physicists, T. D. Lee and C. N. Yang—with the aid of a computer. And they’d been helped by a certain Peter Markstein at IBM—who, along with his wife Vicky Markstein, would be instrumental nearly 30 years later in getting Mathematica to run on IBM RISC systems. But in any case, back in 1961, Lee and Yang apparently wouldn’t give Tini access to the programs Peter Markstein had created—which was why Tini decided to make his own, and to write SCHOONSCHIP to do it.

But back to the main story. In 1981, at the age of 50, Tini transplanted himself and his family from Utrecht to the University of Michigan in Ann Arbor. I suspect the move was a result of the rift with Gerard ’t Hooft. He also spent quite a bit of his time at Fermilab near Chicago, in and around neutrino experiments.

But it was while Tini was in Michigan that I had my next major interaction with him. I had started building SMP right after I saw Tini in 1979, and after a somewhat tortuous effort to choose between CERN and Caltech, I had accepted a faculty position at Caltech. In early 1981 Version 1.0 of SMP was released. And in the effort to figure out how to develop it further (with the initial encouragement of Caltech), I ended up starting my first company. But soon (through a chain of events I’ve described elsewhere) Caltech had a change of heart, and in June 1982 I decided I was going to quit Caltech.

I wrote to Tini—and, somewhat to my surprise, he quickly began to aggressively try to recruit me to Michigan. He wrote me asking what it would take to get me there. Third on his list was the type of position, “research or faculty”. Second was “salary”. But first was “computers”—adding parenthetically “I understand you want a VAX; this needs some detailing”. The University of Michigan did indeed offer me a nice professorship, but—choosing among several possibilities—I ended up going to the Institute for Advanced Study in Princeton, with the result that I never had the chance to interact with Tini at close quarters.

A few years later, I was working on Mathematica, and what would become the Wolfram Language. And, no, I didn’t use a floating-point representation for algebraic coefficients again. Mathematica 1.0 was released in 1988, and shortly after that Tini told me he was writing a new version of SCHOONSCHIP, in a different language. “What language?”, I asked. “68000 assembler”, he said. “You can’t be serious!” I said. But he was, and soon thereafter a new version of SCHOONSCHIP appeared, written in 68000 assembler.

I think Tini somehow never really fully trusted anything higher level than assembler—proudly telling me things he could do by writing right down “at the metal”. I talked about portability. I talked about compiler optimizers. But he wasn’t convinced. And at the time, perhaps he was still correct. But just last week, for example, I got the latest results from benchmarking the symbolic compiler that we have under development for the Wolfram Language: the compiled versions of some pieces of top-level code run 30x faster than custom-written C code. Yes, the machine is probably now smarter even than Tini at being able to create fast code.

At Michigan, alongside his more directly experimentally related work (which, as I now notice, even included a paper related to a particle physics result of mine from 1978), Tini continued his longtime interest in Feynman diagrams. In 1989, he wrote a paper called “Gammatrica”, about the Dirac gamma matrix computations that are the core of many Feynman diagram calculations. And then in 1994 a textbook called Diagrammatica—kind of like “Diagrammar” but with a Mathematica-rhyming ending.
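
The Dirac gamma matrix algebra that "Gammatrica" deals with can be spot-checked numerically. Below is a minimal pure-Python sketch, assuming the Dirac representation of the matrices and metric signature (+,-,-,-); both are conventions chosen here, not taken from Tini's paper. It builds γ^0 to γ^3 as explicit 4×4 complex matrices and checks the anticommutation relation {γ^μ, γ^ν} = 2g^{μν}, together with the standard trace identities Tr(γ^μγ^ν) = 4g^{μν} and Tr(γ^μγ^νγ^ργ^σ) = 4(g^{μν}g^{ρσ} - g^{μρ}g^{νσ} + g^{μσ}g^{νρ}). A symbolic system works with such identities algebraically rather than by brute-force matrix multiplication like this.

```python
from itertools import product

def block(a, b, c, d):
    """Assemble a 4x4 matrix (list of rows) from 2x2 blocks [[a, b], [c, d]]."""
    return [a[r] + b[r] for r in range(2)] + [c[r] + d[r] for r in range(2)]

def scale(s, m):
    """Multiply every entry of matrix m by the scalar s."""
    return [[s * x for x in row] for row in m]

def matmul(a, b):
    """Plain triple-loop product of two square matrices."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def trace(m):
    return sum(m[i][i] for i in range(len(m)))

I2 = [[1, 0], [0, 1]]
Z2 = [[0, 0], [0, 0]]
PAULI = [
    [[0, 1], [1, 0]],
    [[0, -1j], [1j, 0]],
    [[1, 0], [0, -1]],
]

# Dirac representation: gamma^0 = diag(I, -I), gamma^i = [[0, sigma_i], [-sigma_i, 0]]
GAMMA = [block(I2, Z2, Z2, scale(-1, I2))] + [
    block(Z2, s, scale(-1, s), Z2) for s in PAULI
]

METRIC = (1, -1, -1, -1)  # diagonal of g^{mu nu}, signature (+,-,-,-)

def g(mu, nu):
    return METRIC[mu] if mu == nu else 0

def check_clifford(tol=1e-12):
    """Check {gamma^mu, gamma^nu} = 2 g^{mu nu} * identity for all index pairs."""
    for mu, nu in product(range(4), repeat=2):
        ab = matmul(GAMMA[mu], GAMMA[nu])
        ba = matmul(GAMMA[nu], GAMMA[mu])
        for i, j in product(range(4), repeat=2):
            expected = 2 * g(mu, nu) if i == j else 0
            if abs(ab[i][j] + ba[i][j] - expected) > tol:
                return False
    return True

def check_trace2(tol=1e-12):
    """Check Tr(gamma^mu gamma^nu) = 4 g^{mu nu}."""
    return all(
        abs(trace(matmul(GAMMA[mu], GAMMA[nu])) - 4 * g(mu, nu)) <= tol
        for mu, nu in product(range(4), repeat=2)
    )

def check_trace4(tol=1e-12):
    """Check the four-gamma trace identity for all 256 index combinations."""
    for mu, nu, rho, sig in product(range(4), repeat=4):
        m = matmul(matmul(GAMMA[mu], GAMMA[nu]), matmul(GAMMA[rho], GAMMA[sig]))
        expected = 4 * (g(mu, nu) * g(rho, sig) - g(mu, rho) * g(nu, sig)
                        + g(mu, sig) * g(nu, rho))
        if abs(trace(m) - expected) > tol:
            return False
    return True

print(check_clifford(), check_trace2(), check_trace4())
```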

Tini didn’t publish all that many papers but spent quite a bit of time helping set directions for the US particle physics community. Looking at his list of publications, though, one that stands out is a 1991 paper written in collaboration with his daughter Hélène, who had just got her physics PhD at Berkeley (she subsequently went into quantitative finance): “On the Possibility of Resonances in Longitudinally Polarized Vector Boson Scattering”. It’s a nice paper, charmingly “resonant” with things Tini was thinking about in 1961, even comparing the interactions of W particles with interactions between pions of the kind that were all the rage in 1961.

The Later Tini

Tini retired from Michigan in 1996, returned to the Netherlands and set about building a house. The long-awaited Nobel Prize arrived in 1999.

In 2003 Tini published a book, Facts and Mysteries in Elementary Particle Physics, presenting particle physics and its history for a general audience. Interspersed through the book are one-page summaries of various physicists—often with charming little “gossip” tidbits that Tini knew from personal experience, or picked up from his time in the physics community.

One such page describing some experimental physicists ends:

“The CERN terrace, where you can see the Mont Blanc on the horizon, is very popular among high-energy physicists. You can meet there just about everybody in the business. Many initiatives were started there, and many ideas were born in that environment. So far you can still smoke a cigar there.”

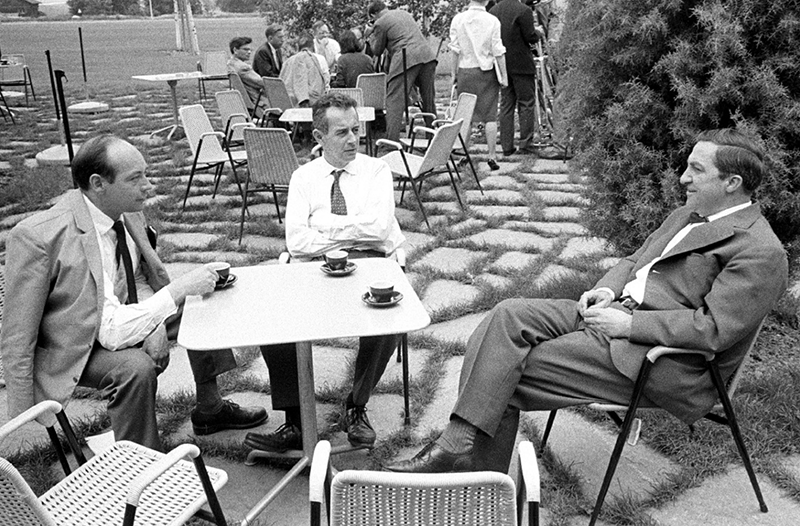

The page has a picture, taken in June 1962—that I rather imagine must mirror what that “symbolic computation origin discussion” looked like (yes, physicists wore ties back then):

Just after Tini won the Nobel Prize, he ran into Rolf Mertig, who was continuing Tini’s tradition of Feynman diagram computation by creating the FeynCalc system for the Wolfram Language. Tini apparently explained that had he not gone into physics, he would have gone into “business”.

I’m not sure if it was before Mathematica 1.0 or after, but I remember Tini telling me that he thought that maybe he should get into the software business. I think Tini felt in some ways frustrated with physics. I remember that when I first met him back in 1979, he spent several hours telling me about issues at CERN. One of the important predictions of what became the Standard Model was the existence of so-called neutral currents (associated with the Z boson). In the end, neutral currents were discovered in 1973. But Tini explained that many years earlier he had started telling people at CERN that they should be able to see neutral currents in their experiments. For years they didn’t listen to him, and when they finally did, it turned out that, expensive as their earlier experiments had been, they’d thrown out the bubble chamber film that had been produced, on which neutral currents should have been visible perhaps 15 years earlier.

When Tini won his Nobel Prize, I sent him a congratulations card. He sent a slightly stiff letter in response:

When Tini met me in 1979, I’m not sure he expected me to just take off and ultimately build something like Mathematica. But his input—and encouragement—back in 1979 was important in giving me the confidence to start down that road. So, thanks Tini for all you did for me, and for the advice—even though your PS advice I think I still haven’t taken….