I Never Expected This

It’s unexpected, surprising—and for me incredibly exciting. To be fair, at some level I’ve been working towards this for nearly 50 years. But it’s just in the last few months that it’s finally come together. And it’s much more wonderful, and beautiful, than I’d ever imagined.

In many ways it’s the ultimate question in natural science: How does our universe work? Is there a fundamental theory? An incredible amount has been figured out about physics over the past few hundred years. But even with everything that’s been done—and it’s very impressive—we still, after all this time, don’t have a truly fundamental theory of physics.

Back when I used to do theoretical physics for a living, I must admit I didn’t think much about trying to find a fundamental theory; I was more concerned about what we could figure out based on the theories we had. And somehow I think I imagined that if there was a fundamental theory, it would inevitably be very complicated.

But in the early 1980s, when I started studying the computational universe of simple programs, I made what was for me a very surprising and important discovery: that even when the underlying rules for a system are extremely simple, the behavior of the system as a whole can be essentially arbitrarily rich and complex.

And this got me thinking: Could the universe work this way? Could it in fact be that underneath all of this richness and complexity we see in physics there are just simple rules? I soon realized that if that was going to be the case, we’d in effect have to go underneath space and time and basically everything we know. Our rules would have to operate at some lower level, and all of physics would just have to emerge.

By the early 1990s I had a definite idea about how the rules might work, and by the end of the 1990s I had figured out quite a bit about their implications for space, time, gravity and other things in physics—and, basically as an example of what one might be able to do with science based on studying the computational universe, I devoted nearly 100 pages to this in my book A New Kind of Science.

I always wanted to mount a big project to take my ideas further. I tried to start around 2004. But pretty soon I got swept up in building Wolfram|Alpha, and the Wolfram Language and everything around it. From time to time I would see physicist friends of mine, and I’d talk about my physics project. There’d be polite interest, but basically the feeling was that finding a fundamental theory of physics was just too hard, and only kooks would attempt it.

It didn’t help that there was something that bothered me about my ideas. The particular way I’d set up my rules seemed a little too inflexible, too contrived. In my life as a computational language designer I was constantly thinking about abstract systems of rules. And every so often I’d wonder if they might be relevant for physics. But I never got anywhere. Until, suddenly, in the fall of 2018, I had a little idea.

It was in some ways simple and obvious, if very abstract. But what was most important about it to me was that it was so elegant and minimal. Finally I had something that felt right to me as a serious possibility for how physics might work. But wonderful things were happening with the Wolfram Language, and I was busy thinking about all the implications of finally having a full-scale computational language.

But then, at our annual Summer School in 2019, there were two young physicists (Jonathan Gorard and Max Piskunov) who were like, “You just have to pursue this!” Physics had been my great passion when I was young, and in August 2019 I had a big birthday and realized that, yes, after all these years I really should see if I can make something work.

So—along with the two young physicists who’d encouraged me—I began in earnest in October 2019. It helped that—after a lifetime of developing them—we now had great computational tools. And it wasn’t long before we started finding what I might call “very interesting things”. We reproduced, more elegantly, what I had done in the 1990s. And from tiny, structureless rules out were coming space, time, relativity, gravity and hints of quantum mechanics.

We were doing zillions of computer experiments, building intuition. And gradually things were becoming clearer. We started understanding how quantum mechanics works. Then we realized what energy is. We found an outline derivation of my late friend and mentor Richard Feynman’s path integral. We started seeing some deep structural connections between relativity and quantum mechanics. Everything just started falling into place. All those things I’d known about in physics for nearly 50 years—and finally we had a way to see not just what was true, but why.

I hadn’t ever imagined anything like this would happen. I expected that we’d start exploring simple rules and gradually, if we were lucky, we’d get hints here or there about connections to physics. I thought maybe we’d be able to have a possible model for the first ![]() seconds of the universe, but we’d spend years trying to see whether it might actually connect to the physics we see today.

In the end, if we’re going to have a complete fundamental theory of physics, we’re going to have to find the specific rule for our universe. And I don’t know how hard that’s going to be. I don’t know if it’s going to take a month, a year, a decade or a century. A few months ago I would also have said that I don’t even know if we’ve got the right framework for finding it.

But I wouldn’t say that anymore. Too much has worked. Too many things have fallen into place. We don’t know if the precise details of how our rules are set up are correct, or how simple or not the final rules may be. But at this point I am certain that the basic framework we have is telling us fundamentally how physics works.

It’s always a test for scientific models to compare how much you put in with how much you get out. And I’ve never seen anything that comes close. What we put in is about as tiny as it could be. But what we’re getting out are huge chunks of the most sophisticated things that are known about physics. And what’s most amazing to me is that at least so far we’ve not run across a single thing where we’ve had to say “oh, to explain that we have to add something to our model”. Sometimes it’s not easy to see how things work, but so far it’s always just been a question of understanding what the model already says, not adding something new.

At the lowest level, the rules we’ve got are about as minimal as anything could be. (Amusingly, their basic structure can be expressed in a fraction of a line of symbolic Wolfram Language code.) And in their raw form, they don’t really engage with all the rich ideas and structure that exist, for example, in mathematics. But as soon as we start looking at the consequences of the rules when they’re applied zillions of times, it becomes clear that they’re very elegantly connected to a lot of wonderful recent mathematics.

There’s something similar with physics, too. The basic structure of our models seems alien and bizarrely different from almost everything that’s been done in physics for at least the past century or so. But as we’ve gotten further in investigating our models something amazing has happened: we’ve found that not just one, but many of the popular theoretical frameworks that have been pursued in physics in the past few decades are actually directly relevant to our models.

I was worried this was going to be one of those “you’ve got to throw out the old” advances in science. It’s not. Yes, the underlying structure of our models is different. Yes, the initial approach and methods are different. And, yes, a bunch of new ideas are needed. But to make everything work we’re going to have to build on a lot of what my physicist friends have been working so hard on for the past few decades.

And then there’ll be the physics experiments. If you’d asked me even a couple of months ago when we’d get anything experimentally testable from our models I would have said it was far away. And that it probably wouldn’t happen until we’d pretty much found the final rule. But it looks like I was wrong. And in fact we’ve already got some good hints of bizarre new things that might be out there to look for.

OK, so what do we need to do now? I’m thrilled to say that I think we’ve found a path to the fundamental theory of physics. We’ve built a paradigm and a framework (and, yes, we’ve built lots of good, practical, computational tools too). But now we need to finish the job. We need to work through a lot of complicated computation, mathematics and physics. And see if we can finally deliver the answer to how our universe fundamentally works.

It’s an exciting moment, and I want to share it. I’m looking forward to being deeply involved. But this isn’t just a project for me or our small team. This is a project for the world. It’s going to be a great achievement when it’s done. And I’d like to see it shared as widely as possible. Yes, a lot of what has to be done requires top-of-the-line physics and math knowledge. But I want to expose everything as broadly as possible, so everyone can be involved in—and I hope inspired by—what I think is going to be a great and historic intellectual adventure.

Today we’re officially launching our Physics Project. From here on, we’ll be livestreaming what we’re doing—sharing whatever we discover in real time with the world. (We’ll also soon be releasing more than 400 hours of video that we’ve already accumulated.) I’m posting all my working materials going back to the 1990s, and we’re releasing all our software tools. We’ll be putting out bulletins about progress, and there’ll be educational programs around the project.

Oh, yes, and we’re putting up a Registry of Notable Universes. It’s already populated with nearly a thousand rules. I don’t think any of the ones in there yet are our own universe—though I’m not completely sure. But sometime—I hope soon—there might just be a rule entered in the Registry that has all the right properties, and that we’ll slowly discover that, yes, this is it—our universe finally decoded.

How It Works

OK, so how does it all work? I’ve written a 448-page technical exposition (yes, I’ve been busy the past few months!). Another member of our team (Jonathan Gorard) has written two 60-page technical papers. And there’s other material available at the project website. But here I’m going to give a fairly non-technical summary of some of the high points.

It all begins with something very simple and very structureless. We can think of it as a collection of abstract relations between abstract elements. Or we can think of it as a hypergraph—or, in simple cases, a graph.

We might have a collection of relations like

{{1, 2}, {2, 3}, {3, 4}, {2, 4}}

that can be represented by a graph like

ResourceFunction["WolframModelPlot"][{{1, 2}, {2, 3}, {3, 4}, {2, 4}},
  VertexLabels -> Automatic]

All we’re specifying here are the relations between elements (like {2,3}). The order in which we state the relations doesn’t matter (although the order within each relation does matter). And when we draw the graph, all that matters is what’s connected to what; the actual layout on the page is just a choice made for visual presentation. It also doesn’t matter what the elements are called. Here I’ve used numbers, but all that matters is that the elements are distinct.

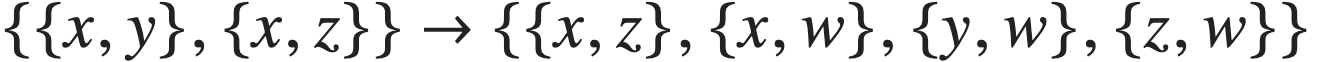

OK, so what do we do with these collections of relations, or graphs? We just apply a simple rule to them, over and over again. Here’s an example of a possible rule:

{{x, y}, {x, z}} → {{x, z}, {x, w}, {y, w}, {z, w}}

What this rule says is to pick up two relations—from anywhere in the collection—and see if the elements in them match the pattern {{x,y},{x,z}} (or, in the Wolfram Language, {{x_,y_},{x_,z_}}), where the two x’s can be anything, but both have to be the same, and the y and z can be anything. If there’s a match, then replace these two relations with the four relations on the right. The w that appears there is a new element that’s being created, and the only requirement is that it’s distinct from all other elements.

We can represent the rule as a transformation of graphs:

RulePlot[ResourceFunction["WolframModel"][
  {{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z, w}}],
  VertexLabels -> Automatic, "RulePartsAspectRatio" -> 0.5]

Now let’s apply the rule once to:

{{1, 2}, {2, 3}, {3, 4}, {2, 4}}

The {2,3} and {2,4} relations get matched, and the rule replaces them with four new relations, so the result is:

{{1, 2}, {3, 4}, {2, 4}, {2, 5}, {3, 5}, {4, 5}}
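As a rough illustration of this single update, here is a minimal sketch in plain Python (it is not the Wolfram Language `WolframModel` function used in this post). The name `apply_rule_once`, the choice of "one more than the largest label" for the new element w, and the left-to-right scan for a match are all assumptions made for the example; with this scan order the first match found happens to be the same {2,3}, {2,4} pair as above.

```python
def apply_rule_once(relations):
    """Apply {{x,y},{x,z}} -> {{x,z},{x,w},{y,w},{z,w}} to the first match found."""
    # Fresh element w: any label not used so far (assumed here: max label + 1).
    w = max(max(r) for r in relations) + 1
    for i, (x1, y) in enumerate(relations):
        for j, (x2, z) in enumerate(relations):
            # Two different relations whose first elements agree match the pattern.
            if i != j and x1 == x2:
                rest = [r for k, r in enumerate(relations) if k not in (i, j)]
                return rest + [(x1, z), (x1, w), (y, w), (z, w)]
    return list(relations)  # no match: the collection is left unchanged


state = [(1, 2), (2, 3), (3, 4), (2, 4)]
print(apply_rule_once(state))
# [(1, 2), (3, 4), (2, 4), (2, 5), (3, 5), (4, 5)]
```

Only the pattern of shared elements matters here: renaming the elements consistently, or listing the relations in a different order, gives an equivalent collection.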

We can represent this result as a graph (which happens to be rendered flipped relative to the graph above):

ResourceFunction["WolframModel"][
  {{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z, w}},
  {{1, 2}, {2, 3}, {3, 4}, {2, 4}}, 1]["FinalStatePlot",
  VertexLabels -> Automatic]

OK, so what happens if we just keep applying the rule over and over? Here’s the result:

ResourceFunction["WolframModel"][
  {{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z, w}},
  {{1, 2}, {2, 3}, {3, 4}, {2, 4}}, 10, "StatesPlotsList"]
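For readers who want to experiment outside the Wolfram Language, repeated updating can be sketched in plain Python. This is only a sketch under stated assumptions: in each step it rewrites a set of non-overlapping matches (each relation is used at most once per step), and it pairs up relations that share a first element in the order they appear. The helper names `step` and `evolve` are hypothetical, and the pairing order is an assumption that may differ from the one used to make the pictures here, so sizes and shapes need not agree in detail.

```python
from collections import defaultdict


def step(relations, next_label):
    """One updating step for {{x,y},{x,z}} -> {{x,z},{x,w},{y,w},{z,w}}."""
    # Group relation indices by their first element x.
    by_first = defaultdict(list)
    for idx, (x, _) in enumerate(relations):
        by_first[x].append(idx)

    used, rewritten = set(), []
    for x, idxs in by_first.items():
        # Pair up relations sharing x; an odd one out is left untouched.
        for a, b in zip(idxs[0::2], idxs[1::2]):
            y, z = relations[a][1], relations[b][1]
            w = next_label              # fresh element for this match
            next_label += 1
            rewritten += [(x, z), (x, w), (y, w), (z, w)]
            used.update((a, b))

    untouched = [r for k, r in enumerate(relations) if k not in used]
    return untouched + rewritten, next_label


def evolve(initial, steps):
    """Return the states [initial, after 1 step, ..., after `steps` steps]."""
    state = list(initial)
    next_label = max(max(r) for r in state) + 1
    history = [state]
    for _ in range(steps):
        state, next_label = step(state, next_label)
        history.append(state)
    return history


history = evolve([(1, 2), (2, 3), (3, 4), (2, 4)], 10)
for t, state in enumerate(history):
    elements = {v for r in state for v in r}
    print(t, len(elements), len(state))  # step, elements, relations
```

Each match removes two relations and adds four, while creating exactly one new element, so the collection can only grow from step to step.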

Let’s do it a few more times, and make a bigger picture:

ResourceFunction["WolframModel"][
  {{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z, w}},
  {{1, 2}, {2, 3}, {3, 4}, {2, 4}}, 14, "FinalStatePlot"]

What happened here? We have such a simple rule. Yet applying this rule over and over again produces something that looks really complicated. It’s not what our ordinary intuition tells us should happen. But actually—as I first discovered in the early 1980s—this kind of intrinsic, spontaneous generation of complexity turns out to be completely ubiquitous among simple rules and simple programs. And for example my book A New Kind of Science is about this whole phenomenon and why it’s so important for science and beyond.

But here what’s important about it is that it’s what’s going to make our universe, and everything in it. Let’s review again what we’ve seen. We started off with a simple rule that just tells us how to transform collections of relations. But what we get out is this complicated-looking object that, among other things, seems to have some definite shape.

We didn’t put in anything about this shape. We just gave a simple rule. And using that simple rule a graph was made. And when we visualize that graph, it comes out looking like it has a definite shape.

If we ignore all matter in the universe, our universe is basically a big chunk of space. But what is that space? We’ve had mathematical idealizations and abstractions of it for two thousand years. But what really is it? Is it made of something, and if so, what?

Well, I think it’s very much like the picture above. A whole bunch of what are essentially abstract points, abstractly connected together. Except that in the picture there are 6704 of these points, whereas in our real universe there might be more like $10^{400}$ of them, or even many more.

All Possible Rules

We don’t (yet) know an actual rule that represents our universe—and it’s almost certainly not the one we just talked about. So let’s discuss what possible rules there are, and what they typically do.

One feature of the rule we used above is that it’s based on collections of “binary relations”, containing pairs of elements (like {2,3}). But the same setup lets us also consider relations with more elements. For example, here’s a collection of two ternary relations:

{{1, 2, 3}, {3, 4, 5}}

We can’t use an ordinary graph to represent things like this, but we can use a hypergraph—a construct where we generalize edges in graphs that connect pairs of nodes to “hyperedges” that connect any number of nodes:

ResourceFunction["WolframModelPlot"][{{1, 2, 3}, {3, 4, 5}},
  VertexLabels -> Automatic]

(Notice that we’re dealing with directed hypergraphs, where the order in which nodes appear in a hyperedge matters. In the picture, the “membranes” are just indicating which nodes are connected to the same hyperedge.)

We can make rules for hypergraphs too:

{{x, y, z}} → {{w, w, y}, {w, x, z}}

RulePlot[ResourceFunction["WolframModel"][
  {{1, 2, 3}} -> {{4, 4, 2}, {4, 1, 3}}]]

And now here’s what happens if we run this rule starting from the simplest possible ternary hypergraph—the ternary self-loop {{0,0,0}}:

ResourceFunction["WolframModel"][
  {{1, 2, 3}} -> {{4, 4, 2}, {4, 1, 3}}, {{0, 0, 0}},
  8]["StatesPlotsList", "MaxImageSize" -> 180]
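To make the bookkeeping for this ternary rule concrete, here is a small plain-Python sketch (not the `WolframModel` function itself). Because the left-hand side of the rule is a single relation, every hyperedge matches and no two matches overlap, so the sketch assumes that each step rewrites every hyperedge once. The name `step_ternary` and the integer labels for new nodes are assumptions for illustration.

```python
def step_ternary(hyperedges, next_label):
    """Apply {{x,y,z}} -> {{w,w,y},{w,x,z}} once to every hyperedge."""
    new_edges = []
    for (x, y, z) in hyperedges:
        w = next_label              # fresh node created by this application
        next_label += 1
        new_edges += [(w, w, y), (w, x, z)]
    return new_edges, next_label


state, next_label = [(0, 0, 0)], 1   # start from the ternary self-loop
for _ in range(8):
    state, next_label = step_ternary(state, next_label)

nodes = {v for e in state for v in e}
print(len(state), len(nodes))   # 256 256
```

Under this assumption the number of hyperedges doubles at every step, and since x, y and z all reappear on the right-hand side, no node is ever lost.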

Alright, so what happens if we just start picking simple rules at random? Here are some of the things they do:

urules24 = Import[
  "https://www.wolframcloud.com/obj/wolframphysics/Data/22-24-2x0-unioned-summary.wxf"];
SeedRandom[6783];
GraphicsGrid[
  Partition[
    ResourceFunction["WolframModelPlot"][List @@@ EdgeList[#]] & /@
      Take[Select[
        ParallelMap[
          UndirectedGraph[
            Rule @@@ ResourceFunction["WolframModel"][#, {{0, 0}, {0, 0}}, 8, "FinalState"],
            GraphLayout -> "SpringElectricalEmbedding"] &,
          #Rule & /@ RandomSample[urules24, 150]],
        EdgeCount[#] > 10 && ConnectedGraphQ[#] &], 60], 10],
  ImageSize -> Full]

Somehow this looks very zoological (and, yes, these models are definitely relevant for things other than fundamental physics—though probably particularly molecular-scale construction). But basically what we see here is that there are various common forms of behavior, some simple, and some not.

Here are some samples of the kinds of things we see:

GraphicsGrid[
  Partition[
    ParallelMap[
      ResourceFunction["WolframModel"][#[[1]], #[[2]], #[[3]], "FinalStatePlot"] &,
      {{{{1, 2}, {1, 3}} -> {{1, 2}, {1, 4}, {2, 4}, {4, 3}}, {{0, 0}, {0, 0}}, 12},
       {{{1, 2}, {1, 3}} -> {{1, 4}, {1, 4}, {2, 4}, {3, 2}}, {{0, 0}, {0, 0}}, 10},
       {{{1, 2}, {1, 3}} -> {{2, 2}, {2, 4}, {1, 4}, {3, 4}}, {{0, 0}, {0, 0}}, 10},
       {{{1, 2}, {1, 3}} -> {{2, 3}, {2, 4}, {3, 4}, {1, 4}}, {{0, 0}, {0, 0}}, 10},
       {{{1, 2}, {1, 3}} -> {{2, 3}, {2, 4}, {3, 4}, {4, 1}}, {{0, 0}, {0, 0}}, 12},
       {{{1, 2}, {1, 3}} -> {{2, 4}, {2, 1}, {4, 1}, {4, 3}}, {{0, 0}, {0, 0}}, 9},
       {{{1, 2}, {1, 3}} -> {{2, 4}, {2, 4}, {1, 4}, {3, 4}}, {{0, 0}, {0, 0}}, 10},
       {{{1, 2}, {1, 3}} -> {{2, 4}, {2, 4}, {2, 1}, {3, 4}}, {{0, 0}, {0, 0}}, 10},
       {{{1, 2}, {1, 3}} -> {{4, 1}, {1, 4}, {4, 2}, {4, 3}}, {{0, 0}, {0, 0}}, 12},
       {{{1, 2}, {2, 3}} -> {{1, 2}, {2, 1}, {4, 1}, {4, 3}}, {{0, 0}, {0, 0}}, 10},
       {{{1, 2}, {2, 3}} -> {{1, 3}, {1, 4}, {3, 4}, {3, 2}}, {{0, 0}, {0, 0}}, 10},
       {{{1, 2}, {2, 3}} -> {{2, 3}, {2, 4}, {3, 4}, {1, 2}}, {{0, 0}, {0, 0}}, 9}}],
    4], ImageSize -> Full]

And the big question is: if we were to run rules like these long enough, would they end up making something that reproduces our physical universe? Or, put another way, out in this computational universe of simple rules, can we find our physical universe?

A big question, though, is: How would we know? What we’re seeing here are the results of applying rules a few thousand times; in our actual universe they may have been applied $10^{500}$ times so far, or even more. And it’s not easy to bridge that gap. And we have to work it from both sides. First, we have to use the best summary of how our universe operates that physics has given us over the past few centuries. And second, we have to go as far as we can in figuring out what our rules actually do.

And here there’s potentially a fundamental problem: the phenomenon of computational irreducibility. One of the great achievements of the mathematical sciences, starting about three centuries ago, has been delivering equations and formulas that basically tell you how a system will behave without you having to trace each step in what the system does. But many years ago I realized that in the computational universe of possible rules, this very often isn’t possible. Instead, even if you know the exact rule that a system follows, you may still not be able to work out what the system will do except by essentially just tracing every step it takes.

One might imagine that—once we know the rule for some system—then with all our computers and brainpower we’d always be able to “jump ahead” and work out what the system would do. But actually there’s something I call the Principle of Computational Equivalence, which says that almost any time the behavior of a system isn’t obviously simple, it’s computationally as sophisticated as anything. So we won’t be able to “outcompute” it—and to work out what it does will take an irreducible amount of computational work.

Well, for our models of the universe this is potentially a big problem. Because we won’t be able to get even close to running those models for as long as the universe does. And at the outset it’s not clear that we’ll be able to tell enough from what we can do to see if it matches up with physics.

But the big recent surprise for me is that we seem to be lucking out. We do know that whenever there’s computational irreducibility in a system, there are also an infinite number of pockets of computational reducibility. But it’s completely unclear whether in our case those pockets will line up with things we know from physics. And the surprise is that it seems a bunch of them do.

What Is Space?

Let’s look at a particular, simple rule from our infinite collection:

{{x, y, y}, {z, x, u}} → {{y, v, y}, {y, z, v}, {u, v, v}}

RulePlot[ResourceFunction["WolframModel"][
  {{1, 2, 2}, {3, 1, 4}} -> {{2, 5, 2}, {2, 3, 5}, {4, 5, 5}}]]

Here’s what it does:

ResourceFunction["WolframModelPlot"][#, ImageSize -> 50] & /@
  ResourceFunction["WolframModel"][
    {{{1, 2, 2}, {3, 1, 4}} -> {{2, 5, 2}, {2, 3, 5}, {4, 5, 5}}},
    {{0, 0, 0}, {0, 0, 0}}, 20, "StatesList"]

And after a while this is what happens:

Row[Append[
  Riffle[ResourceFunction["WolframModel"][
      {{1, 2, 2}, {3, 1, 4}} -> {{2, 5, 2}, {2, 3, 5}, {4, 5, 5}},
      {{0, 0, 0}, {0, 0, 0}}, #, "FinalStatePlot"] & /@ {200, 500}, " ... "],
  " ..."]]

It’s basically making us a very simple “piece of space”. If we keep on going longer and longer it’ll make a finer and finer mesh, to the point where what we have is almost indistinguishable from a piece of a continuous plane.

Here’s a different rule:

{{x, x, y}, {z, u, x}} → {{u, u, z}, {v, u, v}, {v, y, x}}

RulePlot[ResourceFunction["WolframModel"][
  {{x, x, y}, {z, u, x}} -> {{u, u, z}, {v, u, v}, {v, y, x}}]]

ResourceFunction["WolframModelPlot"][#, ImageSize -> 50] & /@
  ResourceFunction["WolframModel"][
    {{1, 1, 2}, {3, 4, 1}} -> {{4, 4, 3}, {5, 4, 5}, {5, 2, 1}},
    {{0, 0, 0}, {0, 0, 0}}, 20, "StatesList"]

ResourceFunction["WolframModel"][
  {{1, 1, 2}, {3, 4, 1}} -> {{4, 4, 3}, {5, 4, 5}, {5, 2, 1}},
  {{0, 0, 0}, {0, 0, 0}}, 2000, "FinalStatePlot"]

It looks like it’s “trying to make” something 3D. Here’s another rule:

{{x, y, z}, {u, y, v}} → {{w, z, x}, {z, w, u}, {x, y, w}}

RulePlot[ResourceFunction["WolframModel"][
  {{1, 2, 3}, {4, 2, 5}} -> {{6, 3, 1}, {3, 6, 4}, {1, 2, 6}}]]

ResourceFunction["WolframModelPlot"][#, ImageSize -> 50] & /@
  ResourceFunction["WolframModel"][
    {{x, y, z}, {u, y, v}} -> {{w, z, x}, {z, w, u}, {x, y, w}},
    {{0, 0, 0}, {0, 0, 0}}, 20, "StatesList"]

ResourceFunction["WolframModel"][
  {{1, 2, 3}, {4, 2, 5}} -> {{6, 3, 1}, {3, 6, 4}, {1, 2, 6}},
  {{0, 0, 0}, {0, 0, 0}}, 1000, "FinalStatePlot"]

Isn’t this strange? We have a rule that’s just specifying how to rewrite pieces of an abstract hypergraph, with no notion of geometry, or anything about 3D space. And yet it produces a hypergraph that’s naturally laid out as something that looks like a 3D surface.

Even though the only thing that’s really here is connections between points, we can “guess” where a surface might be, then we can show the result in 3D:

ResourceFunction["GraphReconstructedSurface"][
  ResourceFunction["WolframModel"][
    {{1, 2, 3}, {4, 2, 5}} -> {{6, 3, 1}, {3, 6, 4}, {1, 2, 6}},
    {{0, 0, 0}, {0, 0, 0}}, 2000, "FinalState"]]

If we keep going, then like the example of the plane, the mesh will get finer and finer, until basically our rule has grown us—point by point, connection by connection—something that’s like a continuous 3D surface of the kind you might study in a calculus class. Of course, in some sense, it’s not “really” that surface: it’s just a hypergraph that represents a bunch of abstract relations—but somehow the pattern of those relations gives it a structure that’s a closer and closer approximation to the surface.

And this is basically how I think space in the universe works. Underneath, it’s a bunch of discrete, abstract relations between abstract points. But at the scale we’re experiencing it, the pattern of relations it has makes it seem like continuous space of the kind we’re used to. It’s a bit like what happens with, say, water. Underneath, it’s a bunch of discrete molecules bouncing around. But to us it seems like a continuous fluid.

Needless to say, people have thought that space might ultimately be discrete ever since antiquity. But in modern physics there was never a way to make it work—and anyway it was much more convenient for it to be continuous, so one could use calculus. But now it’s looking like the idea of space being discrete is actually crucial to getting a fundamental theory of physics.

The Dimensionality of Space

A very fundamental fact about space as we experience it is that it is three-dimensional. So can our rules reproduce that? Two of the rules we just saw produce what we can easily recognize as two-dimensional surfaces—in one case flat, in the other case arranged in a certain shape. Of course, these are very bland examples of (two-dimensional) space: they are effectively just simple grids. And while this is what makes them easy to recognize, it also means that they’re not actually much like our universe, where there’s in a sense much more going on.

So, OK, take a case like:

ResourceFunction["WolframModel"][
  {{1, 2, 3}, {4, 3, 5}} -> {{3, 5, 2}, {5, 2, 4}, {2, 1, 6}},
  {{0, 0, 0}, {0, 0, 0}}, 22, "FinalStatePlot"]

If we were to go on long enough, would this make something like space, and, if so, with how many dimensions? To know the answer, we have to have some robust way to measure dimension. But remember, the pictures we’re drawing are just visualizations; the underlying structure is a bunch of discrete relations defining a hypergraph—with no information about coordinates, or geometry, or even topology. And, by the way, to emphasize that point, here is the same graph—with exactly the same connectivity structure—rendered four different ways:

GridGraph[{10, 10}, GraphLayout -> #,
  VertexStyle -> ResourceFunction["WolframPhysicsProjectStyleData"]["SpatialGraph", "VertexStyle"],
  EdgeStyle -> ResourceFunction["WolframPhysicsProjectStyleData"]["SpatialGraph", "EdgeLineStyle"]] & /@
  {"SpringElectricalEmbedding", "TutteEmbedding", "RadialEmbedding", "DiscreteSpiralEmbedding"}

But getting back to the question of dimension, recall that the area of a circle is $\pi r^2$; the volume of a sphere is $\frac{4}{3}\pi r^3$. In general, the “volume” of the d-dimensional analog of a sphere is a constant multiplied by $r^d$. But now think about our hypergraph. Start at some point in the hypergraph. Then follow r hyperedges in all possible ways. You’ve effectively made the analog of a “spherical ball” in the hypergraph. Here are examples for graphs corresponding to 2D and 3D lattices:

MakeBallPicture[g_, rmax_] :=
  Module[{gg = UndirectedGraph[g], cg}, cg = GraphCenter[gg];
    Table[HighlightGraph[gg, NeighborhoodGraph[gg, cg, r]], {r, 0, rmax}]];
Graph[#, ImageSize -> 60,
  VertexStyle -> ResourceFunction["WolframPhysicsProjectStyleData"]["SpatialGraph", "VertexStyle"],
  EdgeStyle -> ResourceFunction["WolframPhysicsProjectStyleData"]["SpatialGraph", "EdgeLineStyle"]] & /@
  MakeBallPicture[GridGraph[{11, 11}], 7]

MakeBallPicture[g_, rmax_] :=
  Module[{gg = UndirectedGraph[g], cg}, cg = GraphCenter[gg];
    Table[HighlightGraph[gg, NeighborhoodGraph[gg, cg, r]], {r, 0, rmax}]];
Graph[#, ImageSize -> 80,
  VertexStyle -> ResourceFunction["WolframPhysicsProjectStyleData"]["SpatialGraph", "VertexStyle"],
  EdgeStyle -> ResourceFunction["WolframPhysicsProjectStyleData"]["SpatialGraph", "EdgeLineStyle"]] & /@
  MakeBallPicture[GridGraph[{7, 7, 7}], 5]

And if you now count the number of points reached by going “graph distance r” (i.e. by following r connections in the graph) you’ll find in these two cases that they indeed grow like $r^2$ and $r^3$.
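This counting is easy to reproduce. The sketch below, in plain Python, does a breadth-first search from the center of a square or cubic lattice and reports how many points lie within graph distance r. The function name `ball_sizes` and the lattice size (11 points per side, chosen so that a ball of radius 5 never touches the boundary) are assumptions for the example, not the exact graphs pictured above.

```python
from collections import deque
from itertools import product


def ball_sizes(dim, side, rmax):
    """Number of lattice points within graph distance r of the center, r = 0..rmax.

    The lattice is a side^dim grid with nearest-neighbor connections.
    """
    center = (side // 2,) * dim
    dist = {center: 0}
    queue = deque([center])
    while queue:                          # breadth-first search from the center
        p = queue.popleft()
        if dist[p] == rmax:
            continue
        for axis, delta in product(range(dim), (-1, 1)):
            q = p[:axis] + (p[axis] + delta,) + p[axis + 1:]
            if all(0 <= c < side for c in q) and q not in dist:
                dist[q] = dist[p] + 1
                queue.append(q)
    return [sum(1 for d in dist.values() if d <= r) for r in range(rmax + 1)]


print(ball_sizes(2, 11, 5))   # [1, 5, 13, 25, 41, 61]
print(ball_sizes(3, 11, 5))   # [1, 7, 25, 63, 129, 231]
```

Away from the boundary these counts are $2r^2 + 2r + 1$ in 2D and $(2r+1)(2r^2+2r+3)/3$ in 3D, so the leading behavior is indeed $r^2$ and $r^3$.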

So this gives us a way to measure the effective dimension of our hypergraphs. Just start at a particular point and see how many points you reach by going r steps:

gg = UndirectedGraph[
  ResourceFunction["HypergraphToGraph"][
    ResourceFunction["WolframModel"][
      {{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z, w}},
      {{1, 2}, {1, 3}}, 11, "FinalState"]]];
With[{cg = GraphCenter[gg]},
  Table[HighlightGraph[gg, NeighborhoodGraph[gg, cg, r], ImageSize -> 90], {r, 6}]]

Now to work out effective dimension, we in principle just have to fit the results to $r^d$. It’s a bit complicated, though, because we need to avoid small r (where every detail of the hypergraph is going to matter) and large r (where we’re hitting the edge of the hypergraph), and we also need to think about how our “space” is refining as the underlying system evolves. But in the end we can generate a series of fits for the effective dimension, and in this case these say that the effective dimension is about 2.7:

HypergraphDimensionEstimateList[hg_] :=
  ResourceFunction["LogDifferences"][
    MeanAround /@ Transpose[
      Values[ResourceFunction["HypergraphNeighborhoodVolumes"][hg, All, Automatic]]]];
ListLinePlot[
  Select[Length[#] > 3 &][
    HypergraphDimensionEstimateList /@
      Drop[ResourceFunction["WolframModel"][
        {{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z, w}},
        {{1, 2}, {1, 3}}, 16, "StatesList"], 4]],
  Frame -> True,
  PlotStyle -> {Hue[0.9849884156577183, 0.844661839156126, 0.63801],
    Hue[0.05, 0.9493847125498949, 0.954757],
    Hue[0.0889039442504032, 0.7504362741954692, 0.873304],
    Hue[0.06, 1., 0.8], Hue[0.12, 1., 0.9], Hue[0.08, 1., 1.],
    Hue[0.98654716551403, 0.6728487861309527, 0.733028],
    Hue[0.04, 0.68, 0.9400000000000001],
    Hue[0.9945149844324427, 0.9892162267509705, 0.823529],
    Hue[0.9908289627180552, 0.4, 0.9]}]

If we do the same thing for

ResourceFunction["WolframModel"][
  {{1, 2, 2}, {3, 1, 4}} -> {{2, 5, 2}, {2, 3, 5}, {4, 5, 5}},
  {{0, 0, 0}, {0, 0, 0}}, 200, "FinalStatePlot"]

it’s limiting to dimension 2, as it should:

CenteredDimensionEstimateList[g_Graph] :=
  ResourceFunction["LogDifferences"][
    N[First[Values[
      ResourceFunction["GraphNeighborhoodVolumes"][g, GraphCenter[g]]]]]];
Show[ListLinePlot[
    Table[CenteredDimensionEstimateList[
      UndirectedGraph[
        ResourceFunction["HypergraphToGraph"][
          ResourceFunction["WolframModel"][
            {{1, 2, 2}, {3, 1, 4}} -> {{2, 5, 2}, {2, 3, 5}, {4, 5, 5}},
            {{0, 0, 0}, {0, 0, 0}}, t, "FinalState"]]]], {t, 500, 2500, 500}],
    Frame -> True,
    PlotStyle -> {Hue[0.9849884156577183, 0.844661839156126, 0.63801],
      Hue[0.05, 0.9493847125498949, 0.954757],
      Hue[0.0889039442504032, 0.7504362741954692, 0.873304],
      Hue[0.06, 1., 0.8], Hue[0.12, 1., 0.9], Hue[0.08, 1., 1.],
      Hue[0.98654716551403, 0.6728487861309527, 0.733028],
      Hue[0.04, 0.68, 0.9400000000000001],
      Hue[0.9945149844324427, 0.9892162267509705, 0.823529],
      Hue[0.9908289627180552, 0.4, 0.9]}],
  Plot[2, {r, 0, 50}, PlotStyle -> Dotted]]

What does the fractional dimension mean? Well, consider fractals, which our rules can easily make:

{{x, y, z}} → {{x, u, w}, {y, v, u}, {z, w, v}}

✕

RulePlot[ResourceFunction[

"WolframModel"][{{1, 2, 3}} -> {{1, 4, 6}, {2, 5, 4}, {3, 6, 5}}]]

|

✕

ResourceFunction["WolframModelPlot"][#, "MaxImageSize" -> 100] & /@

ResourceFunction[

"WolframModel"][{{1, 2, 3}} -> {{1, 4, 6}, {2, 5, 4}, {3, 6,

5}}, {{0, 0, 0}}, 6, "StatesList"]

|

If we measure the dimension here we get 1.58, the usual fractal dimension $\log_2 3$ for a Sierpiński structure:

✕

HypergraphDimensionEstimateList[hg_] :=

ResourceFunction["LogDifferences"][

MeanAround /@

Transpose[

Values[ResourceFunction["HypergraphNeighborhoodVolumes"][hg, All,

Automatic]]]]; Show[

ListLinePlot[

Drop[HypergraphDimensionEstimateList /@

ResourceFunction[

"WolframModel"][{{1, 2, 3}} -> {{1, 4, 6}, {2, 5, 4}, {3, 6,

5}}, {{0, 0, 0}}, 8, "StatesList"], 2],

PlotStyle -> {Hue[0.9849884156577183, 0.844661839156126, 0.63801],

Hue[0.05, 0.9493847125498949, 0.954757], Hue[

0.0889039442504032, 0.7504362741954692, 0.873304], Hue[

0.06, 1., 0.8], Hue[0.12, 1., 0.9], Hue[0.08, 1., 1.], Hue[

0.98654716551403, 0.6728487861309527, 0.733028], Hue[

0.04, 0.68, 0.9400000000000001], Hue[

0.9945149844324427, 0.9892162267509705, 0.823529], Hue[

0.9908289627180552, 0.4, 0.9]}, Frame -> True,

PlotRange -> {0, Automatic}],

Plot[Log[2, 3], {r, 0, 150}, PlotStyle -> {Dotted}]]

|
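The value 1.58 is what the standard self-similarity argument gives. As a brief worked step (assuming the usual definition of similarity dimension): doubling the linear size of a Sierpiński triangle yields three copies of the original, so the dimension d satisfies

```latex
\[
2^{d} = 3
\quad\Longrightarrow\quad
d = \log_2 3 = \frac{\ln 3}{\ln 2} \approx 1.585
\]
```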

Our rule above doesn’t create a structure that’s as regular as this. In fact, even though the rule itself is completely deterministic, the structure it makes looks quite random. But what our measurements suggest is that when we keep running the rule it produces something that’s like 2.7-dimensional space.

Of course, 2.7 is not 3, and presumably this particular rule isn’t the one for our particular universe (though it’s not clear what effective dimension it’d have if we ran it $10^{100}$ steps). But the process of measuring dimension shows an example of how we can start making “physics-connectable” statements about the behavior of our rules.

By the way, we’ve been talking about “making space” with our models. But actually, we’re not just trying to make space; we’re trying to make everything in the universe. In standard current physics, there’s space, described mathematically as a manifold and serving as a kind of backdrop, and then there’s everything that’s in space, all the matter and particles and planets and so on.

But in our models there’s in a sense nothing but space—and in a sense everything in the universe must be “made of space”. Or, put another way, it’s the exact same hypergraph that’s giving us the structure of space, and everything that exists in space.

So what this means is that, for example, a particle like an electron or a photon must correspond to some local feature of the hypergraph, a bit like in this toy example:

✕

Graph[EdgeAdd[

EdgeDelete[

NeighborhoodGraph[

IndexGraph@ResourceFunction["HexagonalGridGraph"][{6, 5}], {42,

48, 54, 53, 47, 41}, 4], {30 <-> 29, 42 <-> 41}], {30 <-> 41,

42 <-> 29}],

VertexSize -> {Small,

Alternatives @@ {30, 36, 42, 41, 35, 29} -> Large},

EdgeStyle -> {ResourceFunction["WolframPhysicsProjectStyleData"][

"SpatialGraph", "EdgeLineStyle"],

Alternatives @@ {30 \[UndirectedEdge] 24, 24 \[UndirectedEdge] 18,

18 \[UndirectedEdge] 17, 17 \[UndirectedEdge] 23,

23 \[UndirectedEdge] 29, 29 \[UndirectedEdge] 35,

35 \[UndirectedEdge] 34, 34 \[UndirectedEdge] 40,

40 \[UndirectedEdge] 46, 46 \[UndirectedEdge] 52,

52 \[UndirectedEdge] 58, 58 \[UndirectedEdge] 59,

59 \[UndirectedEdge] 65, 65 \[UndirectedEdge] 66,

66 \[UndirectedEdge] 60, 60 \[UndirectedEdge] 61,

61 \[UndirectedEdge] 55, 55 \[UndirectedEdge] 49,

49 \[UndirectedEdge] 54, 49 \[UndirectedEdge] 43,

43 \[UndirectedEdge] 37, 37 \[UndirectedEdge] 36,

36 \[UndirectedEdge] 30, 30 \[UndirectedEdge] 41,

42 \[UndirectedEdge] 29, 36 \[UndirectedEdge] 42,

35 \[UndirectedEdge] 41, 41 \[UndirectedEdge] 47,

47 \[UndirectedEdge] 53, 53 \[UndirectedEdge] 54,

54 \[UndirectedEdge] 48, 48 \[UndirectedEdge] 42} ->

Directive[AbsoluteThickness[2.5], Darker[Red, .2]]},

VertexStyle ->

ResourceFunction["WolframPhysicsProjectStyleData"]["SpatialGraph",

"VertexStyle"]]

|

To give a sense of scale, though, I have an estimate that says that $10^{200}$ times more “activity” in the hypergraph that represents our universe is going into “maintaining the structure of space” than is going into maintaining all the matter we know exists in the universe.

Curvature in Space & Einstein’s Equations

Here are a few structures that simple examples of our rules make:

✕

GraphicsRow[{ResourceFunction[

"WolframModel"][{{1, 2, 2}, {1, 3, 4}} -> {{4, 5, 5}, {5, 3,

2}, {1, 2, 5}}, {{0, 0, 0}, {0, 0, 0}}, 1000, "FinalStatePlot"],

ResourceFunction[

"WolframModel"][{{1, 1, 2}, {1, 3, 4}} -> {{4, 4, 5}, {5, 4,

2}, {3, 2, 5}}, {{0, 0, 0}, {0, 0, 0}}, 1000, "FinalStatePlot"],

ResourceFunction[

"WolframModel"][{{1, 1, 2}, {3, 4, 1}} -> {{3, 3, 5}, {2, 5,

1}, {2, 6, 5}}, {{0, 0, 0}, {0, 0, 0}}, 2000,

"FinalStatePlot"]}, ImageSize -> Full]

|

But while all of these look like surfaces, they’re all obviously different. And one way to characterize them is by their local curvature. Well, it turns out that in our models, curvature is a concept closely related to dimension—and this fact will actually be critical in understanding, for example, how gravity arises.

But for now, let’s talk about how one would measure curvature on a hypergraph. Normally the area of a circle is $\pi r^2$. But let’s imagine that we’ve drawn a circle on the surface of a sphere, and now we’re measuring the area on the sphere that’s inside the circle:

✕

cappedSphere[angle_] :=

Module[{u, v},

With[{spherePoint = {Cos[u] Sin[v], Sin[u] Sin[v], Cos[v]}},

Graphics3D[{First@

ParametricPlot3D[spherePoint, {v, #1, #2}, {u, 0, 2 \[Pi]},

Mesh -> None, ##3] & @@@ {{angle, \[Pi],

PlotStyle -> Lighter[Yellow, .5]}, {0, angle,

PlotStyle -> Lighter[Red, .3]}},

First@ParametricPlot3D[

spherePoint /. v -> angle, {u, 0, 2 \[Pi]},

PlotStyle -> Darker@Red]}, Boxed -> False,

SphericalRegion -> False, Method -> {"ShrinkWrap" -> True}]]];

Show[GraphicsRow[Riffle[cappedSphere /@ {0.3, Pi/6, .8}, Spacer[30]]],

ImageSize -> 250]

|

This area is no longer $\pi r^2$. Instead it’s $2\pi a^2\left(1-\cos\frac{r}{a}\right) = \pi r^2\left(1-\frac{r^2}{12a^2}+\frac{r^4}{360a^4}-\cdots\right)$, where a is the radius of the sphere. In other words, as the radius of the circle gets bigger, the effect of being on the sphere is ever more important. (On the surface of the Earth, imagine a circle drawn around the North Pole; once it gets to the equator, it can never get any bigger.)

If we generalize to d dimensions, it turns out the formula for the growth rate of the volume is $r^d\left(1-\frac{r^2}{6(d+2)}R+\cdots\right)$, where R is a mathematical object known as the Ricci scalar curvature.

So what this all means is that if we look at the growth rates of spherical balls in our hypergraphs, we can expect two contributions: a leading one of order $r^d$ that corresponds to effective dimension, and a “correction” of order $r^2$ that represents curvature.
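To make the two contributions concrete, here is a small check on the sphere. It is a sketch that assumes the standard spherical-cap area formula $2\pi a^2(1-\cos(r/a))$ for a circle of geodesic radius r on a sphere of radius a; `capArea` is a name chosen for this example. Expanding in r shows a leading flat-space term and a relative correction of order $r^2$:

```wolfram
capArea[r_, a_] := 2 Pi a^2 (1 - Cos[r/a]);

(* Series expansion around r = 0, keeping terms up to r^6 *)
expansion = Normal[Series[capArea[r, a], {r, 0, 6}]]
(* Pi r^2 - Pi r^4/(12 a^2) + Pi r^6/(360 a^4) *)

(* Relative size of the first correction compared with the flat-space term *)
correction = Simplify[Coefficient[expansion, r, 4]/Coefficient[expansion, r, 2]]
(* -1/(12 a^2) *)
```

For a sphere of radius a the Ricci scalar is $R = 2/a^2$, so with d = 2 a correction of the standard form $-\frac{r^2}{6(d+2)}R$ equals $-\frac{r^2}{12a^2}$, which matches the expansion.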

Here’s an example. Instead of giving a flat estimate of dimension (here equal to 2), we have something that dips down, reflecting the positive (“sphere-like”) curvature of the surface:

✕

res = CloudGet["https://wolfr.am/L1ylk12R"];

GraphicsRow[{ResourceFunction["WolframModelPlot"][

ResourceFunction[

"WolframModel"][{{1, 2, 3}, {4, 2, 5}} -> {{6, 3, 1}, {3, 6,

4}, {1, 2, 6}}, {{0, 0, 0}, {0, 0, 0}}, 800, "FinalState"]],

ListLinePlot[res, Frame -> True,

PlotStyle -> {Hue[0.9849884156577183, 0.844661839156126, 0.63801],

Hue[0.05, 0.9493847125498949, 0.954757], Hue[

0.0889039442504032, 0.7504362741954692, 0.873304], Hue[

0.06, 1., 0.8], Hue[0.12, 1., 0.9], Hue[0.08, 1., 1.], Hue[

0.98654716551403, 0.6728487861309527, 0.733028], Hue[

0.04, 0.68, 0.9400000000000001], Hue[

0.9945149844324427, 0.9892162267509705, 0.823529], Hue[

0.9908289627180552, 0.4, 0.9]}]}]

|

What is the significance of curvature? One thing is that it has implications for geodesics. A geodesic is the shortest path between two points. In ordinary flat space, geodesics are just straight lines. But when there’s curvature, the geodesics are curved:

✕

(*https://www.wolframcloud.com/obj/wolframphysics/TechPaper-Programs/\

Section-04/Geodesics-01.wl*)

CloudGet["https://wolfr.am/L1PH6Rne"];

hyperboloidGeodesics = Table[

Part[

NDSolve[{Sinh[

2 u[t]] ((2 Derivative[1][u][t]^2 - Derivative[1][v][t]^2)/(

2 Cosh[2 u[t]])) + Derivative[2][u][t] == 0, ((2 Tanh[

u[t]]) Derivative[1][u][t]) Derivative[1][v][t] + Derivative[2][v][

t] == 0, u[0] == -0.9, v[0] == v0, u[1] == 0.9, v[1] == v0}, {

u[t],

v[t]}, {t, 0, 1}, MaxSteps -> Infinity], 1], {v0,

Range[-0.1, 0.1, 0.025]}];

{SphereGeodesics[Range[-.1, .1, .025]],

PlaneGeodesics[Range[-.1, .1, .025]],

Show[ParametricPlot3D[{Sinh[u], Cosh[u] Sin[v],

Cos[v] Cosh[u]}, {u, -1, 1}, {v, -\[Pi]/3, \[Pi]/3},

Mesh -> False, Boxed -> False, Axes -> False, PlotStyle -> color],

ParametricPlot3D[{Sinh[u[t]], Cosh[u[t]] Sin[v[t]],

Cos[v[t]] Cosh[u[t]]} /. #, {t, 0, 1}, PlotStyle -> Red] & /@

hyperboloidGeodesics, ViewAngle -> 0.3391233203265557`,

ViewCenter -> {{0.5`, 0.5`, 0.5`}, {0.5265689095305934`,

0.5477310383268459`}},

ViewPoint -> {1.7628482856617167`, 0.21653966523483362`,

2.8801868854502355`},

ViewVertical -> {-0.1654573174671554`, 0.1564093539158781`,

0.9737350718261054`}]}

|

In the case of positive curvature, bundles of geodesics converge; for negative curvature they diverge. But, OK, even though geodesics were originally defined for continuous space (actually, as the name suggests, for paths on the surface of the Earth), one can also have them in graphs (and hypergraphs). And it’s the same story: the geodesic is the shortest path between two points in the graph (or hypergraph).
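On an ordinary graph this can be tried directly with built-in functions. The following is a minimal sketch on a small flat grid; the grid and the endpoints are arbitrary choices for illustration, not one of the structures generated by our rules:

```wolfram
g = GridGraph[{5, 5}];

(* One shortest path between opposite corners; on a grid there are many of equal length *)
path = FindShortestPath[g, 1, 25];

(* The geodesic distance is the number of edges along any such path *)
GraphDistance[g, 1, 25]   (* 8 *)

(* Draw the grid with the chosen geodesic highlighted *)
HighlightGraph[g, PathGraph[path]]
```

Because many shortest paths tie on a flat grid, which one is returned is an implementation detail; only the length is determined.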

Here are geodesics on the “positive-curvature surface” created by one of our rules:

✕

findShortestPath[edges_, endpoints : {{_, _} ...}] :=

FindShortestPath[

Catenate[Partition[#, 2, 1, 1] & /@ edges], #, #2] & @@@

endpoints;

pathEdges[edges_, path_] :=

Select[Count[Alternatives @@ path]@# >= 2 &]@edges;

plotGeodesic[edges_, endpoints : {{_, _} ...}, o : OptionsPattern[]] :=

With[{vertexPaths = findShortestPath[edges, endpoints]},

ResourceFunction["WolframModelPlot"][edges, o,

GraphHighlight -> Catenate[vertexPaths],

EdgeStyle -> <|

Alternatives @@ Catenate[pathEdges[edges, #] & /@ vertexPaths] ->

Directive[AbsoluteThickness[4], Red]|>]];

plotGeodesic[edges_, endpoints : {__ : Except@List},

o : OptionsPattern[]] := plotGeodesic[edges, {endpoints}, o];

plotGeodesic[

ResourceFunction[

"WolframModel"][{{1, 2, 3}, {4, 2, 5}} -> {{6, 3, 1}, {3, 6,

4}, {1, 2, 6}}, Automatic, 1000,

"FinalState"], {{123, 721}, {24, 552}, {55, 671}},

VertexSize -> 0.12]

|

And here they are for a more complicated structure:

✕

(*https://www.wolframcloud.com/obj/wolframphysics/TechPaper-Programs/\

Section-04/Geodesics-01.wl*)

CloudGet["https://wolfr.am/L1PH6Rne"];(*Geodesics*)

gtest = UndirectedGraph[

Rule @@@

ResourceFunction[

"WolframModel"][{{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z,

w}}, {{1, 2}, {1, 3}}, 10, "FinalState"], Sequence[

VertexStyle -> ResourceFunction["WolframPhysicsProjectStyleData"][

"SpatialGraph", "VertexStyle"],

EdgeStyle -> ResourceFunction["WolframPhysicsProjectStyleData"][

"SpatialGraph", "EdgeLineStyle"]] ];

Geodesics[gtest, #] & /@ {{{79, 207}}, {{143, 258}}}

|

Why are geodesics important? One reason is that in Einstein’s general relativity they’re the paths that light (or objects in “free fall”) follows in space. And in that theory gravity is associated with curvature in space. So when something is deflected going around the Sun, that happens because space around the Sun is curved, so the geodesic the object follows is also curved.

General relativity’s description of curvature in space turns out to all be based on the Ricci scalar curvature R that we encountered above (as well as the slightly more sophisticated Ricci tensor). So if we want to find out if our models are reproducing Einstein’s equations for gravity, we basically have to find out if the Ricci curvatures that arise from our hypergraphs are the same as the theory implies.

There’s quite a bit of mathematical sophistication involved (for example, we have to consider curvature in space+time, not just space), but the bottom line is that, yes, in various limits, and subject to various assumptions, our models do indeed reproduce Einstein’s equations. (At first, we’re just reproducing the vacuum Einstein equations, appropriate when there’s no matter involved; when we discuss matter, we’ll see that we actually get the full Einstein equations.)

It’s a big deal to reproduce Einstein’s equations. Normally in physics, Einstein’s equations are what you start from (or sometimes they arise as a consistency condition for a theory): here they’re what comes out as an emergent feature of the model.

It’s worth saying a little about how the derivation works. It’s actually somewhat analogous to the derivation of the equations of fluid flow from the limit of the underlying dynamics of lots of discrete molecules. But in this case, it’s the structure of space rather than the velocity of a fluid that we’re computing. It involves some of the same kinds of mathematical approximations and assumptions, though. One has to assume, for example, that there’s enough effective randomness generated in the system that statistical averages work. There is also a whole host of subtle mathematical limits to take. Distances have to be large compared to individual hypergraph connections, but small compared to the whole size of the hypergraph, etc.

It’s pretty common for physicists to “hack through” the mathematical niceties. That’s actually happened for nearly a century in the case of deriving fluid equations from molecular dynamics. And we’re definitely guilty of the same thing here. Which in a sense is another way of saying that there’s lots of nice mathematics to do in actually making the derivation rigorous, and understanding exactly when it’ll apply, and so on.

By the way, when it comes to mathematics, even the setup that we have is interesting. Calculus has been built to work in ordinary continuous spaces (manifolds that locally approximate Euclidean space). But what we have here is something different: in the limit of an infinitely large hypergraph, it’s like a continuous space, but ordinary calculus doesn’t work on it (not least because it isn’t necessarily integer-dimensional). So to really talk about it well, we have to invent something that’s kind of a generalization of calculus, that’s for example capable of dealing with curvature in fractional-dimensional space. (Probably the closest current mathematics to this is what’s been coming out of the very active field of geometric group theory.)

It’s worth noting, by the way, that there’s a lot of subtlety in the precise tradeoff between changing the dimension of space, and having curvature in it. And while we think our universe is three-dimensional, it’s quite possible according to our models that there are at least local deviations—and most likely there were actually large deviations in the early universe.

Time

In our models, space is defined by the large-scale structure of the hypergraph that represents our collection of abstract relations. But what then is time?

For the past century or so, it’s been pretty universally assumed in fundamental physics that time is in a sense “just like space”—and that one should for example lump space and time together and talk about the “spacetime continuum”. And certainly the theory of relativity points in this direction. But if there’s been one “wrong turn” in the history of physics in the past century, I think it’s the assumption that space and time are the same kind of thing. And in our models they’re not—even though, as we’ll see, relativity comes out just fine.

So what then is time? In effect it’s much as we experience it: the inexorable process of things happening and leading to other things. But in our models it’s something much more precise: it’s the progressive application of rules, that continually modify the abstract structure that defines the contents of the universe.

The version of time in our models is in a sense very computational. As time progresses we are in effect seeing the results of more and more steps in a computation. And indeed the phenomenon of computational irreducibility implies that there is something definite and irreducible “achieved” by this process. (And, for example, this irreducibility is what I believe is responsible for the “encrypting” of initial conditions that is associated with the law of entropy increase, and the thermodynamic arrow of time.) Needless to say, of course, our modern computational paradigm did not exist a century ago when “spacetime” was introduced, and perhaps if it had, the history of physics might have been very different.

But, OK, so in our models time is just the progressive application of rules. But there is a subtlety in exactly how this works that might at first seem like a detail, but that actually turns out to be huge, and in fact turns out to be the key to both relativity and quantum mechanics.

At the beginning of this piece, I talked about the rule

{{x, y}, {x, z}} → {{x, z}, {x, w}, {y, w}, {z, w}}

✕

RulePlot[ResourceFunction[

"WolframModel"][{{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z,

w}}], VertexLabels -> Automatic, "RulePartsAspectRatio" -> 0.55]

|

and showed the “first few steps” in applying it

✕

ResourceFunction["WolframModelPlot"] /@

ResourceFunction[

"WolframModel"][{{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z,

w}}, {{1, 2}, {2, 3}, {3, 4}, {2, 4}}, 4, "StatesList"]

|

But how exactly did the rule get applied? What is “inside” these steps? The rule defines how to take two connections in the hypergraph (which in this case is actually just a graph) and transform them into four new connections, creating a new element in the process. So each “step” that we showed before actually consists of several individual “updating events” (where here newly added connections are highlighted, and ones that are about to be removed are dashed):

✕

With[{eo =

ResourceFunction[

"WolframModel"][{{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z,

w}}, {{1, 2}, {2, 3}, {3, 4}, {2, 4}}, 4]},

TakeList[eo["EventsStatesPlotsList", ImageSize -> 130],

eo["GenerationEventsCountList",

"IncludeBoundaryEvents" -> "Initial"]]]

|

But now, here is the crucial point: this is not the only sequence of updating events consistent with the rule. The rule just says to find two adjacent connections, and if there are several possible choices, it says nothing about which one. And a crucial idea in our model is in a sense just to do all of them.
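To see the ambiguity concretely, here is a minimal sketch that lists every way a single updating event can be applied to a state. It uses ordinary pattern matching on a list of edges rather than the WolframModel resource function, and the name `possibleUpdates` is invented for this example. The new element w is simply taken to be one more than the largest existing label, which is an assumption of the sketch:

```wolfram
(* All states reachable by one application of
   {{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z, w}} *)
possibleUpdates[edges_List] :=
  With[{w = Max[edges] + 1},
   Union[
    (* the {x, y} connection appears before the {x, z} connection in the list *)
    ReplaceList[edges,
     {a___, {x_, y_}, b___, {x_, z_}, c___} :>
      {a, b, c, {x, z}, {x, w}, {y, w}, {z, w}}],
    (* the same two connections matched in the opposite order *)
    ReplaceList[edges,
     {a___, {x_, z_}, b___, {x_, y_}, c___} :>
      {a, b, c, {x, z}, {x, w}, {y, w}, {z, w}}]]];

possibleUpdates[{{1, 2}, {2, 3}, {3, 4}, {2, 4}}]
```

For this initial state the only connections that share a first element are {2, 3} and {2, 4}, and they can play the roles of {x, y} and {x, z} in either order, so the function returns two different states.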

We can represent this with a graph that shows all possible paths:

✕

CloudGet["https://wolfr.am/LmHho8Tr"]; (*newgraph*)newgraph[

Graph[ResourceFunction["MultiwaySystem"][

"WolframModel" -> {{{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z,

w}}}, {{{1, 2}, {2, 3}, {3, 4}, {2, 4}}}, 3, "StatesGraph",

VertexSize -> 3, PerformanceGoal -> "Quality"],

AspectRatio -> 1/2], {3, 0.7}]

|

For the very first update, there are two possibilities. Then for each of the results of these, there are four additional possibilities. But at the next update, something important happens: two of the branches merge. In other words, even though we have done a different sequence of updates, the outcome is the same.

Things rapidly get complicated. Here is the graph after one more update, now no longer trying to show a progression down the page:

✕

Graph[ResourceFunction["MultiwaySystem"][

"WolframModel" -> {{{x, y}, {x, z}} -> {{x, z}, {x, w}, {y, w}, {z,

w}}}, {{{1, 2}, {2, 3}, {3, 4}, {2, 4}}}, 4, "StatesGraph",

VertexSize -> 3, PerformanceGoal -> "Quality"]]

|

So how does this relate to time? What it says is that in the basic statement of the model there is not just one path of time; there are many paths, and many “histories”. But the model—and the rule that is used—determines all of them. And we have seen a hint of something else: that even if we might think we are following an “independent” path of history, it may actually merge with another path.

It will take some more discussion to explain how this all works. But for now let me say that what will emerge is that time is about causal relationships between things, and that in fact, even when the paths of history that are followed are different, these causal relationships can end up being the same—and that in effect, to an observer embedded in the system, there is still just a single thread of time.

The Graph of Causal Relationships

In the end it’s wonderfully elegant. But to get to the point where we can understand the elegant bigger picture we need to go through some detailed things. (It isn’t terribly surprising that a fundamental theory of physics—inevitably built on very abstract ideas—is somewhat complicated to explain, but so it goes.)

To keep things tolerably simple, I’m not going to talk directly about rules that operate on hypergraphs. Instead I’m going to talk about rules that operate on strings of characters. (To clarify: these are not the strings of string theory—although in a bizarre twist of “pun-becomes-science” I suspect that the continuum limit of the operations I discuss on character strings is actually related to string theory in the modern physics sense.)

OK, so let’s say we have the rule:

{A → BBB, BB → A}

This rule says that anywhere we see an A, we can replace it with BBB, and anywhere we see BB we can replace it with A. So now we can generate what we call the multiway system for this rule, and draw a “multiway graph” that shows everything that can happen:

✕

ResourceFunction["MultiwaySystem"][{"A" -> "BBB",

"BB" -> "A"}, {"A"}, 8, "StatesGraph"]

|

At the first step, the only possibility is to use A→BBB to replace the A with BBB. But then there are two possibilities: replace either the first BB or the second BB—and these choices give different results. On the next step, though, all that can be done is to replace the A—in both cases giving BBBB.

So in other words, even though we in a sense had two paths of history that diverged in the multiway system, it took only one step for them to converge again. And if you trace through the picture above you’ll find out that’s what always happens with this rule: every pair of branches that is produced always merges, in this case after just one more step.
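The first few layers of this multiway system can be reproduced with the built-in StringReplaceList, which returns every string obtainable by a single replacement. This is a minimal sketch, with `rules` and `multiwayStep` as names chosen for illustration:

```wolfram
rules = {"A" -> "BBB", "BB" -> "A"};

(* One multiway step: apply every rule at every possible position in every state *)
multiwayStep[states_List] :=
  Union[Flatten[StringReplaceList[#, rules] & /@ states]];

NestList[multiwayStep, {"A"}, 3]
(* {{"A"}, {"BBB"}, {"AB", "BA"}, {"BBBB"}} *)
```

The two states AB and BA in the third layer are the diverging branches, and the single state BBBB in the next layer is where they merge.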

This kind of balance between branching and merging is a phenomenon I call “causal invariance”. And while it might seem like a detail here, it actually turns out that it’s at the core of why relativity works, why there’s a meaningful objective reality in quantum mechanics, and a host of other core features of fundamental physics.

But let’s explain why I call the property causal invariance. The picture above just shows what “state” (i.e. what string) leads to what other one. But at the risk of making the picture more complicated (and note that this is incredibly simple compared to the full hypergraph case), we can annotate the multiway graph by including the updating events that lead to each transition between states:

✕

LayeredGraphPlot[

ResourceFunction["MultiwaySystem"][{"A" -> "BBB",

"BB" -> "A"}, {"A"}, 8, "EvolutionEventsGraph"], AspectRatio -> 1]

|

But now we can ask the question: what are the causal relationships between these events? In other words, what event needs to happen before some other event can happen? Or, said another way, what events must have happened in order to create the input that’s needed for some other event?

Let us go even further, and annotate the graph above by showing all the causal dependencies between events:

✕

LayeredGraphPlot[

ResourceFunction["MultiwaySystem"][{"A" -> "BBB",

"BB" -> "A"}, {"A"}, 7, "EvolutionCausalGraph"], AspectRatio -> 1]

|

The orange lines in effect show which event has to happen before which other event—or what all the causal relationships in the multiway system are. And, yes, it’s complicated. But note that this picture shows the whole multiway system—with all possible paths of history—as well as the whole network of causal relationships within and between these paths.

But here’s the crucial thing about causal invariance: it implies that actually the graph of causal relationships is the same regardless of which path of history is followed. And that’s why I originally called this property “causal invariance”—because it says that with a rule like this, the causal properties are invariant with respect to different choices of the sequence in which updating is done.

And if one traced through the picture above (and went quite a few more steps), one would find that for every path of history, the causal graph representing causal relationships between events would always be:

✕

ResourceFunction["SubstitutionSystemCausalGraph"][{"A" -> "BBB",

"BB" -> "A"}, "A", 10] // LayeredGraphPlot

|

or, drawn differently,

✕

ResourceFunction["SubstitutionSystemCausalGraph"][{"A" -> "BBB",

"BB" -> "A"}, "A", 12]

|

The Importance of Causal Invariance

To understand more about causal invariance, it’s useful to look at an even simpler example: the case of the rule BA→AB. This rule says that any time there’s a B followed by an A in a string, swap these characters around. In other words, this is a rule that tries to sort a string into alphabetical order, two characters at a time.

Let’s say we start with BBBAAA. Then here’s the multiway graph that shows all the things that can happen according to the rule:

✕

Graph[ResourceFunction["MultiwaySystem"][{"BA" -> "AB"}, "BBBAAA", 12,

"EvolutionEventsGraph"], AspectRatio -> 1.5] // LayeredGraphPlot

|

There are lots of different paths that can be followed, depending on which BA in the string the rule is applied to at each step. But the important thing we see is that at the end all the paths merge, and we get a single final result: the sorted string AAABBB. And the fact that we get this single final result is a consequence of the causal invariance of the rule. In a case like this where there’s a final result (as opposed to just evolving forever), causal invariance basically says: it doesn’t matter what order you do all the updates in; the result you’ll get will always be the same.
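A quick way to try this out is to apply the rule at a randomly chosen position each time and see where different runs end up. The sketch below uses only built-in string functions, and `randomSortHistory` is a name made up for this example:

```wolfram
(* Apply BA -> AB at a randomly chosen position until no BA remains,
   returning every intermediate string *)
randomSortHistory[s_String] :=
  NestWhileList[
   RandomChoice[StringReplaceList[#, "BA" -> "AB"]] &,
   s,
   StringContainsQ[#, "BA"] &];

histories = Table[randomSortHistory["BBBAAA"], 5];

Last /@ histories                 (* every run ends at "AAABBB" *)
(Length[#] - 1) & /@ histories    (* every run uses 9 updating events *)
```

Each swap removes exactly one out-of-order pair (a B somewhere before an A), so the number of updating events is the same whichever order is chosen, even though the intermediate strings differ from run to run.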

I’ve introduced causal invariance in the context of trying to find a model of fundamental physics—and I’ve said that it’s going to be critical to both relativity and quantum mechanics. But actually what amounts to causal invariance has been seen before in various different guises in mathematics, mathematical logic and computer science. (Its most common name is “confluence”, though there are some technical differences between this and what I call causal invariance.)

Think about expanding out an algebraic expression, like $(x + (1 + x)^2)(x + 2)^2$. You could expand one of the powers first, then multiply things out. Or you could multiply the terms first. It doesn’t matter what order you do the steps in; you’ll always get the same canonical form (which in this case Mathematica tells me is $4 + 16x + 17x^2 + 7x^3 + x^4$). And this independence of orders is essentially causal invariance.
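As a small check of this order independence, the sketch below expands the same expression in two different orders and compares the results; the variable names are chosen for this example:

```wolfram
expr = (x + (1 + x)^2) (x + 2)^2;

(* Order 1: expand everything in one go *)
allAtOnce = Expand[expr];

(* Order 2: expand each factor separately, then multiply out *)
factorsFirst = Expand[Expand[x + (1 + x)^2] Expand[(x + 2)^2]];

allAtOnce === factorsFirst   (* True *)
allAtOnce                    (* 4 + 16 x + 17 x^2 + 7 x^3 + x^4 *)
```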

Here’s one more example. Imagine you’ve got some recursive definition, say f[n_]:=f[n-1]+f[n-2] (with f[0]=f[1]=1). Now evaluate f[10]. First you get f[9]+f[8]. But what do you do next? Do you evaluate f[9], or f[8]? And then what? In the end, it doesn’t matter; you’ll always get 89. And this is another example of causal invariance.

When one thinks about parallel or asynchronous algorithms, it matters whether one has causal invariance. Because it means one can do things in any order (say, depth-first, breadth-first, or whatever) and one will always get the same answer. And that’s what’s happening in our little sorting algorithm above.

OK, but now let’s come back to causal relationships. Here’s the multiway system for the sorting process annotated with all causal relationships for all paths:

✕

Magnify[LayeredGraphPlot[

ResourceFunction["MultiwaySystem"][{"BA" -> "AB"}, "BBBAAA", 12,

"EvolutionCausalGraph"], AspectRatio -> 1.5], .6]

|

And, yes, it’s a mess. But because there’s causal invariance, we know something very important: this is basically just a lot of copies of the same causal graph—a simple grid:

✕

centeredRange[n_] := # - Mean@# &@Range@n;

centeredLayer[n_] := {#, n} & /@ centeredRange@n;

diamondLayerSizes[layers_?OddQ] :=

Join[#, Reverse@Most@#] &@Range[(layers + 1)/2];

diamondCoordinates[layers_?OddQ] :=

Catenate@MapIndexed[

Thread@{centeredRange@#, (layers - First@#2)/2} &,

diamondLayerSizes[layers]];

diamondGraphLayersCount[graph_] := 2 Sqrt[VertexCount@graph] - 1;

With[{graph =

ResourceFunction["SubstitutionSystemCausalGraph"][{"BA" -> "AB"},

"BBBBAAAA", 12]},

Graph[graph,

VertexCoordinates ->

diamondCoordinates@diamondGraphLayersCount@graph, VertexSize -> .2]]

|

(By the way—as the picture suggests—the cross-connections between these copies aren’t trivial, and later on we’ll see they’re associated with deep relations between relativity and quantum mechanics, that probably manifest themselves in the physics of black holes. But we’ll get to that later…)

OK, so every different way of applying the sorting rule is supposed to give the same causal graph. So here’s one example of how we might apply the rule starting with a particular initial string:

✕

evo = (SeedRandom[2424];

ResourceFunction[

"SubstitutionSystemCausalEvolution"][{"BA" -> "AB"},

"BBAAAABAABBABBBBBAAA", 15, {"Random", 4}]);

ResourceFunction["SubstitutionSystemCausalPlot"][evo,

EventLabels -> False, CellLabels -> True, CausalGraph -> False]

|

But now let’s show the graph of causal connections. And we see it’s just a grid:

✕

evo = (SeedRandom[2424];

ResourceFunction[

"SubstitutionSystemCausalEvolution"][{"BA" -> "AB"},

"BBAAAABAABBABBBBBAAA", 15, {"Random", 4}]);

ResourceFunction["SubstitutionSystemCausalPlot"][evo,

EventLabels -> False, CellLabels -> False, CausalGraph -> True]

|

Here are three other possible sequences of updates:

✕

SeedRandom[242444]; GraphicsRow[

Table[ResourceFunction["SubstitutionSystemCausalPlot"][

ResourceFunction[

"SubstitutionSystemCausalEvolution"][{"BA" -> "AB"},

"BBAAAABAABBABBBBBAAA", 15, {"Random", 4}], EventLabels -> False,

CellLabels -> False, CausalGraph -> True], 3], ImageSize -> Full]

|

But now we see causal invariance in action: even though different updates occur at different times, the graph of causal relationships between updating events is always the same. And having seen this—in the context of a very simple example—we’re ready to talk about special relativity.

Deriving Special Relativity

It’s a typical first instinct in thinking about doing science: you imagine doing an experiment on a system, but you—as the “observer”—are outside the system. Of course if you’re thinking about modeling the whole universe and everything in it, this isn’t ultimately a reasonable way to think about things. Because the “observer” is inevitably part of the universe, and so has to be modeled just like everything else.

In our models what this means is that the “mind of the observer”, just like everything else in the universe, has to get updated through a series of updating events. There’s no absolute way for the observer to “know what’s going on in the universe”; all they ever experience is a series of updating events, that may happen to be affected by updating events occurring elsewhere in the universe. Or, said differently, all the observer can ever observe is the network of causal relationships between events—or the causal graph that we’ve been talking about.

So as a toy model let’s look at our BA→AB rule for strings. We might imagine that the string is laid out in space. But to our observer the only thing they know is the causal graph that represents causal relationships between events. And for the BA→AB system here’s one way we can draw that:

✕

CloudGet["https://wolfr.am/KVkTxvC5"]; (*regularCausalGraphPlot*)

CloudGet["https://wolfr.am/KVl97Tf4"];(*lorentz*)

\

regularCausalGraphPlot[10, {0, 0}, {0.0, 0.0}, lorentz[0]]

|

But now let’s think about how observers might “experience” this causal graph. Underneath, an observer is getting updated by some sequence of updating events. But even though that’s “really what’s going on”, to make sense of it, we can imagine our observers setting up internal “mental” models for what they see. And a pretty natural thing for observers like us to do is just to say “one set of things happens all across the universe, then another, and so on”. And we can translate this into saying that we imagine a series of “moments” in time, where things happen “simultaneously” across the universe—at least with some convention for defining what we mean by simultaneously. (And, yes, this part of what we’re doing is basically following what Einstein did when he originally proposed special relativity.)

Here’s a possible way of doing it:

✕

CloudGet["https://wolfr.am/KVkTxvC5"]; (*regularCausalGraphPlot*)

CloudGet["https://wolfr.am/KVl97Tf4"];(*lorentz*)

\

regularCausalGraphPlot[10, {1, 0}, {0.0, 0.0}, lorentz[0]]

|

One can describe this as a “foliation” of the causal graph. We’re dividing the causal graph into leaves or slices. And each slice our observers can consider to be a “successive moment in time”.

It’s important to note that there are some constraints on the foliation we can pick. The causal graph defines what event has to happen before what. And if our observers are going to have a chance of making sense of the world, it had better be the case that their notion of the progress of time aligns with what the causal graph says. So for example this foliation wouldn’t work—because basically it says that the time we assign to events is going to disagree with the order in which the causal graph says they have to happen:

✕

CloudGet["https://wolfr.am/KVkTxvC5"]; (*regularCausalGraphPlot*)

CloudGet["https://wolfr.am/KVl97Tf4"];(*lorentz*)

\

regularCausalGraphPlot[6, {.2, 0}, {5, 0.0}, lorentz[0]]

|
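The constraint can be stated as a simple test: a foliation is usable only if every causal edge leads from an earlier slice to a later one. Here is a minimal sketch of that test on a small made-up causal graph; the edge list, the slice assignments and the name `consistentFoliationQ` are all assumptions for illustration, not taken from the cloud-hosted code above:

```wolfram
(* A small made-up causal graph: event 1 causes 2 and 3, which both cause 4 *)
causalEdges = {1 -> 2, 1 -> 3, 2 -> 4, 3 -> 4};

(* A foliation assigns a slice number to each event. It is consistent if
   every cause lies in a strictly earlier slice than its effect. *)
consistentFoliationQ[edges_, slice_] :=
  AllTrue[edges, slice[First[#]] < slice[Last[#]] &]

consistentFoliationQ[causalEdges, <|1 -> 1, 2 -> 2, 3 -> 2, 4 -> 3|>]
(* True *)

consistentFoliationQ[causalEdges, <|1 -> 1, 2 -> 3, 3 -> 2, 4 -> 2|>]
(* False: event 4 is placed no later than its causes 2 and 3 *)
```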

So, given the horizontal foliation we had above, what actual order of updating events does it imply? It basically just says: as many events as possible happen at the same time (i.e. in the same slice of the foliation), as in this picture:

![ResourceFunction["SubstitutionSystemCausalPlot"] ResourceFunction["SubstitutionSystemCausalPlot"]](https://content.wolfram.com/sites/43/2020/04/0414img89.png)

✕

(*https://www.wolframcloud.com/obj/wolframphysics/TechPaper-Programs/\

Section-08/BoostedEvolution.wl*)

CloudGet["https://wolfr.am/LbaDFVSn"]; (*boostedEvolution*) \

ResourceFunction["SubstitutionSystemCausalPlot"][

boostedEvolution[

ResourceFunction[

"SubstitutionSystemCausalEvolution"][{"BA" -> "AB"},

StringRepeat["BA", 10], 10], 0], EventLabels -> False,

CellLabels -> True, CausalGraph -> False]

|
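For a shorter string the same updating order can be sketched with built-in functions alone, on the assumption that "as many events as possible" means rewriting every non-overlapping "BA" in a single step (which is what `StringReplace` does in one pass):

```wolfram
(* Each step rewrites every non-overlapping "BA" at once *)
NestList[StringReplace[#, "BA" -> "AB"] &, StringRepeat["BA", 3], 3]
(* {"BABABA", "ABABAB", "AABABB", "AAABBB"} *)
```

Each element of the result is one slice of the foliation, and after three slices this six-character string is fully sorted.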

OK, now let’s connect this to physics. The foliation we had above is relevant to observers who are somehow “stationary with respect to the universe” (the “cosmological rest frame”). One can imagine that as time progresses, the events a particular observer experiences are ones in a column going vertically down the page:

✕

CloudGet["https://wolfr.am/KVkTxvC5"]; (*regularCausalGraphPlot*)

CloudGet["https://wolfr.am/KVl97Tf4"];(*lorentz*)

\

regularCausalGraphPlot[5, {1, 0.01}, {0.0, 0.0}, {1.5, 0}, {Red,

Directive[Dotted, Thick, Red]}, lorentz[0]]

|

But now let’s think about an observer who is uniformly moving in space. They’ll experience a different sequence of events, say:

✕

CloudGet["https://wolfr.am/KVkTxvC5"]; (*regularCausalGraphPlot*)

CloudGet["https://wolfr.am/KVl97Tf4"];(*lorentz*)

\

regularCausalGraphPlot[5, {1, 0.01}, {0.0, 0.3}, {0.6, 0}, {Red,

Directive[Dotted, Thick, Red]}, lorentz[0]]

|

And that means that the foliation they’ll naturally construct will be different. From the “outside” we can draw it on the causal graph like this:

✕

CloudGet["https://wolfr.am/KVkTxvC5"]; (*regularCausalGraphPlot*)

CloudGet["https://wolfr.am/KVl97Tf4"];(*lorentz*)

\

regularCausalGraphPlot[10, {1, 0.01}, {0.3, 0.3}, {0, 0}, {Red,

Directive[Dotted, Thick, Red]}, lorentz[0.]]

|

But to the observer each slice just represents a successive moment of time. And they don’t have any way to know how the causal graph was drawn. So they’ll construct their own version, where the slices are horizontal:

✕

CloudGet["https://wolfr.am/KVkTxvC5"]; (*regularCausalGraphPlot*)

CloudGet["https://wolfr.am/KVl97Tf4"];(*lorentz*)

\

regularCausalGraphPlot[10, {1, 0.01}, {0.3, 0.3}, {0, 0}, {Red,

Directive[Dotted, Thick, Red]}, lorentz[0.3]]

|

But now there’s a purely geometrical fact: to make this rearrangement, while preserving the basic structure (and here, angles) of the causal graph, each moment of time has to sample fewer events in the causal graph, by a factor of $\sqrt{1-\beta^2}$, where β is the angle that represents the velocity of the observer.

If you know about special relativity, you’ll recognize a lot of this. What we’ve been calling foliations correspond directly to relativity’s “reference frames”. And our foliations that represent motion are the standard inertial reference frames of special relativity.

But here’s the special thing that’s going on here: we can interpret all this discussion of foliations and reference frames in terms of the actual rules and evolution of our underlying system. So here now is the evolution of our string-sorting system in the “boosted reference frame” corresponding to an observer going at a certain speed:

✕

(*https://www.wolframcloud.com/obj/wolframphysics/TechPaper-Programs/\

Section-08/BoostedEvolution.wl*)

CloudGet["https://wolfr.am/LbaDFVSn"]; (*boostedEvolution*) \

ResourceFunction["SubstitutionSystemCausalPlot"][

boostedEvolution[

ResourceFunction[

"SubstitutionSystemCausalEvolution"][{"BA" -> "AB"},

StringRepeat["BA", 10], 10], 0.3], EventLabels -> False,

CellLabels -> True, CausalGraph -> False]

|

And here’s the crucial point: because of causal invariance it doesn’t matter that we’re in a different reference frame—the causal graph for the system (and the way it eventually sorts the string) is exactly the same.

In special relativity, the key idea is that the “laws of physics” work the same in all inertial reference frames. But why should that be true? Well, in our systems, there’s an answer: it’s a consequence of causal invariance in the underlying rules. In other words, from the property of causal invariance, we’re able to derive relativity.

Normally in physics one puts in relativity by the way one sets up the mathematical structure of spacetime. But in our models we don’t start from anything like this, and in fact space and time are not even at all the same kind of thing. But what we can now see is that—because of causal invariance—relativity emerges in our models, with all the relationships between space and time that that implies.

So, for example, if we look at the picture of our string-sorting system above, we can see relativistic time dilation. In effect, because of the foliation we picked, time operates slower. Or, said another way, in the effort to sample space faster, our observer experiences slower updating of the system in time.

The speed of light c in our toy system is defined by the maximum rate at which information can propagate, which is determined by the rule, and in the case of this rule is one character per step. And in terms of this, we can then say that our foliation corresponds to a speed 0.3 c. But now we can look at the amount of time dilation, and it’s exactly the amount $1/\sqrt{1-\beta^2}$ that relativity says it should be.
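To put a number on this, here is the standard time dilation factor of special relativity evaluated at the speed 0.3 c used in the picture above. The symbol γ is the conventional name for this factor and is not used elsewhere in this piece:

```latex
\gamma \;=\; \frac{1}{\sqrt{1-\beta^{2}}}
       \;=\; \frac{1}{\sqrt{1-0.3^{2}}}
       \;=\; \frac{1}{\sqrt{0.91}}
       \;\approx\; 1.048
```

So at this speed the effect is small: the boosted observer sees updating slowed by a little under 5%.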

By the way, if we imagine trying to make our observer go “faster than light”, we can see that can’t work. Because there’s no way to tip the foliation at more than 45° in our picture, and still maintain the causal relationships implied by the causal graph.
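This limit can be checked directly on an idealized version of the grid-like causal graph. The sketch below assumes events at lattice points {x, t}, each causing {x - 1, t + 1} and {x + 1, t + 1}, and takes the tilted slices to be the level sets of t - β x. The names `tiltedTime` and `allowedTiltQ` are hypothetical and are not part of the cloud-hosted code used for the pictures:

```wolfram
(* Idealized causal graph (an assumption): event {x, t} causes
   {x - 1, t + 1} and {x + 1, t + 1} *)
edges = Flatten[Table[
    {{x, t} -> {x - 1, t + 1}, {x, t} -> {x + 1, t + 1}},
    {t, 0, 5}, {x, -5, 5}]];

(* Slices of the tilted foliation are the level sets of t - beta x *)
tiltedTime[beta_][{x_, t_}] := t - beta x

(* A tilt is allowed only if every causal edge leads to a strictly later slice *)
allowedTiltQ[beta_] :=
  AllTrue[edges, tiltedTime[beta][Last[#]] > tiltedTime[beta][First[#]] &]

allowedTiltQ /@ {0, 0.3, 0.9, 1.5}
(* {True, True, True, False} *)
```

Along an edge the tilted time changes by 1 - β or 1 + β, so every tilt below 45° keeps all edges pointing forward, while β = 1.5 makes some of them run backwards.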

OK, so in our toy model we can derive special relativity. But here’s the thing: this derivation isn’t specific to the toy model; it applies to any rule that has causal invariance. So even though we may be dealing with hypergraphs, not strings, and we may have a rule that shows all kinds of complicated behavior, if it ultimately has causal invariance, then (with various technical caveats, mostly about possible wildness in the causal graph) it will exhibit relativistic invariance, and a physics based on it will follow special relativity.

What Is Energy? What Is Mass?

In our model, everything in the universe—space, matter, whatever—is supposed to be represented by features of our evolving hypergraph. So within that hypergraph, is there a way to identify things that are familiar from current physics, like mass, or energy?

I have to say that although it’s a widespread concept in current physics, I’d never thought of energy as something fundamental. I’d just thought of it as an attribute that things (atoms, photons, whatever) can have. I never really thought of it as something that one could identify abstractly in the very structure of the universe.

So it came as a big surprise when we recently realized that actually in our model, there is something we can point to, and say “that’s energy!”, independent of what it’s the energy of. The technical statement is: energy corresponds to the flux of causal edges through spacelike hypersurfaces. And, by the way, momentum corresponds to the flux of causal edges through timelike hypersurfaces.

OK, so what does this mean? First, what’s a spacelike hypersurface? It’s actually a standard concept in general relativity, for which there’s a direct analogy in our models. Basically it’s what forms a slice in our foliation. Why is it called what it’s called? We can identify two kinds of directions: spacelike and timelike.

A spacelike direction is one that involves just moving in space, and it’s a direction where one can always reverse and go back. A timelike direction is one that involves also progressing through time, where one can’t go back. We can mark spacelike (solid red) and timelike (dashed red) hypersurfaces in the causal graph for our toy model:

✕

CloudGet["https://wolfr.am/KVkTxvC5"]; (*regularCausalGraphPlot*)

CloudGet["https://wolfr.am/KVl97Tf4"];(*lorentz*)

\

regularCausalGraphPlot[10, {1, 0.5}, {0., 0.}, {-0.5, 0}, {Red,

Directive[Dashed, Red]}, lorentz[0.]]

|

(They might be called “surfaces”, except that “surfaces” are usually thought of as 2-dimensional, and in our 3-space + 1-time dimensional universe, these foliation slices are 3-dimensional: hence the term “hypersurfaces”.)

OK, now let’s look at the picture. The “causal edges” are the causal connections between events, shown in the picture as lines joining the events. So when we talk about a “flux of causal edges through spacelike hypersurfaces”, what we’re talking about is the net number of causal edges that go down through the horizontal slices in the pictures.
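As a rough sketch of what such a count looks like, here is the same idealized lattice causal graph (an assumption, with event {x, t} causing {x - 1, t + 1} and {x + 1, t + 1}), together with a hypothetical helper `edgesThrough` that counts the causal edges crossing the horizontal slice at time T:

```wolfram
(* Idealized causal graph (an assumption): event {x, t} causes
   {x - 1, t + 1} and {x + 1, t + 1} *)
edges = Flatten[Table[
    {{x, t} -> {x - 1, t + 1}, {x, t} -> {x + 1, t + 1}},
    {t, 0, 5}, {x, -5, 5}]];

(* Count the edges that start at or before time T and end after it *)
edgesThrough[T_] := Length[Select[edges, #[[1, 2]] <= T < #[[2, 2]] &]]

edgesThrough /@ {0.5, 2.5, 4.5}
(* {22, 22, 22} *)
```

With 11 events per row and two outgoing edges per event, 22 edges cross every slice, so in this regular case the count is the same at every moment.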

In the toy model that’s trivial to see. But here’s a causal graph from a simple hypergraph model, where it’s already considerably more complicated:

✕

Graph[ResourceFunction[

"WolframModel"][ {{x, y}, {z, y}} -> {{x, z}, {y, z}, {w,

z}}, {{0, 0}, {0, 0}}, 15, "LayeredCausalGraph"],

AspectRatio -> 1/2]

|