Last weekend I decided to have a short break from all the exciting technological things we’re doing… and to give a talk at the Singularity Summit in New York City about the implications of A New Kind of Science for the future, technological and otherwise. Here’s the transcript:

Well, what I wanted to do here was to have some fun—and talk about the future.

That’s something that’s kind of recreational for me. Because what I normally do is work in the trenches just trying to actually build the future… kind of one brick at a time—or at least one big project at a time.

I’ve been doing this now for a bit more than 30 years, and I guess I’ve built a fairly tall tower. From which it’s possible to do and see some pretty interesting things.

And actually things are moving real fast right now. Like here’s an example from yesterday. Here’s an iPhone 4S. If you press the button to call up Siri, you can talk to it. And you can ask it all kinds of questions. And quite often it’ll compute answers—sort of Star Trek computer style—using our Wolfram|Alpha knowledge engine.

There’s a lot to say about Wolfram|Alpha, about how it relates to artificial intelligence, about the whole idea of computational knowledge, and what it means for the future.

But let me start off sort of at a more conceptual level. Let’s talk about world views.

You know, a lot of the way we tend to think about the world, and particularly about science and technology, is very Newtonian, very Galilean. It’s a great tradition, that’s done amazing things over the past 300 years. All sort of rooted in physics—with math as kind of an aspiration for how to describe things.

And in a sense it all got started with one surprising discovery made in 1610, when Galileo turned his telescope to the sky and saw for the first time the moons of Jupiter. And began to realize that there really could be this universal physics that applies to everything… and from which we could ultimately build this whole edifice that is modern exact science and technology.

Well, I myself happened to start out early in life as a physicist. And so I was very steeped in this whole physics-math world view. But as I studied different kinds of things—particularly ones where there was obvious complexity in the behavior of a system—I kept on finding cases where I couldn’t make much progress. And I got to wondering whether there was sort of something fundamental that had to change.

Well, one of the big issues was this: when we look at some system in nature, how do we think about its mechanism?

Well, the big innovation of Galileo and Newton and friends was to have the idea of using mathematics to describe this mechanism. So that we get all these equations and math and calculus for the systems we study in science or build in technology.

But here’s the question: is that the only possible mechanism nature can be using?

Well, what I realized is that it’s certainly possible that there are other mechanisms. Rules that are precise, but aren’t captured by for example our standard mathematics. And the nice thing is that in our times we have a way to think about those more general rules: they’re like programs.

Well, when we think of programs, we usually think of these big things that we build for very specific purposes. But what about little tiny programs? Maybe just one line of code long, or something.

Well, you might have thought—as I did, actually—that programs like that would always be trivial; they’d never do anything interesting.

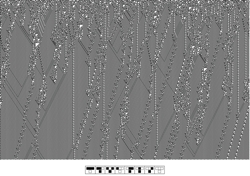

But one day, nearly 30 years ago now, I decided to actually test that idea. And I took my analog of a telescope—a computer—and pointed it not at the sky, but at the abstract computational universe of possible programs. And this is what I saw:

Each one of these pictures is the result of running a different simple program. And you can see that all kinds of different things happen. Mostly it’s quite trivial. But if you look carefully, you’ll see something quite remarkable.

This is the 30th of these programs—I call it rule 30. Here’s what it does.

Start it from just one black cell at the top. Use that little rule at the bottom. That looks like a trivial rule. But here you get all this stuff. Complex. In some ways random. No sign that it came from that simple little rule.
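For readers who want to reproduce the picture, here is a minimal Python sketch of rule 30 started from a single black cell. The function names `step` and `evolve`, the wrap-around edges, and the text rendering are assumptions made for this illustration; they are not taken from the talk.

```python
def step(cells, rule=30):
    """Apply an elementary cellular automaton rule once (edges wrap around)."""
    n = len(cells)
    return [
        # The three-cell neighborhood, read as a number 0..7, selects one bit of the rule number.
        (rule >> (cells[i - 1] << 2 | cells[i] << 1 | cells[(i + 1) % n])) & 1
        for i in range(n)
    ]


def evolve(rule=30, steps=15):
    """Return the rows produced from a single black cell, including the initial row."""
    width = 2 * steps + 1  # the pattern only reaches the edges on the last step
    cells = [0] * width
    cells[steps] = 1
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        rows.append(cells)
    return rows


if __name__ == "__main__":
    for row in evolve(30, 15):
        print("".join("#" if c else " " for c in row))
```

The whole rule is the single number 30: its eight binary digits give the new color of a cell for each of the eight possible neighborhoods.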

Well, when I first saw this, I didn’t believe it. I thought somehow there must be some regularity, some way to decode what we’re seeing. I mean, our usual intuition is that to make something complex, we have to go to lots of effort. It can’t just take a tiny little rule like that.

But I suppose this was my little Galileo moment. The beginning for me of a new world view. With new and different intuition, informed by what I’d seen out there in the computational universe.

Well, over the years since I discovered rule 30, I’ve gradually been understanding more and more about the world view it implies. I feel very slow. There are just so many layers to understand.

But it’s been tremendously exciting, and the more I understand, the more important I think it is for understanding the world, and for building the future. And, of course, there are some pretty interesting spinoffs, like Wolfram|Alpha for instance.

Well, so OK. We have this new kind of science that’s based on exploring the computational universe of possible programs.

What does it mean?

Here’s one immediate thing. There’s a really basic issue in science that I guess has been around forever.

How is it that nature manages to make all the complicated stuff it does?

You know, it’s sort of embarrassing. If you show someone two objects, one’s an artifact, one’s a natural system; it’s a good heuristic that the one that looks simpler is the artifact.

With all that we’ve achieved in our civilization and so on, nature still has some secret that lets it sort of effortlessly create stuff that’s more complex than we can build.

Well, I think we now know how this works. Rule 30 is a great example. It’s just that when we build stuff, we operate according to fairly narrow kinds of rules that our current science and technology let us understand.

But nature is under no such constraint. It just uses all kinds of rules from around the computational universe. And that means that like in rule 30, it can sort of effortlessly produce all the complexity it wants.

Well, after I started building Mathematica as my kind of computational power tool, I spent about a decade using it to try to understand the implications of this, for all kinds of science and so on. And indeed there are lots of longstanding problems in physics and biology and elsewhere that one starts to be able to crack. Which is very exciting.

But OK, it’s one thing to understand how nature works. How about building stuff for ourselves? How about technology?

Well, there’s something very interesting here.

You see, mostly in technology what we do is to try to construct stuff, step by step, always making sure we can foresee what’s going to happen. That’s the traditional approach in engineering. But once we’ve seen what’s out there in the computational universe, we realize there’s another possibility: we can just mine things from the computational universe.

You know, in a sense the whole idea of technology is to take what exists in the world, and harness it for human purposes. And that’s what happens when we take materials that we find out there—magnets, liquid crystals, whatever—and then find technological uses for them.

Well, it’s the same in the computational universe.

Out there there are things like rule 30. That behave in remarkable ways. And that we can effectively harvest to make our technology. Like rule 30 is a great randomness generator. Another rule might be great for some kind of network routing. Or for image analysis. Or whatever.

You know, as we’ve developed Mathematica, and even more so Wolfram|Alpha, we’ve increasingly been using this idea. Not creating technology by explicit engineering. But instead by mining it from the computational universe. In effect searching zillions of possible simple programs to find the ones that are useful to us.
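To make the idea of searching the computational universe concrete, here is a toy Python sketch that scans elementary cellular automaton rules and keeps those whose center column passes a crude randomness check. The criterion in `looks_random` (roughly balanced bits, no short repeating cycle), its thresholds, and all function names are assumptions invented for this example. It is far simpler than any real search and is not how Mathematica or Wolfram|Alpha do it.

```python
def step(cells, rule):
    """One update of an elementary cellular automaton with wrap-around edges."""
    n = len(cells)
    return [
        (rule >> (cells[i - 1] << 2 | cells[i] << 1 | cells[(i + 1) % n])) & 1
        for i in range(n)
    ]


def center_column(rule, steps=100):
    """Center-cell values over time, starting from a single black cell."""
    cells = [0] * (2 * steps + 1)
    cells[steps] = 1
    column = [1]
    for _ in range(steps):
        cells = step(cells, rule)
        column.append(cells[steps])
    return column


def looks_random(bits, max_period=16):
    """Hypothetical test: roughly balanced and no short cycle in the second half."""
    balanced = 0.35 <= sum(bits) / len(bits) <= 0.65
    tail = bits[len(bits) // 2:]
    periodic = any(
        all(tail[i] == tail[i + p] for i in range(len(tail) - p))
        for p in range(1, max_period + 1)
    )
    return balanced and not periodic


def mine(rules=range(256)):
    """Keep the rules whose center column passes the crude test."""
    return [rule for rule in rules if looks_random(center_column(rule))]


if __name__ == "__main__":
    print(mine())
```

The point of the sketch is the shape of the process: enumerate simple programs, run each one, and keep whatever passes a test, without needing to understand why the survivors work.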

Now, once we have these programs, there’s no guarantee that we can readily understand how they work. We have some way of testing that they do what we want. But their operation can be as mysterious—and look as complex—as what we often see in nature.

It’s been interesting in the last few years watching the advance of this idea of mining technology from the computational universe. It’s not too easy to trace everything that’s happening. But in more and more places it’s catching on. And my guess is that in time this approach—this new kind of technology, this new source of technology—will come to dwarf everything produced by traditional engineering.

You know, it’s actually not just technology that’s involved. Like here’s an experiment we did a few years ago in the artistic domain.

Scanning through lots of rules in the computational universe to find ones that create music in different styles. In a sense using the fact that there is an underlying rule—so there is a kind of logic to each piece. But yet as a result of the rule-30-type phenomenon, there’s complexity and richness that we respond to.

And, you know, it’s funny. I had thought that a big thing about humans is that they’re the creative ones; computers aren’t. But actually I keep on hearing from composers and so on that they like this site because it gives them ideas—inspirations—for pieces.

Out there in the computational universe there’s in effect a whole seething world of creativity, ready for us to tap.

And, you know, it’s interesting how this changes the economics of things. Mining the computational universe makes creativity cheap. It means we don’t have to mass produce, we can mass customize. We can invent on the fly on a mass scale. And actually there are things coming, for example in Wolfram|Alpha, that will really make use of this.

Well, OK. I wanted to go back to world views a bit. Because there’s some development of my world view that I have to explain to talk more about things I’m expecting in the future.

So OK. How can we understand this remarkable phenomenon that we see in rule 30 and so on? What’s the fundamental cause of it?

Well, from my explorations in the computational universe, I came up with a hypothesis that I call the Principle of Computational Equivalence. Let me explain it a bit.

When we have a system, like rule 30, or like something in nature, we can think of it as doing a computation. In effect we feed some input in at the beginning, then it grinds around, and eventually out comes some output.

Then the question is: how sophisticated is that computation?

Now, we might have thought that as we make the underlying rules for a system more complicated, the computations it ends up doing would somehow become progressively more sophisticated.

But here’s the big claim of the Principle of Computational Equivalence: that isn’t true. Instead, after one passes a pretty low threshold—and one has a system that isn’t obviously simple in its behavior—one immediately ends up with a system that’s doing a computation that’s as sophisticated as anything.

So that means that all these different systems—whether it’s rule 30, or whether it’s a fancy computer, or a brain—they’re all effectively doing computations that have just the same level of sophistication.

Well, OK. So there’s an immediate prediction then from the Principle of Computational Equivalence.

There’s this idea of universal computation… it’s really the idea that launched the whole computer revolution. It’s due to Alan Turing and friends.

You might have thought that any time you want to do a different kind of computation, you’d need a completely different system. But the point of universal computation is that you can have a single universal machine that can be programmed to do any kind of computation. And that’s what makes software possible. And that’s the foundation for essentially all computer technology today.

But, OK. The Principle of Computational Equivalence has many implications. But one of them relates to universal computation. It says that not only is it possible to construct a universal computer, but actually it’s easy. In fact, pretty much any system whose behavior isn’t obviously simple will manage to do universal computation.

Well, one can test that. Just look for example at these simple programs like rule 30, and see if they’re universal. We don’t know yet about rule 30. But we do know for example that a program very much like it—rule 110—is universal.

And a few years ago, we found out that among Turing machines too, the very simplest one that shows not-obviously-simple behavior—though it still has a very simple rule—is also universal.

Now we might have thought that to build something as sophisticated as a universal computer we’d have to build up all kinds of stuff… we’d in effect have to have our whole civilization, and figure out all this stuff.

But actually, what the Principle of Computational Equivalence says—and what we’ve found out is true—is that actually out there in the computational universe, it’s really easy to find universal computation. It’s not a rare and special thing; it’s ubiquitous. And for example, it’d be easy to mine from the computational universe. Say for the particular case of a molecular computer or something.

Well, OK. The Principle of Computational Equivalence has other implications too that are pretty important. One of them is what I call computational irreducibility.

You see, a big idea of traditional exact science is that the systems we see in nature are computationally reducible. We can look at those systems, say an idealized earth orbiting an idealized sun, and with all our mathematical and calculational prowess we can immediately predict what the systems are going to do. We don’t have to trace every orbit, say. We can just plug a number into a formula, and immediately get a result. Using the fact that the system itself is computationally reducible.

Well, it turns out that in the computational universe, one finds that lots of systems aren’t computationally reducible. Instead, they’re irreducible. There’s no way to work out what they’ll do in any reduced way. There’s no choice but just to trace each step and see what happens.
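A small Python sketch can illustrate the contrast. Rule 90 is used here as the reducible example; that choice is an assumption of this illustration, since the talk does not mention it. Started from a single black cell, rule 90 produces Pascal's triangle modulo 2, so any cell can be read off from a formula without running the intermediate steps. The names `simulate` and `rule90_shortcut` are hypothetical.

```python
def step(cells, rule):
    """One update of an elementary cellular automaton with wrap-around edges."""
    n = len(cells)
    return [
        (rule >> (cells[i - 1] << 2 | cells[i] << 1 | cells[(i + 1) % n])) & 1
        for i in range(n)
    ]


def simulate(rule, t, x):
    """Step-by-step route: run all t steps, then read the cell at offset x from the center."""
    cells = [0] * (2 * t + 1)
    cells[t] = 1
    for _ in range(t):
        cells = step(cells, rule)
    return cells[t + x]


def rule90_shortcut(t, x):
    """Reduced route for rule 90 only: the cell is binomial(t, k) mod 2 with k = (t + x) / 2."""
    if abs(x) > t or (t + x) % 2:
        return 0
    k = (t + x) // 2
    # A binomial coefficient C(t, k) is odd exactly when k and t - k share no binary digits.
    return int((k & (t - k)) == 0)


if __name__ == "__main__":
    print(rule90_shortcut(10**9, 0))  # answered at once, no simulation needed
    print(simulate(30, 200, 0))       # rule 30: this sketch can only run every step
```

For rule 30, as far as is known, there is no comparable formula, so `simulate` is the only route this sketch offers.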

It’s actually pretty easy to see why this has to happen, given the Principle of Computational Equivalence. We’ve always had kind of an idealization in science that systems we study are somehow computationally much simpler than the observers that study them. But now the Principle of Computational Equivalence says that that isn’t true. Because it says that a little system like rule 30 can be just as computationally sophisticated as us, with our brains and computers and so on.

And that’s why it’s computationally irreducible, and by the way also why its behavior seems to us so complex.

Well, computational irreducibility has all sorts of implications for the limits of science and knowledge. At a practical level, it makes it clear how important simulation is, and how important it is to have the simplest possible models for things.

And at for example a philosophical level, I think it finally gives an explanation of how there can be both free will and determinism. Things are determined, but to figure them out requires an irreducibly large computation.

But OK. So the Principle of Computational Equivalence has another implication, that relates to intelligence. There are various concepts—like life, for example—that always seem a bit elusive. I mean, it’s pretty easy to tell what’s alive and what’s not here on earth. But all life shares a common history, and has all sorts of detailed features, like cell membranes and RNA and so on, in common.

But what’s the abstract definition of life? Well, there are various candidates. But realistically they all fail. And either we have to fall back on the shared history definition, or we have to just say in effect that what we need is a certain degree of computational sophistication.

Well, it’s the same thing with intelligence. We know sort of historically what human-like intelligence is about. But we don’t have a clear abstract definition, independent of the history. And I don’t think there ultimately is one.

In fact, it’s sort of a consequence of the Principle of Computational Equivalence that we can’t make one. And that all sorts of systems are ultimately equivalent in this way.

You know, there are expressions like “the weather has a mind of its own”. And one might have thought that that was just some primitive animistic point of view.

But what the Principle of Computational Equivalence suggests is that in fact there is a fundamental equivalence between what’s going on in the fluid turbulence in the atmosphere, and things like the patterns of neuron firings in our brains.

And you know, this type of issue gets cast into a more definite form when one starts thinking about extraterrestrial intelligence. If we see some sophisticated signal coming from the cosmos, does it necessarily follow that it needed some whole development of an intelligent civilization to make it? Or could it instead just come from some physical process with simple rules?

Well, our usual intuition would be that if we see something sophisticated, it must have a sophisticated cause. But from what we’ve discovered in the computational universe—and encapsulated in the Principle of Computational Equivalence—that’s not the case. And when we see those little glitches in signals from a pulsar, we can’t really say that they’re not associated with something like intelligence.

And of course historically things like this were often plenty confusing. Like Tesla’s radio signals from Mars, that turned out to be modes in the ionosphere.

And it sort of gets even worse.

Let’s imagine for a moment the distant future. Where technology discovered from the computational universe is widely used. And all our processes of human thinking and so on are implemented at a molecular scale, by motions of electrons in some block of something or another.

Now imagine we find that block lying around somewhere, and ask whether what it’s doing is intelligent. I don’t think that’s really a meaningful question as such.

And in fact I don’t think there’ll ever be a fundamental distinction between the processes that go on in that block, and in a pretty generic block of material with electrons whizzing around. As we learn from the Principle of Computational Equivalence, at a fundamental level, they’re both doing the same kind of thing.

Of course, at the level of details there can be a huge distinction. One of them can have an elaborate history that’s all about the details of our evolution and civilization. And the other doesn’t. And as I’ll talk about in a bit, this is all bound up with issues of purpose and so on. But at the level of just looking at these blocks of material, without the details of history, there’s no fundamental distinction.

Well, needless to say, this realization also has implications for artificial intelligence.

You know, when I was younger I used to think that there’d be some great idea, some core breakthrough, that would suddenly give us artificial intelligence.

But what I’ve gradually realized, especially through the Principle of Computational Equivalence, is that that’s not how it’ll work. Because in a sense all this intelligence that we’re trying to make is ultimately, at an abstract level, just computation. That might sound very abstract and philosophical. But at least for me it’s had a big practical consequence.

You know, when I was a kid I was really interested in the problem of taking the world’s knowledge, and setting it up so that it was possible to automatically ask questions on the basis of it.

Well, it seemed like a hard problem. Because to solve it it seemed like one would have to solve the general problem of artificial intelligence. But after I’d come up with the Principle of Computational Equivalence, I gradually realized that that wasn’t true. And that in a sense all one had to do was computation.

Well, at a personal level, I had this great system for doing computation—Mathematica. With a language that could very efficiently represent all this abstract stuff. And a whole giant web of algorithms covering pretty much every fundamental area.

And so I thought: OK, so maybe it isn’t so impossible or crazy to build a system that will make the world’s knowledge computable. And that was how I came to start building Wolfram|Alpha.

I have to say that I’m still often surprised that Wolfram|Alpha is actually possible as a practical matter at this point in history. It’s an incredibly complicated technological object. But I’m happy to say that the big discovery of the last couple of years is that it actually does work. And it’s steadily expanding, domain by domain, in effect automating the process of delivering expert-level knowledge.

And making it so that if there’s something that could be figured out by an expert from the knowledge that our civilization has accumulated, then Wolfram|Alpha can automatically figure it out.

From the point of view of democratizing knowledge, it’s pretty exciting, and it’s clear it’s already leading to some pretty interesting things. Inside it’s a strange kind of object. I mean, it starts off from all sorts of sources of data. That at first is just raw data… but the real work is in making the data computable. Making it so that one isn’t just looking things up, but instead one’s able to figure things out from the data.

Now in that process, a big piece is that one has to implement all the various methods and models and algorithms that have been developed across science and other areas. And one has to capture the expertise of actual human experts; they’re always needed. So then the result is that one can compute all kinds of things.

But then the challenge is to be able to say what to compute. And the only realistic way to do that is to be able to understand actual human language, or actually those strange utterances that people enter in various ways to Wolfram|Alpha.

And—somewhat to my surprise—using a bunch of thinking from A New Kind of Science (NKS), it’s turned out actually to be possible to do a good job of this. In effect turning human utterances—that in the textual domain actually often seem to be rather close to raw human thoughts—into a systematic symbolic internal representation. From which one can compute answers, and generate all those elaborate reports that you see in Wolfram|Alpha.

It’s kind of interesting what happens in the actuality of Wolfram|Alpha. People are always asking it new things. Asking it to compute answers in their specific situation.

The web has lots of stuff in it. But searching it is quite a different proposition. Because all you’re ever doing is looking at things people happened to write down. What Wolfram|Alpha is doing is actually figuring out new, specific, things. In some ways, it’s achieving all sorts of things people have said in the past are characteristic of an artificial intelligence.

But it’s interesting the extent to which it’s not like a human intelligence. I mean, think for example about how it solves some physics problem. It could do it like a human, reasoning through to an answer, kind of medieval natural philosophy style. But instead what it does is to make use of the last 300 years of science. From an AI point of view it kind of cheats. It just sets up the equations, and blasts through to the answer.

It’s really not trying to emulate some kind of human-like intelligence. Rather, it’s trying to be the structure that all that human-like intelligence can build, to do what it does as efficiently as possible. It’s not trying to be a bird. It’s trying to be an airplane.

Well, so now I’ve explained a bit about my world view. And a bit about how it’s led me to some very practical things like Wolfram|Alpha.

Let me talk now a little bit about what I think it tells us about the future.

I’ll talk both about the fairly near-term future, and about the much more distant future. I don’t talk much about the future usually. I find it a bit weird. I like to just deliver stuff, not talk about what could be delivered.

But I certainly think a lot about the future myself. And I’ve ended up with a whole inventory of major projects that I think can be done in the future. But I’m waiting for the right year, or the right decade, to do them.

I always try to remember what I predicted about the future, and then I check later whether I got it right. Sometimes it’s kind of depressing.

Like in 1987 I worked with a team of students to win a competition about predicting the personal computer of the year 2000. At the time it seemed pretty obvious how some things were going to play out. And looking recently at what we said, it’s depressing how accurate it was. Touchscreen tablet with all these characteristics and uses and so on.

I guess there are always things that are pretty much progressing in straight lines.

I must say personally I always much prefer to build what I think of as “alien artifacts”: stuff people didn’t even imagine was possible until it arrives. But anyway, what can we see in kind of straight lines from where we are?

Well, first, data and computation are going to be more and more ubiquitous. We’ll have sensors and things that give us data on everything. And we’ll be able to compute more and more from it.

It used to be the case that one had to just live from one’s wits, and from what one happened to know and be able to work out.

But then long ago there were books, that could spread knowledge in a systematic way. And more recently there started to be algorithms, and the web, and computational knowledge, and everything.

We made this poster recently about the advance of systematic knowledge in the world, and the ability to compute from it—from the Babylonians to now. It’s been a pretty important driving force in the progress of civilization, gradually getting more systematic, and more automated.

Well, OK, so at least in my little corner of the world, we have Wolfram|Alpha, which takes lots of systematic knowledge, and lets one be able to compute answers from it.

And as that gets done more and more, more and more of what happens in the world will become understandable, and predictable. One will routinely know what’s going to happen—at least up to the limits of what computational irreducibility allows.

Right now, one usually still has to ask for it: explicitly say what one wants. But increasingly the knowledge we need will just be delivered preemptively, when and where we need it. With all kinds of interesting new interface technologies, that kind of link more and more directly into our senses.

There’s a big question of knowing what knowledge to deliver, though.

And for that, our systems have to know more and more about us. Which isn’t going to be hard. I mean, informational packrats like me have been collecting data on themselves for ages. I’ve got dozens of streams of data, including things like every keystroke I’ve typed in the past 20 years, and so on.

But all of this will become completely ubiquitous. We’ll all be routinely doing all sorts of personal analytics. And judging from my own experience we’ll quickly learn some interesting things about ourselves from it.

But more than that, it’ll allow our systems to successfully deliver knowledge to us preemptively.

There are all kinds of detailed issues. Will it be that our systems can systematically sense things because their environment is explicitly tagged, or will they have to deduce things indirectly with vision systems and so on? But the end result is that quite soon we’ll have an increasing symbiosis with our computational systems.

In fact, in Wolfram|Alpha for example, in just a few short weeks, it’ll be able to start taking images and data as well as language-based queries as input.

And increasingly our computational systems will be able to predict things, optimize things, communicate things. Much more effectively than us humans ever can.

But here’s a critical point: we can have all this amazing computation; effectively all this amazing intelligence.

But the question is: what is it supposed to do? What is its purpose?

You know, you look at all these systems in the computational universe, and you see them doing what they’re doing. But what is their purpose?

Well, sometimes we can look at a system and say, “What that system does is more economically explained by saying it achieves a certain purpose than just that it operates according to a certain mechanism”. But ultimately our description of purposes is a very human thing—and very tied into the thread of history that runs through our civilization. I mean, when the thread of history is broken, it’s very hard, even with something like Stonehenge, to know what its purpose was supposed to be.

You know, when we look at the future, it’s pretty obvious that more and more of our world can be automated. At an informational level. And at a physical level.

One of my pet future projects, that I don’t think we’re quite ready for yet, is to really turn robotics into a software problem.

I imagine some bizarre collection of little tiny identical objects, moving around perhaps Rubik’s Cube style, operating a bit like cells in a cellular automaton. A kind of universal mechanical object, that configures itself in whatever way it needs. And I’m guessing this can be done at a molecular level too. And I’ve got some pretty definite ideas from NKS about how to do it.

But I don’t think the ambient technology exists yet; there’s still a lot of practical infrastructure to be built.

When so much can be automated—even, as I mentioned before, creative things—it’ll be interesting how economics change. Some things will still be scarce, but so much will in a sense be infinitely cheap.

And then of course there’s us humans as biological entities. One question one can ask is how the kind of world view I have affects how one thinks about that.

I mean, people like me have all the data about their genomes, for example. But how does one build the whole organism from that? What are the kinds of architectural principles? Should we imagine that it’s kind of like flowcharts or simple systems of chemical equations? Where one thing affects another, and we can just trace the diagrams around?

I suspect that in many cases it’s more complicated. It’s more like what we see all over the computational universe. I mean, lots of cellular automata look so biological in their behavior. And we now know lots of detailed examples where we can map systems like cellular automata onto biological systems—whether at a macroscopic or microscopic level.

And what we realize is that many aspects of biological systems are operating like simple programs—often with very complex behavior. And that means all those phenomena like computational irreducibility come in.

Will some tumor-like process in a biological system grow forever or not? That might be like the halting problem for a Turing machine; it might be formally undecidable, and to know what will happen for any finite time we’d just have to simulate each step.
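The halting-problem analogy can be sketched in Python with a tiny Turing machine simulator that runs under a step budget. The function `run` and the two example machines are illustrative assumptions, not anything from the talk. The first machine is the standard two-state busy beaver and the second is a trivial machine that never halts.

```python
def run(machine, max_steps):
    """Run a Turing machine on a blank tape.

    Returns the number of steps taken if it halts within max_steps, else None.
    """
    tape, head, state = {}, 0, "A"
    for steps in range(1, max_steps + 1):
        write, move, state = machine[(state, tape.get(head, 0))]
        tape[head] = write
        head += move
        if state == "HALT":
            return steps
    return None  # budget exhausted: undetermined, which is not the same as "runs forever"


# (state, symbol read) -> (symbol to write, head movement, next state)
BUSY_BEAVER_2 = {
    ("A", 0): (1, +1, "B"),
    ("A", 1): (1, -1, "B"),
    ("B", 0): (1, -1, "A"),
    ("B", 1): (1, +1, "HALT"),
}

LOOPER = {("A", 0): (0, +1, "A")}  # keeps moving right forever

if __name__ == "__main__":
    print(run(BUSY_BEAVER_2, 100))
    print(run(LOOPER, 1000))
```

A finite budget can confirm that a machine halts, but a `None` result never proves that it will not. In general, no larger budget fixes that, because the question is undecidable.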

And, you know, one starts thinking about all sorts of basic questions in medicine.

Here’s an analogy. Think of a human as being a bit like a big computer system. The system runs just fine for a while. But gradually it builds up more and more cruft. Buffers get full, whatever. And no doubt the system has bugs. And those sometimes get in the way. Well, eventually the system gets so messed up that it just crashes—it dies. And that’s rather like what seems to happen with us humans.

And of course with the system one can just reboot, and start again from the same underlying code, just like the next generation for humans can start again from more or less the same genome.

You know, for humans we have all this medical diagnosis stuff. All these diagnosis codes for particular diseases and so on. We could imagine doing that for computer systems too. Diseases of the display subsystem. Diseases of memory management. Trauma to I/O systems. And so on. I think it’d be instructive to really work through this.

What I think one will find is that the idea of very specific diseases is not really right. There are all these different shades; things that can’t even be described just by parameters, they have to be described by different algorithms.

When we run all the servers for Wolfram|Alpha, for example, we have, by the standards of the computer industry, very beautiful Mathematica-powered dashboards showing us the overall health of the different pieces of the system.

But they’re really very coarse. And I think it’ll be interesting to see how much more detail we can represent well. I mean, there’s a lot of computational irreducibility lurking around. The very phenomenon of bugs is a consequence of computational irreducibility.

But the question is: how should one best make a detailed dashboard that allows one to trace the path of problems in a big computer system?

It’s a model for how we should do it for us humans, sampling all sorts of things with sensors, or genomic assays, or whatever. Sampling what’s happening, then computing to see what consequences things will have.

In the case of big computer systems, we tend to just use redundancy and parallelism, and not worry too much about individual pieces dying. So we don’t yet know much about how we’d go in and do interventions.

But with humans we care about each one, and we want to be able to do that. It’s going to be a tough battle with computational irreducibility. No doubt we’ll have algorithmic drugs and so on. Where molecules can effectively compute what to do inside our bodies.

But figuring out the consequences of particular actions will be difficult: that’s the lesson of computational irreducibility.

Still, I’m quite sure that in time, for all practical purposes we’ll be able to fix our biology, and keep things running forever. Actually, I have to say that an intermediate step will surely be various forms of biostasis.

I’m always amused at what happens with things in science. Like I remember cloning. Where I asked a zillion times why mammalian cloning couldn’t be done. And there were always these very detailed arguments. Well, as we know, eventually this kind of weird procedure got invented that made it possible.

And I guess I very strongly suspect the same kind of thing will happen with cryonics. I’m sure there’s nothing fundamentally impossible about it. And right now it’s kind of a weird non-respectable thing to study. But one day some wacky procedure will be invented that just does it. And it’ll immediately change all sorts of attitudes and psychology about death.

But it’s only just one step. In the end, one way or another, effective human immortality will be achieved. And it’ll be the single largest discontinuity in human history.

I wonder what’s on the other side though. I mean, so much of society, and human motivation, and so on, is tied up with mortality. When we have immortality, and all sorts of technology, we’ll at some level be able to do almost anything.

So then the big question is: what will we choose to do? Where will our overarching purpose come from?

It’s so strange how our purposes have evolved over the course of human history. So much of what we do today—in our intellectual world, or for that matter in virtual worlds and so on—would seem utterly pointless to people from another age.

I think computational irreducibility has something to say here. I think it implies that there at least exists a sort of endless frontier of different purposes that can be built on one another. In a sense, computational irreducibility is ultimately why history is meaningful. For if everything was reducible, one would just always be able to jump ahead, and nothing would be achieved by all those steps of history.

But even though new purposes and new history can be built, will we choose to do so?

It is hard to know. Perhaps for all practical purposes, history will end.

You know, I have a strange guess about at least part of this. My guess is that when in a sense almost anything is possible, there’ll be an almost religious interest not in the future but in the past.

Perhaps it’ll be like the Middle Ages, when it was the ancients who had the wisdom. And in the future, people will seek their purposes by looking at what purposes existed at a time when not everything was yet possible.

And perhaps it will be our times now that will be of the greatest interest. For we are just at that point in history where a lot is getting recorded, but not everything is possible. It’s a big responsibility if our times will define the purposes for all of the future. But perhaps it will be that way.

You know, I talk about everything being possible. But ultimately we’re just physical entities, governed by the laws of physics.

So an obvious question is what those laws ultimately are.

And, you know, the world view that I’ve developed has a lot to say about that too.

Ultimately the real point is: if our universe is governed by definite rules, it must in effect be one of those programs that’s out there in the computational universe.

Now it could be a huge program—like a giant operating system. Or it could be a tiny program—just a few lines of code.

In the past, it would have seemed inconceivable that all the richness of our universe could be generated just by a few simple lines of code. But once we’ve seen what’s possible, and what’s out there in the computational universe, it’s a whole different story.

I won’t get into this in detail here. It’s a big topic. But suffice it to say that if the universe can really be represented by a few simple lines of code, then it’s inevitable that that code must operate at a very low level. Below, for example, our current notions of space, and time, and quantum mechanics, and so on.

Well, I don’t think we can know a priori whether our universe is actually a simple program. Of course, we know it’s not as complicated as it could be, because after all, there is order in the universe.

But we don’t know how simple it might be. And I suppose it seems very non-Copernican to imagine that our universe happens to be one of the simple ones.

But still, if it is simple, we should be able to find it just by searching the computational universe of possible universes. And if it’s out there to be found, it seems to me embarrassing that we wouldn’t at least try looking for it. Which is why, when I’m not distracted by fascinating technology to build, I’ve worked a lot on doing that search.

Actually, I had thought that I’d have to search through billions of candidate universes to find one that was at all plausible. And at the front lines of the universe hunt, the easy part is rejecting candidate universes that are obviously not ours: that have no notion of time, or an infinite number of dimensions of space, or no possibility of causality, or whatever.
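The "enumerate candidates, throw out the obviously wrong ones" part of such a search can be sketched as a toy in Python. To be clear about the assumptions: this uses the 256 elementary cellular automata as stand-in "universes", which is not the kind of model actually being searched, and the rejection test (`obviously_trivial`, a made-up name) is deliberately crude. It only rejects rules whose evolution from a single cell repeats an earlier state within a few steps.

```python
def step(cells, rule):
    """One step of an elementary cellular automaton with wraparound edges."""
    n = len(cells)
    return [
        (rule >> (cells[(i - 1) % n] << 2 | cells[i] << 1 | cells[(i + 1) % n])) & 1
        for i in range(n)
    ]


def obviously_trivial(rule, width=81, steps=40):
    """Crude filter: reject a rule if its evolution from a single cell
    revisits an earlier state (dies out, freezes, or cycles) within `steps`."""
    cells = [0] * width
    cells[width // 2] = 1
    seen = {tuple(cells)}
    for _ in range(steps):
        cells = step(cells, rule)
        key = tuple(cells)
        if key in seen:
            return True
        seen.add(key)
    return False


def surviving_rules():
    """Enumerate all 256 candidate rules and keep those not rejected."""
    return [rule for rule in range(256) if not obviously_trivial(rule)]


if __name__ == "__main__":
    survivors = surviving_rules()
    print(len(survivors), "of 256 candidates survive the crude filter")
```

A filter like this lets plenty of boring candidates through (a rule that just shifts one cell sideways survives, for instance), so it only handles the easy rejections; deciding what to make of the survivors is the hard part.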

But here’s the surprising thing I’ve found. Even in the first thousand universes or so, there are ones that aren’t obviously not our universe. You run them on a computer. You get billions of little microscopic nodes or whatever. And the model universe is blobbing around.

But is it our universe, or not?

Well, computational irreducibility bites us once again. Because it tells us we might have to simulate all the actual steps in the universe to find out.

Well, in practice, I’m hopeful that there are pockets of computational reducibility that will let us get a foothold in comparing with the laws we know for our universe. And when one’s dealing with sufficiently simple models, there are no knobs to turn: they’re either dead right or dead wrong.

I don’t know how it’ll come out. But I think it’s far from impossible that in a limited time, we’ll effectively hold in our hands a little program, and be able to say: “This is our universe, in every precise detail”. Just run it and you’ll grow our universe, and everything that happens in it.

Well, then we’ll be asking why it’s this program, and not another. And that’ll be an interesting and strange question. Which I suspect might be resolved, as such questions so often are, by realizing that it’s actually a meaningless question.

Because perhaps by some principle beyond the Principle of Computational Equivalence, all nontrivial universes are in some fundamental sense precisely equivalent when viewed by entities within them.

I’m not sure. But if we do manage to find the fundamental theory—the fundamental program—for the universe, it’ll ground our sense of what’s possible.

It still won’t be easy—because of computational irreducibility—to answer everything. It might even for example be undecidable whether something like warp drive is possible. But we’ll have at least gotten to the edge of science in a certain direction.

Alright. Well, I think I should wrap up here.

All this started with this one little experiment.

A sort of crack for me in everything I thought I knew about science. That gradually widened to give a whole world view. That I’ve tried to explain a bit here. And that I think is going to be increasingly important in understanding and defining our future.

Thank you very much.