Transcript of a talk at TED AI on October 17, 2023, in San Francisco

Human language. Mathematics. Logic. These are all ways to formalize the world. And in our century there’s a new and yet more powerful one: computation.

And for nearly 50 years I’ve had the great privilege of building an ever taller tower of science and technology based on that idea of computation. And today I want to tell you some of what that’s led to.

There’s a lot to talk about—so I’m going to go quickly… sometimes with just a sentence summarizing what I’ve written a whole book about.

You know, I last gave a TED talk thirteen years ago—in February 2010—soon after Wolfram|Alpha launched.

And I ended that talk with a question: is computation ultimately what’s underneath everything in our universe?

I gave myself a decade to find out. And actually it could have needed a century. But in April 2020—just after the decade mark—we were thrilled to be able to announce what seems to be the ultimate “machine code” of the universe.

And, yes, it’s computational. So computation isn’t just a possible formalization; it’s the ultimate one for our universe.

It all starts from the idea that space—like matter—is made of discrete elements. And that the structure of space and everything in it is just defined by the network of relations between these elements—that we might call atoms of space. It’s very elegant—but deeply abstract.

But here’s a humanized representation:

A version of the very beginning of the universe. And what we’re seeing here is the emergence of space and everything in it by the successive application of very simple computational rules. And, remember, those dots are not atoms in any existing space. They’re atoms of space—that are getting put together to make space. And, yes, if we kept going long enough, we could build our whole universe this way.
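As a rough illustration of "successive application of very simple computational rules", here is a minimal Python sketch. The specific rule (each relation `(x, y)` is kept and a new relation `(y, z)` to a fresh element `z` is added), the single self-loop starting condition, and the names `step` and `evolve` are all assumptions chosen for illustration; this is not the rule shown in the talk.

```python
def step(edges, next_node):
    """Apply the illustrative rule (x, y) -> (x, y), (y, z) to every relation once.

    z is a brand-new element each time, so the network grows
    without reference to any pre-existing space.
    """
    new_edges = []
    for x, y in edges:
        new_edges.append((x, y))          # keep the original relation
        new_edges.append((y, next_node))  # attach a fresh element to y
        next_node += 1
    return new_edges, next_node


def evolve(steps):
    """Start from a single self-loop and apply the rule `steps` times."""
    edges, next_node = [(0, 0)], 1
    for _ in range(steps):
        edges, next_node = step(edges, next_node)
    return edges


if __name__ == "__main__":
    for t in range(5):
        edges = evolve(t)
        nodes = {n for edge in edges for n in edge}
        print(t, len(nodes), len(edges))
```

With this toy rule the number of relations doubles at every step. Richer rules of the same kind are what produce the large-scale structure described in the talk.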

Eons later here’s a chunk of space with two little black holes, that eventually merge, radiating ripples of gravitational radiation:

And remember—all this is built from pure computation. But like fluid mechanics emerging from molecules, what emerges here is spacetime—and Einstein’s equations for gravity. Though there are deviations that we just might be able to detect. Like that the dimensionality of space won’t always be precisely 3.

And there’s something else. Our computational rules can inevitably be applied in many ways, each defining a different thread of time—a different path of history—that can branch and merge:

But as observers embedded in this universe, we’re branching and merging too. And it turns out that quantum mechanics emerges as the story of how branching minds perceive a branching universe.
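The branching and merging of histories can be sketched with a toy string-rewriting system. This is only an illustrative assumption: the rules `A -> AB` and `B -> A`, the starting string, and the names `successors` and `multiway` are made up here, and the Physics Project applies the idea to networks rather than strings.

```python
# Illustrative rewrite rules (an assumption, not taken from the talk).
RULES = [("A", "AB"), ("B", "A")]


def successors(state):
    """Return every string reachable by applying one rule at one position."""
    out = set()
    for lhs, rhs in RULES:
        start = state.find(lhs)
        while start != -1:
            out.add(state[:start] + rhs + state[start + len(lhs):])
            start = state.find(lhs, start + 1)
    return out


def multiway(initial, steps):
    """Collect the set of distinct states at each step, over all histories."""
    layers = [{initial}]
    for _ in range(steps):
        layers.append(set().union(*(successors(s) for s in layers[-1])))
    return layers


if __name__ == "__main__":
    for t, layer in enumerate(multiway("A", 3)):
        print(t, sorted(layer))
```

Starting from `"A"`, the second step branches into `"ABB"` and `"AA"`. At the third step both of those can produce `"AAB"` and `"ABA"`, so two separate histories merge again.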

The little pink lines here show the structure of what we call branchial space—the space of quantum branches. And one of the stunningly beautiful things—at least for a physicist like me—is that the same phenomenon that in physical space gives us gravity, in branchial space gives us quantum mechanics.

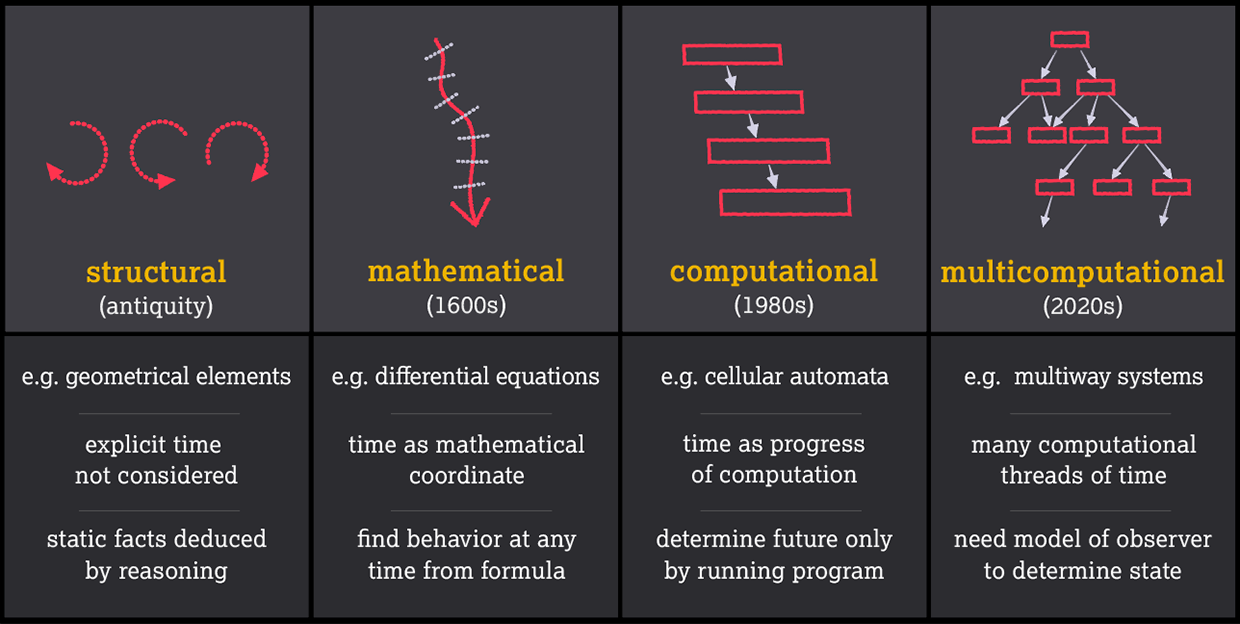

In the history of science so far, I think we can identify four broad paradigms for making models of the world—that can be distinguished by how they deal with time.

In antiquity—and in plenty of areas of science even today—it’s all about “what things are made of”, and time doesn’t really enter. But in the 1600s came the idea of modeling things with mathematical formulas—in which time enters, but basically just as a coordinate value.

Then in the 1980s—and this is something in which I was deeply involved—came the idea of making models by starting with simple computational rules and then just letting them run:

Can one predict what will happen? No, there’s what I call computational irreducibility: in effect the passage of time corresponds to an irreducible computation that we have to run to know how it will turn out.
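To make "starting with simple computational rules and then just letting them run" concrete, here is a minimal sketch of an elementary cellular automaton in Python. The choice of rule 30, the wrap-around boundary, and the function names `ca_step` and `run` are assumptions made for illustration. The talk does not say which rule its picture used.

```python
def ca_step(cells, rule=30):
    """Compute one step of an elementary cellular automaton.

    Each new cell depends on its left neighbor, itself and its right
    neighbor. Those three bits form a number 0..7 that selects one bit
    of `rule`. The row wraps around at the edges.
    """
    n = len(cells)
    return [
        (rule >> (cells[(i - 1) % n] * 4 + cells[i] * 2 + cells[(i + 1) % n])) & 1
        for i in range(n)
    ]


def run(width=31, steps=15, rule=30):
    """Start from a single black cell and return all rows of the evolution."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = ca_step(cells, rule)
        rows.append(cells)
    return rows


if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else " " for c in row))
```

For a rule like this one, no shortcut formula is known for, say, the center cell after many steps. In practice one finds out by running every step, which is the sense of irreducibility described above.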

But now there’s something even more: in our Physics Project things become multicomputational, with many threads of time, that can only be knitted together by an observer.

It’s a new paradigm—that actually seems to unlock things not only in fundamental physics, but also in the foundations of mathematics and computer science, and possibly in areas like biology and economics too.

You know, I talked about building up the universe by repeatedly applying a computational rule. But how is that rule picked? Well, actually, it isn’t. Because all possible rules are used. And we’re building up what I call the ruliad: the deeply abstract but unique object that is the entangled limit of all possible computational processes. Here’s a tiny fragment of it shown in terms of Turing machines:

OK, so the ruliad is everything. And we as observers are necessarily part of it. In the ruliad as a whole, everything computationally possible can happen. But observers like us can just sample specific slices of the ruliad.

And there are two crucial facts about us. First, we’re computationally bounded—our minds are limited. And second, we believe we’re persistent in time—even though we’re made of different atoms of space at every moment.

So then here’s the big result. What observers with those characteristics perceive in the ruliad necessarily follows certain laws. And those laws turn out to be precisely the three key theories of 20th-century physics: general relativity, quantum mechanics, and statistical mechanics, including the Second Law of thermodynamics.

It’s because we’re observers like us that we perceive the laws of physics we do.

We can think of different minds as being at different places in rulial space. Human minds that think alike are nearby. Animals are further away. And further out we get to alien minds where it’s hard to make a translation.

How can we get intuition for all this? We can use generative AI to take what amounts to an incredibly tiny slice of the ruliad—aligned with images we humans have produced.

We can think of this as a place in the ruliad described using the concept of a cat in a party hat:

Zooming out, we see what we might call “cat island”. But pretty soon we’re in interconcept space. Occasionally things will look familiar, but mostly we’ll see things we humans don’t have words for.

In physical space we explore more of the universe by sending out spacecraft. In rulial space we explore more by expanding our concepts and our paradigms.

We can get a sense of what’s out there by sampling possible rules—doing what I call ruliology:

Even with incredibly simple rules there’s incredible richness. But the issue is that most of it doesn’t yet connect with things we humans understand or care about. It’s like when we look at the natural world and only gradually realize we can use features of it for technology. Even after everything our civilization has achieved, we’re just at the very, very beginning of exploring rulial space.
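A very small version of this kind of survey can be sketched in Python by running all 256 elementary cellular automaton rules and recording one crude feature of each. The choice of this rule family, the feature measured (the number of black cells in the final row), and the names `ca_step`, `final_black_cells` and `survey` are illustrative assumptions rather than anything specified in the talk.

```python
def ca_step(cells, rule):
    """One step of an elementary cellular automaton with wrap-around edges."""
    n = len(cells)
    return [
        (rule >> (cells[(i - 1) % n] * 4 + cells[i] * 2 + cells[(i + 1) % n])) & 1
        for i in range(n)
    ]


def final_black_cells(rule, width=41, steps=20):
    """Run `rule` from a single black cell and count black cells at the end."""
    cells = [0] * width
    cells[width // 2] = 1
    for _ in range(steps):
        cells = ca_step(cells, rule)
    return sum(cells)


def survey(width=41, steps=20):
    """Map every rule number 0..255 to its final black-cell count."""
    return {rule: final_black_cells(rule, width, steps) for rule in range(256)}


if __name__ == "__main__":
    counts = survey()
    print("distinct outcomes:", len(set(counts.values())))
    print("rule 30:", counts[30], "rule 90:", counts[90], "rule 110:", counts[110])
```

A single number per rule throws away nearly all of the structure, so this is only a starting point. Actually looking at the patterns is what reveals the richness described above.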

But what about AIs? Just like we can do ruliology, AIs can in principle go out and explore rulial space. But left to their own devices, they’ll mostly be doing things we humans don’t connect with, or care about.

The big achievements of AI in recent times have been about making systems that are closely aligned with us humans. We train LLMs on billions of webpages so they can produce text that’s typical of what we humans write. And, yes, the fact that this works is undoubtedly telling us some deep scientific things about the semantic grammar of language—and generalizations of things like logic—that perhaps we should have known centuries ago.

You know, for much of human history we were kind of like LLMs, figuring things out by matching patterns in our minds. But then came more systematic formalization—and eventually computation. And with that we got a whole other level of power—to create truly new things, and in effect to go wherever we want in the ruliad.

But the challenge is to do that in a way that connects with what we humans—and our AIs—understand.

And in fact I’ve devoted a large part of my life to building that bridge. It’s all been about creating a language for expressing ourselves computationally: a language for computational thinking.

The goal is to formalize what we know about the world—in computational terms. To have computational ways to represent cities and chemicals and movies and formulas—and our knowledge about them.

It’s been a vast undertaking—that’s spanned more than four decades of my life. It’s something truly unique. But I’m happy to report that in what has been Mathematica and is now the Wolfram Language I think we have now firmly succeeded in creating a truly full-scale computational language.

In effect, every one of the functions here can be thought of as formalizing—and encapsulating in computational terms—some facet of the intellectual achievements of our civilization:

It’s the most concentrated form of intellectual expression I know: finding the essence of everything and coherently expressing it in the design of our computational language. For me personally it’s been an amazing journey, year after year building the tower of ideas and technology that’s needed—and nowadays sharing that process with the world on open livestreams.

A few centuries ago the development of mathematical notation, and what amounts to the “language of mathematics”, gave a systematic way to express math—and made possible algebra, and calculus, and ultimately all of modern mathematical science. And computational language now provides a similar path—letting us ultimately create a “computational X” for all imaginable fields X.

We’ve seen the growth of computer science—CS. But computational language opens up something ultimately much bigger and broader: CX. For 70 years we’ve had programming languages—which are about telling computers in their terms what to do. But computational language is about something intellectually much bigger: it’s about taking everything we can think about and operationalizing it in computational terms.

You know, I built the Wolfram Language first and foremost because I wanted to use it myself. And now when I use it, I feel like it’s giving me a superpower:

I just have to imagine something in computational terms and then the language almost magically lets me bring it into reality, see its consequences and then build on them. And, yes, that’s the superpower that’s let me do things like our Physics Project.

And over the past 35 years it’s been my great privilege to share this superpower with many other people—and by doing so to have enabled such an incredible number of advances across so many fields. It’s a wonderful thing to see people—researchers, CEOs, kids—using our language to fluently think in computational terms, crispening up their own thinking and then in effect automatically calling in computational superpowers.

And now it’s not just people who can do that. AIs can use our computational language as a tool too. Yes, to get their facts straight, but even more importantly, to compute new facts. There are already some integrations of our technology into LLMs—and there’s a lot more you’ll be seeing soon. And, you know, when it comes to building new things, a very powerful emerging workflow is basically to start by telling the LLM roughly what you want, then have it try to express that in precise Wolfram Language. Then—and this is a critical feature of our computational language compared to a programming language—you as a human can “read the code”. And if it does what you want, you can use it as a dependable component to build on.

OK, but let’s say we use more and more AI—and more and more computation. What’s the world going to be like? From the Industrial Revolution on, we’ve been used to doing engineering where we can in effect “see how the gears mesh” to “understand” how things work. But computational irreducibility now shows that won’t always be possible. We won’t always be able to make a simple human—or, say, mathematical—narrative to explain or predict what a system will do.

And, yes, this is science in effect eating itself from the inside. From all the successes of mathematical science we’ve come to believe that somehow—if only we could find them—there’d be formulas to predict everything. But now computational irreducibility shows that isn’t true. And that in effect to find out what a system will do, we have to go through the same irreducible computational steps as the system itself.

Yes, it’s a weakness of science. But it’s also why the passage of time is significant—and meaningful. We can’t just jump ahead and get the answer; we have to “live the steps”.

It’s going to be a great societal dilemma of the future. If we let our AIs achieve their full computational potential, they’ll have lots of computational irreducibility, and we won’t be able to predict what they’ll do. But if we put constraints on them to make them predictable, we’ll limit what they can do for us.

So what will it feel like if our world is full of computational irreducibility? Well, it’s really nothing new—because that’s the story with much of nature. And what’s happened there is that we’ve found ways to operate within nature—even though nature can still surprise us.

And so it will be with the AIs. We might give them a constitution, but there will always be consequences we can’t predict. Of course, even figuring out societally what we want from the AIs is hard. Maybe we need a promptocracy where people write prompts instead of just voting. But basically every control-the-outcome scheme seems full of both political philosophy and computational irreducibility gotchas.

You know, if we look at the whole arc of human history, the one thing that’s systematically changed is that more and more gets automated. And LLMs just gave us a dramatic and unexpected example of that. So does that mean that in the end we humans will have nothing to do? Well, if you look at history, what seems to happen is that when one thing gets automated away, it opens up lots of new things to do. And as economies develop, the pie chart of occupations seems to get more and more fragmented.

And now we’re back to the ruliad. Because at a foundational level what’s happening is that automation is opening up more directions to go in the ruliad. And there’s no abstract way to choose between them. It’s just a question of what we humans want—and it requires humans “doing work” to define that.

A society of AIs untethered by human input would effectively go off and explore the whole ruliad. But most of what they’d do would seem to us random and pointless. Much as most of nature today doesn’t seem to be “achieving a purpose”.

One used to imagine that to build things that are useful to us, we’d have to do it step by step. But AI and the whole phenomenon of computation tell us that really what we need is more just to define what we want. Then computation, AI, automation can make it happen.

And, yes, I think the key to defining in a clear way what we want is computational language. You know—even after 35 years—for many people the Wolfram Language is still an artifact from the future. If your job is to program, it seems like a cheat: how come you can do in an hour what would usually take a week? But it can also be daunting, because having dashed off that one thing, you now have to conceptualize the next thing. Of course, it’s great for CEOs and CTOs and intellectual leaders who are ready to race on to the next thing. And indeed it’s impressively popular in that set.

In a sense, what’s happening is that with Wolfram Language the focus shifts from mechanics to conceptualization. And the key to that conceptualization is broad computational thinking. So how can one learn to do that? It’s not really a story of CS. It’s really a story of CX. And as a kind of education, it’s more like liberal arts than STEM. It’s part of a trend that when you automate technical execution, what becomes important is not figuring out how to do things—but what to do. And that’s more a story of broad knowledge and general thinking than any kind of narrow specialization.

You know, there’s an unexpected human-centeredness to all of this. We might have thought that with the advance of science and technology, the particulars of us humans would become ever less relevant. But we’ve discovered that that’s not true. And that in fact everything—even our physics—depends on how we humans happen to have sampled the ruliad.

Before our Physics Project we didn’t know if our universe really was computational. But now it’s pretty clear that it is. And from that we’re inexorably led to the ruliad—with all its vastness, so hugely greater than all the physical space in our universe.

So where will we go in the ruliad? Computational language is what lets us chart our path. It lets us humans define our goals and our journeys. And what’s amazing is that all the power and depth of what’s out there in the ruliad is accessible to everyone. One just has to learn to harness those computational superpowers. Which starts here. Our portal to the ruliad: