From the Foundations Laid by A New Kind of Science

When A New Kind of Science was published twenty years ago I thought what it had to say was important. But what’s become increasingly clear—particularly in the last few years—is that it’s actually even more important than I ever imagined. My original goal in A New Kind of Science was to take a step beyond the mathematical paradigm that had defined the state of the art in science for three centuries—and to introduce a new paradigm based on computation and on the exploration of the computational universe of possible programs. And already in A New Kind of Science one can see that there’s immense richness to what can be done with this new paradigm.

There’s a new abstract basic science—that I now call ruliology—that’s concerned with studying the detailed properties of systems with simple rules. There’s a vast new source of “raw material” to “mine” from the computational universe, both for making models of things and for developing technology. And there are new, computational ways to think about fundamental features of how systems in nature and elsewhere work.

But what’s now becoming clear is that there’s actually something still bigger, still more overarching that the paradigm of A New Kind of Science lays the foundations for. In a sense, A New Kind of Science defines how one can use computation to think about things. But what we’re now realizing is that actually computation is not just a way to think about things: it is at a very fundamental level what everything actually is.

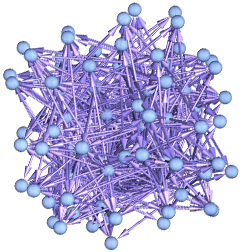

One can see this as a kind of ultimate limit of A New Kind of Science. What we call the ruliad is the entangled limit of all possible computations. And what we, for example, experience as physical reality is in effect just our particular sampling of the ruliad. And it’s the ideas of A New Kind of Science—and particularly things like the Principle of Computational Equivalence—that lay the foundations for understanding how this works.

When I wrote A New Kind of Science I discussed the possibility that there might be a way to find a fundamental model of physics based on simple programs. And from that seed has now come the Wolfram Physics Project, which, with its broad connections to existing mathematical physics, now seems to show that, yes, it’s really true that our physical universe is “computational all the way down”.

But there’s more. It’s not just that at the lowest level there’s some specific rule operating on a vast network of atoms of space. It’s that underneath everything is all possible computation, encapsulated in the single unique construct that is the ruliad. And what determines our experience—and the science we use to summarize it—is what characteristics we as observers have in sampling the ruliad.

There is a tower of ideas that relate to fundamental questions about the nature of existence, and the foundations not only of physics, but also of mathematics, computer science and a host of other fields. And these ideas build crucially on the paradigm of A New Kind of Science. But they need something else as well: what I now call the multicomputational paradigm. There were hints of it in A New Kind of Science when I discussed multiway systems. But it has only been within the past couple of years that this whole new paradigm has begun to come into focus. In A New Kind of Science I explored some of the remarkable things that individual computations out in the computational universe can do. What the multicomputational paradigm now does is to consider the aggregate of multiple computations—and in the end the entangled limit of all possible computations, the ruliad.

The Principle of Computational Equivalence is in many ways the intellectual culmination of A New Kind of Science—and it has many deep consequences. And one of them is the idea—and uniqueness—of the ruliad. The Principle of Computational Equivalence provides a very general statement about what all possible computational systems do. What the ruliad then does is to pull together the behaviors and relationships of all these systems into a single object that is, in effect, an ultimate representation of everything computational, and indeed in a certain sense simply of everything.

The Intellectual Journey: From Physics to Physics, and Beyond

The publication of A New Kind of Science 20 years ago was for me already the culmination of an intellectual journey that had begun more than 25 years earlier. I had started in theoretical physics as a teenager in the 1970s. And stimulated by my needs in physics, I had then built my first computational language. A couple of years later I returned to basic science, now interested in some very fundamental questions. And from my blend of experience in physics and computing I was led to start trying to formulate things in terms of computation, and computational experiments. And I soon discovered the remarkable fact that in the computational universe, even very simple programs can generate immensely complex behavior.

For several years I studied the basic science of the particular class of simple programs known as cellular automata—and the things I saw led me to identify some important general phenomena, most notably computational irreducibility. Then in 1986—having “answered most of the obvious questions I could see”—I left basic science again, and for five years concentrated on creating Mathematica and what’s now the Wolfram Language. But in 1991 I took the tools I’d built, and again immersed myself in basic science. The decade that followed brought a long string of exciting and unexpected discoveries about the computational universe and its implications—leading finally in 2002 to the publication of A New Kind of Science.
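As a minimal sketch of the kind of system described here, the Python below runs the elementary cellular automaton Rule 30 from a single black cell. The function names, the grid width and the wraparound boundary are choices made for this illustration, not anything specified in the text; the point is only that a rule with eight cases, applied step by step, already produces an intricate pattern.

```python
def rule_step(cells, rule=30):
    """Apply one step of an elementary cellular automaton.

    Each new cell depends on its (left, center, right) neighborhood; the
    neighborhood is read as a 3-bit number that selects a bit of `rule`.
    The row wraps around at the edges (an assumption of this sketch).
    """
    n = len(cells)
    out = []
    for i in range(n):
        left, center, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (center << 1) | right
        out.append((rule >> index) & 1)
    return out


def run(width=31, steps=15, rule=30):
    """Evolve from a single black cell and return the whole spacetime history."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = rule_step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Running the script prints the familiar triangular Rule 30 pattern; changing the `rule` parameter to another number from 0 to 255 explores other elementary cellular automata.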

In many ways, A New Kind of Science is a very complete book—that in its 1280 pages does well at “answering all the obvious questions”, save, notably, for some about the “application area” of fundamental physics. For a couple of years after the book was published, I continued to explore some of these remaining questions. But pretty soon I was swept up in the building of Wolfram|Alpha and then the Wolfram Language, and in all the complicated and often deep questions involved in for the first time creating a full-scale computational language. And so for nearly 17 years I did almost no basic science.

The ideas of A New Kind of Science nevertheless continued to exert a deep influence—and I came to see my decades of work on computational language as ultimately being about creating a bridge between the vast capabilities of the computational universe revealed by A New Kind of Science, and the specific kinds of ways we humans are able to think about things. This point of view led me to all kinds of important conclusions about the role of computation and its implications for the future. But through all this I kept on thinking that one day I should look at physics again. And finally in 2019, stimulated by a small technical breakthrough, as well as enthusiasm from physicists of a new generation, I decided it was time to try diving into physics again.

My practical tools had developed a lot since I’d worked on A New Kind of Science. And—as I have found so often—the passage of years had given me greater clarity and perspective about what I’d discovered in A New Kind of Science. And it turned out we were rather quickly able to make spectacular progress. A New Kind of Science had introduced definite ideas about how fundamental physics might work. Now we could see that these ideas were very much on the right track, but on their own they did not go far enough. Something else was needed.

In A New Kind of Science I’d introduced what I called multiway systems, but I’d treated them as a kind of sideshow. Now—particularly tipped off by quantum mechanics—we realized that multiway systems were not a sideshow but were actually in a sense the main event. They had come out of the computational paradigm of A New Kind of Science, but they were really harbingers of a new paradigm: the multicomputational paradigm.

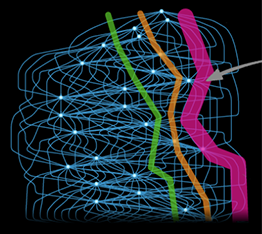

In A New Kind of Science, I’d already talked about space—and everything else in the universe—ultimately being made up of a network of discrete elements that I’d now call “atoms of space”. And I’d talked about time being associated with the inexorable progressive application of computationally irreducible rules. But now we were thinking not just of a single thread of computation, but instead of a whole multiway system of branching and merging threads—representing in effect a multicomputational history for the universe.

In A New Kind of Science I’d devoted a whole chapter to “Processes of Perception and Analysis”, recognizing the importance of the observer in computational systems. But with multicomputation there was yet more focus on this, and on how a physical observer knits things together to form a coherent thread of experience. Indeed, it became clear that it’s certain features of the observer that ultimately determine the laws of physics we perceive. And in particular it seems that as soon as we—somehow reflecting core features of our conscious experience—believe that we exist persistently through time, but are computationally bounded, then it follows that we will attribute to the universe the central known laws of spacetime and quantum mechanics.

At the level of atoms of space and individual threads of history everything is full of computational irreducibility. But the key point is that observers like us don’t experience this; instead we sample certain computationally reducible features—that we can describe in terms of meaningful “laws of physics”.

I never expected it would be so easy, but by early 2020—only a few months into our Wolfram Physics Project—we seemed to have successfully identified how the “machine code” of our universe must work. A New Kind of Science had established that computation was a powerful way of thinking about things. But now it was becoming clear that actually our whole universe is in a sense “computational all the way down”.

But where did this leave the traditional mathematical view? To my surprise, far from being at odds it seemed as if our computation-all-the-way-down model of physics perfectly plugged into a great many of the more abstract existing mathematical approaches. Mediated by multicomputation, the concepts of A New Kind of Science—which began as an effort to go beyond mathematics—seemed now to be finding a kind of ultimate convergence with mathematics.

But despite our success in working out the structure of the “machine code” for our universe, a major mystery remained. Let’s say we could find a particular rule that could generate everything in our universe. Then we’d have to ask “Why this rule, and not another?” And if “our rule” was simple, how come we’d “lucked out” like that? Ever since I was working on A New Kind of Science I’d wondered about this.

And just as we were getting ready to announce the Physics Project in May 2020 the answer began to emerge. It came out of the multicomputational paradigm. And in a sense it was an ultimate version of it. Instead of imagining that the universe follows some particular rule—albeit applying it multicomputationally in all possible ways—what if the universe follows all possible rules?

And then we realized: this is something much more general than physics. And in a sense it’s the ultimate computational construct. It’s what one gets if one takes all the programs in the computational universe that I studied in A New Kind of Science and runs them together—as a single, giant, multicomputational system. It’s a single, unique object that I call the ruliad, formed as the entangled limit of all possible computations.

There’s no choice about the ruliad. Everything about it is abstractly necessary—emerging as it does just from the formal concept of computation. A New Kind of Science developed the abstraction of thinking about things in terms of computation. The ruliad takes this to its ultimate limit—capturing the whole entangled structure of all possible computations—and defining an object that in some sense describes everything.

Once we believe—as the Principle of Computational Equivalence implies—that things like our universe are computational, it then inevitably follows that they are described by the ruliad. But the observer has a crucial role here. Because while as a matter of theoretical science we can discuss the whole ruliad, our experience of it inevitably has to be based on sampling it according to our actual capabilities of perception.

In the end, it’s deeply analogous to something that—as I mention in A New Kind of Science—first got me interested in fundamental questions in science 50 years ago: the Second Law of thermodynamics. The molecules in a gas move around and interact according to certain rules. But as A New Kind of Science argues, one can think about this as a computational process, which can show computational irreducibility. If one didn’t worry about the “mechanics” of the observer, one might imagine that one could readily “see through” this computational irreducibility, to the detailed behavior of the molecules underneath. But the point is that a realistic, computationally bounded observer—like us—will be forced by computational irreducibility to perceive only certain “coarse-grained” aspects of what’s going on, and so will consider the gas to be behaving in a standard large-scale thermodynamic way.
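A toy sketch of the coarse-graining idea, under loudly stated assumptions: the “molecules” here are particles hopping on a ring, with pseudorandom hops standing in for detailed deterministic collisions, and the “observer” records only the fraction of particles in the left half of the ring. The function name and all parameters are hypothetical. However complicated the individual trajectories become, that single coarse-grained number relaxes toward 1/2, a toy analog of a gas settling into equilibrium.

```python
import random


def simulate(num_particles=500, sites=40, steps=1000, seed=0):
    """Toy 'gas' on a ring of sites, observed only through one coarse-grained number.

    Assumption of this sketch: pseudorandom +1/-1 hops stand in for the
    detailed molecular dynamics discussed in the text.
    """
    rng = random.Random(seed)
    half = sites // 2

    def left_fraction(positions):
        # The computationally bounded observer sees only this aggregate.
        return sum(1 for p in positions if p < half) / len(positions)

    # Start every particle somewhere in the left half of the ring.
    positions = [rng.randrange(half) for _ in range(num_particles)]
    history = [left_fraction(positions)]
    for _ in range(steps):
        positions = [(p + rng.choice((-1, 1))) % sites for p in positions]
        history.append(left_fraction(positions))
    return history


if __name__ == "__main__":
    h = simulate()
    print(h[0], h[100], h[-1])
```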

And so it is, at a grander level, with the ruliad. Observers like us can only perceive certain aspects of what’s going on in the ruliad, and a key result of our Physics Project is that with only quite loose constraints on what we’re like as observers, it’s inevitable that we will perceive our universe to operate according to particular precise known laws of physics. And indeed the attributes that we associate with “consciousness” seem closely tied to what’s needed to get the features of spacetime and quantum mechanics that we know from physics. In A New Kind of Science one of the conclusions is that the Principle of Computational Equivalence implies a fundamental equivalence between systems (like us) that we consider “intelligent” or “conscious”, and systems that we consider “merely computational”.

But what’s now become clear in the multicomputational paradigm is that there’s more to this story. It’s not (as people have often assumed) that there’s something more powerful about “conscious observers” like us. Actually, it’s rather the opposite: that in order to have consistent “conscious experience” we have to have certain limitations (in particular, computational boundedness, and a belief of persistence in time), and these limitations are what make us “see the ruliad” in the way that corresponds to our usual view of the physical world.

The concept of the ruliad is a powerful one, with implications that significantly transcend the traditional boundaries of science. For example, last year I realized that thinking in terms of the ruliad potentially provides a meaningful answer to the ultimate question of why our universe exists. The answer, I posit, is that the ruliad—as a “purely formal” object—“necessarily exists”. And what we perceive as “our universe” is then just the “slice” that corresponds to what we can “see” from the particular place in “rulial space” at which we happen to be. There has to be “something there”—and the remarkable fact is that for an observer with our general characteristics, that something has to have features that are like our usual laws of physics.

In A New Kind of Science I discussed how the Principle of Computational Equivalence implies that almost any system can be thought of as being “like a mind” (as in, “the weather has a mind of its own”). But the issue—that for example is of central importance in talking about extraterrestrial intelligence—is how similar to us that mind is. And now with the ruliad we have a more definite way to discuss this. Different minds (even different human ones) can be thought of as being at different places in the ruliad, and thus in effect attributing different rules to the universe. The Principle of Computational Equivalence implies that there must ultimately be a way to translate (or, in effect, move) from one place to another. But the question is how far it is.

Our senses and measuring devices—together with our general paradigms for thinking about things—define the basic area over which our understanding extends, and for which we can readily produce a high-level narrative description of what’s going on. And in the past we might have assumed that this was all we’d ever need to reach with whatever science we built. But what A New Kind of Science—and now the ruliad—show us is that there’s much more out there. There’s a whole computational universe of possible programs—many of which behave in ways that are far from our current domain of high-level understanding.

Traditional science we can view as operating by gradually expanding our domain of understanding. But in a sense the key methodological idea that launched A New Kind of Science is to do computational experiments, which in effect just “jump without prior understanding” out into the wilds of the computational universe. And that’s in the end why all that ruliology in A New Kind of Science at first looks so alien: we’ve effectively jumped quite far from our familiar place in rulial space, so there’s no reason to expect we’ll recognize anything. And in effect, as the title of the book says, we need to be doing a new kind of science.

In A New Kind of Science, an important part of the story has to do with the phenomenon of computational irreducibility, and the way in which it prevents any computationally bounded observer (like us) from being able to “reduce” the behavior of systems, and thereby perceive them as anything other than complex. But now that we’re thinking not just about computation, but about multicomputation, other attributes of other observers start to be important too. And with the ruliad ultimately representing everything, the question of what will be perceived in any particular case devolves into one about the characteristics of observers.

In A New Kind of Science I give examples of how the same kinds of simple programs (such as cellular automata) can provide good “metamodels” for a variety of kinds of systems in nature and elsewhere, that show up in very different areas of science. But one feature of different areas of science is that they’re often concerned with different kinds of questions. And with the focus on the characteristics of the observer this is something we get to capture—and we get to discuss, for example, what the chemical observer, or the economic observer, might be like, and how that affects their perception of what’s ultimately in the ruliad.

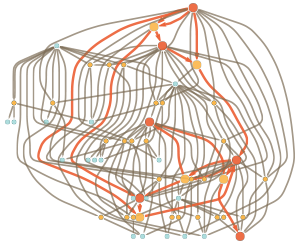

In Chapter 12 of A New Kind of Science there’s a long section on “Implications for Mathematics and Its Foundations”, which begins with the observation that just as many models in science seem to be able to start from simple rules, mathematics is traditionally specifically set up to start from simple axioms. I then analyzed how multiway systems could be thought of as defining possible derivations (or proofs) of new mathematical theorems from axioms or other theorems—and I discussed how the difficulty of doing mathematics can be thought of as a reflection of computational irreducibility.
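The idea of a multiway system as a space of possible derivations can be sketched with string rewriting. In the Python below, the two rules are hypothetical toy “axioms” chosen for illustration, not examples taken from the book. Every way of applying every rule at every position gives one branch of the multiway system, and a breadth-first search through those branches finds a shortest derivation (in effect a “proof”) of a target string, or gives up after a fixed number of steps.

```python
from collections import deque

# Hypothetical toy rewrite rules standing in for axioms.
RULES = [("A", "AB"), ("B", "A")]


def successors(s, rules=RULES):
    """All strings reachable in one multiway step: any rule at any matching position."""
    out = []
    for lhs, rhs in rules:
        start = s.find(lhs)
        while start != -1:
            out.append(s[:start] + rhs + s[start + len(lhs):])
            start = s.find(lhs, start + 1)
    return out


def derive(axiom, target, max_steps=10, rules=RULES):
    """Breadth-first search for a shortest derivation of `target` from `axiom`.

    Returns the list of intermediate strings, or None if no derivation of
    at most `max_steps` rewrites exists.
    """
    parent = {axiom: None}
    queue = deque([(axiom, 0)])
    while queue:
        state, depth = queue.popleft()
        if state == target:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        if depth == max_steps:
            continue
        for nxt in successors(state, rules):
            if nxt not in parent:
                parent[nxt] = state
                queue.append((nxt, depth + 1))
    return None


if __name__ == "__main__":
    print(derive("A", "ABA"))
```

Even in this tiny system the target is reachable along more than one route of the same length, which is a small-scale version of a theorem having several distinct proofs.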

But informed by our Physics Project I realized that there’s much more to say about the foundations of mathematics—and this has led to our recently launched Metamathematics Project. At the core of this project is the idea that mathematics, like physics, is ultimately just a sampling of the ruliad. And just as the ruliad defines the lowest-level machine code of physics, so does it also for mathematics.

The traditional axiomatic level of mathematics (with its built-in notions of variables and operators and so on) is already higher level than the “raw ruliad”. And a crucial observation is that just as physical observers operate at a level far above things like the atoms of space, so “mathematical observers” mostly operate at a level far above the raw ruliad, or even the “assembly code” of axioms. In an analogy with gases, the ruliad (or even an axiom system) describes things at the “molecular dynamics” level, but “mathematical observers” operate more at the “fluid dynamics” level.

And the result of this is what I call the physicalization of metamathematics: the realization that our “perception” of mathematics is like our perception of physics. And that, for example, the very possibility of consistently doing higher-level mathematics where we don’t always have to drop down to the level of axioms or the raw ruliad has the same origin as the fact that “observers like us” typically view space as something continuous, rather than something made up of lots of atoms of space.

In A New Kind of Science I considered it a mystery why phenomena like undecidability are not more common in typical pure mathematics. But now our Metamathematics Project provides an answer that’s based on the character of mathematical observers.

My stated goal at the beginning of A New Kind of Science was to go beyond the mathematical paradigm, and that’s exactly what was achieved. But now there’s almost a full circle—because we see that building on A New Kind of Science and the computational paradigm we reach the multicomputational paradigm and the ruliad, and then we realize that mathematics, like physics, is part of the ruliad. Or, put another way, mathematics, like physics—and like everything else—is “made of computation”, and all computation is in the ruliad.

And that means that insofar as we consider there to be physical reality, so also we must consider there to be “mathematical reality”. Physical reality arises from the sampling of the ruliad by physical observers; so similarly mathematical reality must arise from the sampling of the ruliad by mathematical observers. Or, in other words, if we believe that the physical world exists, so we must, essentially like Plato, also believe that mathematics exists, and that there is an underlying reality to mathematics.

All of these ideas rest on what was achieved in A New Kind of Science but now go significantly beyond it. In an “Epilog” that I eventually cut from the final version of A New Kind of Science I speculated that “major new directions” might be built in 15–30 years. And when I wrote that, I wasn’t really expecting that I would be the one to be central in doing that. And indeed I suspect that had I simply continued the direct path in basic science defined by my work on A New Kind of Science, it wouldn’t have been me.

It’s not something I’ve explicitly planned, but at this point I can look back on my life so far and see it as a repeated alternation between technology and basic science. Each builds on the other, giving me both ideas and tools—and creating in the end a taller and taller intellectual tower. But what’s crucial is that every alternation is in many ways a fresh start, where I’m able to use what I’ve done before, but have a chance to reexamine everything from a new perspective. And so it has been in the past few years with A New Kind of Science: having returned to basic science after 17 years away, it’s been possible to make remarkably rapid and dramatic progress that’s taken things to a new and wholly unexpected level.

The Arrival of a Fourth Scientific Paradigm

In the course of intellectual history, there’ve been very few fundamentally different paradigms introduced for theoretical science. The first is what one might call the “structural paradigm”, in which one’s basically just concerned with what things are made of. And beginning in antiquity—and continuing for two millennia—this was pretty much the only paradigm on offer. But in the 1600s there was, as I described it in the opening sentence of A New Kind of Science, a “dramatic new idea”—that one could describe not just how things are, but also what they can do, in terms of mathematical equations.

And for three centuries this “mathematical paradigm” defined the state of the art for theoretical science. But as I went on to explain in the opening paragraph of A New Kind of Science, my goal was to develop a new “computational paradigm” that would describe things not in terms of mathematical equations but instead in terms of computational rules or programs. There’d been precursors to this in my own work in the 1980s, but despite the practical use of computers in applying the mathematical paradigm, there wasn’t much of a concept of describing things, say in nature, in a fundamentally computational way.

One feature of a mathematical equation is that it aims to encapsulate “in one fell swoop” the whole behavior of a system. Solve the equation and you’ll know everything about what the system will do. But in the computational paradigm it’s a different story. The underlying computational rules for a system in principle determine what it will do. But to actually find out what it does, you have to run those rules—which is often a computationally irreducible process.

Put another way: in the structural paradigm, one doesn’t talk about time at all. In the mathematical paradigm, time is there, but it’s basically just a parameter, that if you can solve the equations you can set to whatever value you want. In the computational paradigm, however, time is something more fundamental: it’s associated with the actual irreducible progression of computation in a system.
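To make the contrast concrete, here is a hedged toy comparison. Exponential growth stands in, as an assumption of this sketch, for a system whose equation can be solved: its value at time t comes from a formula in which t is just a parameter, so one can “jump ahead” directly. For the center cell of Rule 30, by contrast, no shortcut formula is known, so the sketch simply runs all t steps of the rule.

```python
def closed_form(x0, r, t):
    # Mathematical paradigm: time t is just a parameter plugged into a formula.
    return x0 * r ** t


def iterate(x0, r, t):
    # The same system advanced one step at a time (agrees with closed_form).
    x = x0
    for _ in range(t):
        x *= r
    return x


def rule30_center(t):
    # Computational paradigm: to learn the center cell of Rule 30 at step t,
    # we run every one of the t steps; no shortcut formula is known.
    width = 2 * t + 3          # wide enough that the pattern never wraps around
    cells = [0] * width
    cells[width // 2] = 1      # single black cell in the middle
    for _ in range(t):
        cells = [
            (30 >> ((cells[i - 1] << 2) | (cells[i] << 1) | cells[(i + 1) % width])) & 1
            for i in range(width)
        ]
    return cells[width // 2]


if __name__ == "__main__":
    print(closed_form(3, 2, 10), iterate(3, 2, 10))
    print([rule30_center(t) for t in range(10)])
```

The cost of `closed_form` barely depends on t, while the cost of `rule30_center` grows roughly with the square of t, because each of the t steps updates a row about 2t cells wide.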

It’s an important distinction that cuts to the core of theoretical science. Heavily influenced by the mathematical paradigm, it’s often been assumed that science is fundamentally about being able to make predictions, or in a sense having a model that can “outrun” the system you’re studying, and say what it’s going to do with much less computational effort than the system itself.

But computational irreducibility implies that there’s a fundamental limit to this. There are systems whose behavior is in effect “too complex” for us to ever be able to “find a formula for it”. And this is not something we could, for example, resolve just by increasing our mathematical sophistication: it is a fundamental limit that arises from the whole structure of the computational paradigm. In effect, from deep inside science we’re learning that there are fundamental limitations on what science can achieve.

But as I mentioned in A New Kind of Science, computational irreducibility has an upside as well. If everything were computationally reducible, the passage of time wouldn’t in any fundamental sense add up to anything; we’d always be able to “jump ahead” and see what the outcome of anything would be without going through the steps, and we’d never have something we could reasonably experience as free will.

In practical computing it’s pretty common to want to go straight from “question” to “answer”, and not be interested in “what happened inside”. But in A New Kind of Science there is in a sense an immediate emphasis on “what happens inside”. I don’t just show the initial input and final output for a cellular automaton. I show its whole “spacetime” history. And now that we have a computational theory of fundamental physics we can see that all the richness of our physical experience is contained in the “process inside”. We don’t just want to know the endpoint of the universe; we want to live the ongoing computational process that corresponds to our experience of the passage of time.

But, OK, so in A New Kind of Science we reached what we might identify as the third major paradigm for theoretical science. But the exciting—and surprising—thing is that inspired by our Physics Project we can now see a fourth paradigm: the multicomputational paradigm. And while the computational paradigm involves considering the progression of particular computations, the multicomputational paradigm involves considering the entangled progression of many computations. The computational paradigm involves a single thread of time. The multicomputational paradigm involves multiple threads of time that branch and merge.

What in a sense forced us into the multicomputational paradigm was thinking about quantum mechanics in our Physics Project, and realizing that multicomputation was inevitable in our models. But the idea of multicomputation is vastly more general, and in fact immediately applies to any system where at any given step multiple things can happen. In A New Kind of Science I studied many kinds of computational systems—like cellular automata and Turing machines—where one definite thing happens at each step. I looked a little at multiway systems—primarily ones based on string rewriting. But now in general in the multicomputational paradigm one is interested in studying multiway systems of all kinds. They can be based on simple iterations, say involving numbers, in which multiple functions can be applied at each step. They can be based on systems like games where there are multiple moves at each step. And they can be based on a whole range of systems in nature, technology and elsewhere where there are multiple “asynchronous” choices of events that can occur.
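A minimal sketch of a multiway system built from simple iterations involving numbers: at every step the two hypothetical functions n → n + 1 and n → 2n are both applied to every state. Identical states are identified, so threads can merge as well as branch, and `merge_points` reports the states reached along more than one path. The choice of functions, the names and the breadth-first construction are assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical pair of functions that can both be applied at every step.
RULES = {
    "add1": lambda n: n + 1,
    "double": lambda n: 2 * n,
}


def multiway_graph(initial, steps, rules=RULES):
    """Breadth-first multiway evolution; returns all states and labeled edges."""
    seen = {initial}
    frontier = [initial]
    edges = set()  # (source, rule_name, target)
    for _ in range(steps):
        new_frontier = []
        for state in frontier:
            for name, f in rules.items():
                target = f(state)
                edges.add((state, name, target))
                if target not in seen:  # identical states merge into one node
                    seen.add(target)
                    new_frontier.append(target)
        frontier = new_frontier
    return seen, edges


def merge_points(edges):
    """States with more than one distinct incoming (source, rule) edge."""
    incoming = defaultdict(set)
    for src, name, tgt in edges:
        incoming[tgt].add((src, name))
    return {tgt: sorted(srcs) for tgt, srcs in incoming.items() if len(srcs) > 1}


if __name__ == "__main__":
    states, edges = multiway_graph(1, 3)
    print(sorted(states))
    print(merge_points(edges))
```

Starting from 1, the two rules immediately reconverge on 2, and 4 is reached both by doubling 2 and by adding 1 to 3: a small instance of threads of history that branch and then merge.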

Given the basic description of multicomputational systems, one might at first assume that whatever difficulties there are in deducing the behavior of computational systems, they would only be greater for multicomputational systems. But the crucial point is that whereas with a purely computational system (like a cellular automaton) it’s perfectly reasonable to imagine “experiencing” its whole evolution—say just by seeing a picture of it, the same is not true of a multicomputational system. Because for observers like us, who fundamentally experience time in a single thread, we have no choice but to somehow “sample” or “coarse grain” a multicomputational system if we are to reduce its behavior to something we can “experience”.

And there’s then a remarkable formal fact: if one has a system that shows fundamental computational irreducibility, then computationally bounded “single-thread-of-time” observers inevitably perceive certain effective behavior in the system that follows something like the typical laws of physics. Once again we can make an analogy with gases made from large numbers of molecules. Large-scale (computationally bounded) observers will essentially inevitably perceive gases to follow, say, the standard gas laws, quite independent of the detailed properties of individual molecules.

In other words, the interplay between an “observer like us” and a multicomputational system will effectively select out a slice of computational reducibility from the underlying computational irreducibility. And although I didn’t see this coming, it’s in the end fairly obvious that something like this has to happen. The Principle of Computational Equivalence makes it basically inevitable that the underlying processes in the universe will be computationally irreducible. But somehow the particular features of the universe that we perceive and care about have to be ones that have enough computational reducibility that we can, for example, make consistent decisions about what to do, and we’re not just continually confronted by irreducible unpredictability.

So how general can we expect this picture of multicomputation to be, with its connection to the kinds of things we’ve seen in physics? It seems to be extremely general, and to provide a true fourth paradigm for theoretical science.

There are many kinds of systems for which the multicomputational paradigm seems to be immediately relevant. Beyond physics and metamathematics, there seems to be near-term promise in chemistry, molecular biology, evolutionary biology, neuroscience, immunology, linguistics, economics, machine learning, distributed computing and more. In each case there are underlying low-level elements (such as molecules) that interact through some kind of events (say collisions or reactions). And then there’s a big question of what the relevant observer is like.

In chemistry, for example, the observer could just measure the overall concentration of some kind of molecule, coarse-graining together all the individual instances of those molecules. Or the observer could be sensitive, for example, to detailed causal relationships between collisions among molecules. In traditional chemistry, things like this generally aren’t “observed”. But in biology (for example in connection with membranes), or in molecular computing, they may be crucial.

When I began the project that became A New Kind of Science, the central question I wanted to answer was why we see so much complexity in so many kinds of systems. And with the computational paradigm and the ubiquity of computational irreducibility we had an answer, which in a sense also told us why it was difficult to make certain kinds of progress in a whole range of areas.

But now we’ve got a new paradigm, the multicomputational paradigm. And the big surprise is that through the intermediation of the observer we can tap into computational reducibility, and potentially find “physics-like” laws for all sorts of fields. This may not work for the questions that have traditionally been asked in these fields. But the point is that with the “right kind of observer” there’s computational reducibility to be found. And that computational reducibility may be something we can tap into, whether for understanding or for using some kind of system in technology.

It can all be seen as starting with the ruliad, and involving almost philosophical questions of what one can call “observer theory”. But in the end it gives us very practical ideas and methods that I think have the potential to lead to unexpectedly dramatic progress in a remarkable range of fields.

I knew that A New Kind of Science would have practical applications, particularly in modeling, in technology and in producing creative material. And indeed it has. But for our Physics Project, applications seemed much further away, perhaps centuries away. The great surprise has been that through the multicomputational paradigm it seems as if there are going to be some quite immediate and very practical applications of the Physics Project.

In a sense the reason for this is that through the intermediation of multicomputation we see that many kinds of systems share the same underlying “metastructure”. And this means that as soon as there are things to say about one kind of system, these can be applied to other systems. And in particular the great successes of physics can be applied to a whole range of systems that share the same multicomputational metastructure.

An immediate example is in practical computing, and particularly in the Wolfram Language. It’s something of a personal irony that the Wolfram Language is based on transformation rules for symbolic expressions, a structure very similar to what turns out to be involved in the Physics Project. But there’s a crucial difference: in the usual case of the Wolfram Language, everything works in a purely computational way, with a particular transformation being done at each step. Now, though, there’s the potential to generalize that to the multicomputational case, and in effect to trace the multiway system of every possible transformation.
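To make the contrast concrete, here is a minimal Python sketch of a toy string rewriting system. It is not Wolfram Language code, and it is far simpler than the hypergraph rewriting used in the Physics Project; the rules `A → AB` and `B → A` and the function names are hypothetical choices for illustration. `single_path` mimics ordinary evaluation by following just one transformation per step, while `multiway_states` keeps every state reachable by any sequence of transformations.

```python
from typing import List, Set, Tuple

# Hypothetical toy rules chosen for illustration: (left-hand side, right-hand side).
RULES: List[Tuple[str, str]] = [("A", "AB"), ("B", "A")]

def successors(s: str, rules: List[Tuple[str, str]]) -> List[str]:
    """Every string obtainable by applying one rule at one matching position."""
    out = []
    for lhs, rhs in rules:
        start = s.find(lhs)
        while start != -1:
            out.append(s[:start] + rhs + s[start + len(lhs):])
            start = s.find(lhs, start + 1)
    return out

def single_path(s: str, rules: List[Tuple[str, str]], steps: int) -> List[str]:
    """Ordinary evaluation: follow only the first available transformation at each step."""
    path = [s]
    for _ in range(steps):
        options = successors(path[-1], rules)
        if not options:
            break
        path.append(options[0])
    return path

def multiway_states(s: str, rules: List[Tuple[str, str]], steps: int) -> List[Set[str]]:
    """Multiway evolution: keep every distinct state reachable after each number of steps."""
    layers = [{s}]
    for _ in range(steps):
        layers.append({t for u in layers[-1] for t in successors(u, rules)})
    return layers
```

Starting from `"A"`, `single_path` gives `A, AB, ABB, ABBB`, which is just one thread through the multiway structure. The third multiway layer, `{"ABBB", "AAB", "ABA"}`, has only three distinct states even though five individual rule applications lead to it, because separate branches arrive at the same strings.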

It’s not easy to pick out of that structure things that we can readily understand. But there are important lessons from physics for this. And as we build out the multicomputational capabilities of the Wolfram Language I fully expect that the “notational clarity” it will bring will help us to formulate much more in terms of the multicomputational paradigm.

I built the Wolfram Language as a tool that would help me explore the computational paradigm, and from that paradigm emerged principles like the Principle of Computational Equivalence, which in turn led me to see the possibility of something like Wolfram|Alpha. Now, from the latest basic science built on the foundations of A New Kind of Science, together with the practical tooling of the Wolfram Language, it’s once again becoming possible to see how to make conceptual advances that can drive technology, which will in turn let us make (likely dramatic) progress in basic science.

Harvesting Seeds from A New Kind of Science

A New Kind of Science is full of intellectual seeds. And in the past few years—having now returned to basic science—I’ve been harvesting a few of those seeds. The Physics Project and the Metamathematics Project are two major results. But there’s been quite a bit more. And in fact it’s rather remarkable how many things that were barely more than footnotes in A New Kind of Science have turned into major projects, with important results.

Back in 2018—a year before beginning the Physics Project—I returned, for example, to what’s become known as the Wolfram Axiom: the axiom that I found in A New Kind of Science that is the very simplest possible axiom for Boolean algebra. But my focus now was not so much on the axiom itself as on the automated process of proving its correctness, and the effort to see the relation between “pure computation” and what one might consider a human-absorbable “narrative proof”.

Computational irreducibility has appeared many times, notably in my efforts to understand AI ethics and the implications of computational contracts. I’ve no doubt that in the years to come, the concept of computational irreducibility will become increasingly important in everyday thinking, a bit like how concepts such as energy and momentum from the mathematical paradigm have become important. And in 2019, for example, computational irreducibility made an appearance in government affairs, as a result of my testifying about its implications for legislation on AI selection of content on the internet.

In A New Kind of Science I explored many specific systems about which one can ask all sorts of questions. And one might think that after 20 years “all the obvious questions” would have been answered. But they have not. And in a sense the fact that they have not is a direct reflection of the ubiquity of computational irreducibility. But it’s a fundamental feature that whenever there’s computational irreducibility, there must also be pockets of computational reducibility: in other words, the very existence of computational irreducibility implies an infinite frontier of potential progress.

Back in 2007, we’d had great success with our Turing Machine Prize, and the Turing machine that I’d suspected was the very simplest possible universal Turing machine was indeed proved universal—providing another piece of evidence for the Principle of Computational Equivalence. And in a sense there’s a general question that’s raised by A New Kind of Science about where the threshold of universality—or computational equivalence—really is in different kinds of systems.

But there are simpler-to-define questions as well. And ever since I first studied rule 30 in 1984 I’d wondered about many questions related to it. And in October 2019 I decided to launch the Rule 30 Prizes, defining three specific easy-to-state questions about rule 30. So far I don’t know of progress on them. And for all I know they’ll be open problems for centuries. From the point of view of the ruliad we can think of them as distant explorations in rulial space, and the question of when they can be answered is like the question of when we’ll have the technology to get to some distant place in physical space.
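The Rule 30 Prize questions concern the center column of rule 30: whether it always remains non-periodic, whether each color of cell occurs on average equally often, and whether computing its nth cell requires at least O(n) computational effort. Generating the column itself is easy. A minimal Python sketch, using the standard rule 30 update (new cell = left XOR (center OR right)) starting from a single black cell; the function name is hypothetical.

```python
def rule30_center_column(steps: int) -> list[int]:
    """Center-column values of rule 30 for steps 0..steps, starting from one black cell."""
    width = 2 * steps + 1          # the pattern grows by at most one cell per side per step
    cells = [0] * width
    cells[steps] = 1               # single black (1) cell in the middle
    column = [cells[steps]]
    for _ in range(steps):
        # Cells beyond the edges are treated as 0 (white); because the row is wide
        # enough, this matches an infinite white background.
        cells = [
            (cells[i - 1] if i > 0 else 0)
            ^ (cells[i] | (cells[i + 1] if i < width - 1 else 0))
            for i in range(width)
        ]
        column.append(cells[steps])
    return column

print(rule30_center_column(15))
```

Running it for many steps and tallying 1s against 0s gives empirical evidence bearing on the second question, but of course no proof; and the loop does work proportional to the square of the number of steps, which is exactly the kind of cost the third question asks whether one can ever fundamentally avoid.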

After we launched the Physics Project in April 2020, it rapidly became clear that its ideas could also be applied to metamathematics. And it even seemed as if it might be easier to make relevant “real-world” observations in metamathematics than in physics. The seed for this was a note in A New Kind of Science entitled “Empirical Metamathematics”. That note contained one picture of the theorem-dependency graph of Euclid’s Elements, which in the summer of 2020 expanded into a 70-page study. And in my recent “Physicalization of Metamathematics” there’s a continuation of that, beginning to map out empirical metamathematical space as explored in the practice of mathematics, with the idea that multicomputational phenomena that in physics could require technically infeasible particle accelerators or telescopes might actually be within reach.

In addition to being the year we launched our Physics Project, 2020 was also the 100th anniversary of combinators, the first concrete formalization of universal computation. In A New Kind of Science I devoted a few pages and some notes to combinators, but I decided to do a deep dive, using what I’d learned both from A New Kind of Science and from the Physics Project to take a new look at them. Among other things, the result was another application of multicomputation, as well as the realization that even though the S, K combinators from 1920 seemed very minimal, it was possible that S alone could also be universal, though with something different from the usual input → output “workflow” of computation.
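For readers who haven’t met combinators, the two rules are `S x y z → x z (y z)` and `K x y → x`. Here is a minimal Python sketch of applying them, assuming terms are nested pairs `(f, a)` meaning “f applied to a”, and choosing leftmost-outermost reduction order (other evaluation strategies exist, and can behave differently). The names `app`, `step` and `normalize` are hypothetical, and variables are plain strings other than `"S"` and `"K"`.

```python
from typing import Tuple, Union

# A term is an atom ("S", "K", or a variable name) or an application pair (f, a).
Term = Union[str, Tuple["Term", "Term"]]

def app(*terms: Term) -> Term:
    """Left-associated application: app(f, a, b) == ((f, a), b)."""
    t = terms[0]
    for a in terms[1:]:
        t = (t, a)
    return t

def step(t: Term) -> Tuple[Term, bool]:
    """Apply one leftmost-outermost S or K reduction, if any; report whether one happened."""
    if isinstance(t, tuple):
        f, a = t
        # K x y -> x
        if isinstance(f, tuple) and f[0] == "K":
            return f[1], True
        # S x y z -> x z (y z)
        if isinstance(f, tuple) and isinstance(f[0], tuple) and f[0][0] == "S":
            x, y, z = f[0][1], f[1], a
            return ((x, z), (y, z)), True
        # No redex at the top: try the function part first, then the argument.
        f2, changed = step(f)
        if changed:
            return (f2, a), True
        a2, changed = step(a)
        if changed:
            return (f, a2), True
    return t, False

def normalize(t: Term, max_steps: int = 1000) -> Term:
    """Reduce until no rule applies, giving up after max_steps."""
    for _ in range(max_steps):
        t, changed = step(t)
        if not changed:
            return t
    raise RuntimeError("no normal form found within max_steps")
```

For example, `S K K` behaves as the identity: `normalize(app("S", "K", "K", "x"))` reduces in two steps to `"x"`. The step limit matters because some combinator expressions never reach a normal form at all.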

In A New Kind of Science a single footnote mentions multiway Turing machines. And early last year I turned this seed into a long and detailed study that provides further foundational examples of multicomputation, and explores the question of just what it means to “do a computation” multicomputationally—something which I believe is highly relevant not only for practical distributed computing but also for things like molecular computing.

In 2021 it was the centenary of Post tag systems, and again I turned a few pages in A New Kind of Science into a long and detailed study. And what’s important about both this and my study of combinators is that they provide foundational examples (much like cellular automata in A New Kind of Science), which even in the past year or so I’ve used multiple times in different projects.
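The classic example from Post’s 1921 work reads the first element of a sequence of 0s and 1s, appends `00` if it was 0 or `1101` if it was 1, and then deletes the first three elements. A minimal Python sketch; the halting convention (stop when fewer than three elements remain) and the function names are assumptions for illustration, and cycle detection simply remembers every word already seen.

```python
from typing import Dict, List, Optional, Tuple

Word = Tuple[int, ...]

# Post's rule: what to append, keyed by the first element of the current word.
POST_RULES: Dict[int, Word] = {0: (0, 0), 1: (1, 1, 0, 1)}

def tag_step(word: Word, deletion: int = 3, rules: Dict[int, Word] = POST_RULES) -> Optional[Word]:
    """One tag-system step, or None if the word is too short (assumed halting convention)."""
    if len(word) < deletion:
        return None
    return word[deletion:] + rules[word[0]]

def evolve(word: Word, max_steps: int = 10_000) -> Tuple[List[Word], str]:
    """Run until halting, revisiting an earlier word, or hitting max_steps."""
    seen = set()
    history: List[Word] = []
    for _ in range(max_steps):
        if word in seen:
            return history, "cycle"
        seen.add(word)
        history.append(word)
        nxt = tag_step(word)
        if nxt is None:
            return history, "halt"
        word = nxt
    return history, "unknown"
```

Starting from `(1, 0, 0)`, the word reaches `(1, 0, 1, 0, 0)` after four steps and then alternates with `(0, 0, 1, 1, 0, 1)` forever. The `"unknown"` outcome is there because, for other initial words, a run can go on for a very long time without obviously settling down.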

In mid-2021, yet another few-page discussion in A New Kind of Science turned into a detailed study of “The Problem of Distributed Consensus”. And once again, this turned out to have a multicomputational angle, at first in understanding the multiway character of possible outcomes, but later with the realization that the formation of consensus is deeply related to the process of measurement and the coarse-graining involved in it—and the fundamental way that observers extract “coherent experiences” from systems.

In A New Kind of Science, there’s a short note about multiway systems based on numbers. And once again, in fall 2021 I expanded on this to produce an extensive study of such systems, as a certain kind of very minimal example of multicomputation, that at least in some cases connects with traditional mathematical ideas.
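A minimal Python illustration of a multiway system whose states are integers. The rule n → {n + 1, 2n} is an illustrative choice, as are the function name and the decision to record, for each state, how many distinct paths reach it; merging of branches shows up as counts greater than 1.

```python
from collections import Counter

def multiway_number_layers(start: int, steps: int) -> list[Counter]:
    """For each step, map every reachable integer to the number of paths reaching it."""
    layers = [Counter({start: 1})]
    for _ in range(steps):
        nxt: Counter = Counter()
        for n, paths in layers[-1].items():
            for m in (n + 1, 2 * n):   # the two branches of the illustrative rule
                nxt[m] += paths
        layers.append(nxt)
    return layers
```

From 1, both branches immediately give 2. By step 5 the 32 paths lead to only 15 distinct integers, with 10 reached in four ways (via 5 → 10 and via 9 → 10).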

From the vantage point of multicomputation and our Physics Project it’s interesting to look back at A New Kind of Science, and see some of what it describes with more clarity. In the fall of 2021, for example, I reviewed what had become of the original goal of “understanding complexity”, and what methodological ideas had emerged from that effort. I identified two primary ones, which I called “ruliology” and “metamodeling”. Ruliology, as I’ve mentioned above, is my new name for the pure, basic science of studying the behavior of systems with simple rules: in effect, it’s the science of exploring the computational universe.

Metamodeling is the key to making connections to systems in nature and elsewhere that one wants to study. Its goal is to find the “minimal models for models”. Often there are existing models for systems. But the question is what the ultimate essence of those models is. Can everything be reduced to a cellular automaton? Or a multiway system? What is the minimal “computational essence” of a system? And as we begin to apply the multicomputational paradigm to different fields, a key step will be metamodeling.

Ruliology and metamodeling are in a sense already core concepts in A New Kind of Science, though not under those names. Observer theory is much less explicitly covered. And many concepts—like branchial space, token-event graphs, the multiway causal graph and the ruliad—have only emerged now, with the Physics Project and the arrival of the multicomputational paradigm.

Multicomputation, the Physics Project and the Metamathematics Project are sowing their own seeds. But there are still many more seeds to harvest even from A New Kind of Science. And just as the multicomputational paradigm was not something that I, for one, could foresee from A New Kind of Science, no doubt there will in time be other major new directions that will emerge. But, needless to say, one should expect that it will be computationally irreducible to determine what will happen: a metacontribution of the science to the consideration of its own future.

The Doing of Science

The creation of A New Kind of Science took me a decade of intense work, none of which saw the light of day until the moment the book was published on May 14, 2002. When I returned to basic science 17 years later, the world had changed, and it was possible for me to adopt a quite different approach, in a sense making the process of doing science as open and incremental as possible.

It’s helped that there’s the web, the cloud and livestreaming. But in a sense the most crucial element has been the Wolfram Language, and its character as a full-scale computational language. Yes, I use English to tell the story of what we’re doing. But fundamentally I’m doing science in the Wolfram Language, using it both as a practical tool, and as a medium for organizing my thoughts, and sharing and communicating what I’m doing.

Starting in 2003, we’ve had an annual Wolfram Summer School at which a long string of talented students has explored ideas based on A New Kind of Science, always through the medium of the Wolfram Language. In the last couple of years we’ve added a Physics track, connected to the Physics Project, and this year we’re adding a Metamathematics track, connected to the Metamathematics Project.

During the 17 years that I wasn’t focused on basic science, I was doing technology development. And I think it’s fair to say that at Wolfram Research over the past 35 years we’ve created a remarkably effective “machine” for doing innovative research and development. Mostly it’s been producing technology and products. But one of the very interesting features of the Physics Project and the projects that have followed it is that we’ve been applying to them the same managed approach to innovation that we’ve used so successfully for so many years at our company. And I consider the results to be quite spectacular: in a matter of weeks or months I think we’ve managed to deliver what might otherwise have taken years, if it could have been done at all.

And particularly with the arrival of the multicomputational paradigm there’s quite a challenge. There are a huge number of exceptionally promising directions to follow that have the potential to deliver revolutionary results. And with our concepts of managed research, open science and broad connection to talent it should be possible to make great progress even fairly quickly. But doing so requires significant scaling up of our efforts so far, which is why we’re now launching the Wolfram Institute to serve as a focal point for these efforts.

When I think about A New Kind of Science, I can’t help but be struck by all the things that had to align to make it possible. My early experiences in science and technology, the personal environment I’d created—and the tools I built. I wondered at the time whether the five years I took “away from basic science” to launch Mathematica and what’s now the Wolfram Language might have slowed down what became A New Kind of Science. Looking back I can say that the answer was definitively no. Because without the Wolfram Language the creation of A New Kind of Science would have needed “not just a decade”, but likely more than a lifetime.

And a similar pattern has repeated now, though even more so. The Physics Project and everything that has developed from it has been made possible by a tower of specific circumstances that stretch back nearly half a century—including my 17-year hiatus from basic science. Had all these circumstances not aligned, it is hard to say when something like the Physics Project would have happened, but my guess is that it would have been at least a significant part of a century away.

It is a lesson of the history of science that the absorption of major new paradigms is a slow process. And normally the timescales are long compared to the 20 years since A New Kind of Science was published. But in a sense we’ve managed to jump far ahead of schedule with the Physics Project and with the development of the multicomputational paradigm. Five years ago, when I summarized the first 15 years of A New Kind of Science, I had no idea that any of this would happen.

But now that it has—and with all the methodology we’ve developed for getting science done—it feels as if we have a certain obligation to see just what can be achieved. And to see just what can be built in the years to come on the foundations laid down by A New Kind of Science.