The Importance of Multiway Systems

It’s all about systems where there can in effect be many possible paths of history. In a typical standard computational system like a cellular automaton, there’s always just one path, defined by evolution from one state to the next. But in a multiway system, there can be many possible next states—and thus many possible paths of history. Multiway systems have a central role in our Physics Project, particularly in connection with quantum mechanics. But what’s now emerging is that multiway systems in fact serve as a quite general foundation for a whole new “multicomputational” paradigm for modeling.

My objective here is twofold. First, I want to use multiway systems as minimal models for growth processes based on aggregation and tiling. And second, I want to use this concrete application as a way to develop further intuition about multiway systems in general. Elsewhere I have explored multiway systems for strings, multiway systems based on numbers, multiway Turing machines, multiway combinators, multiway expression evaluation and multiway systems based on games and puzzles. But in studying multiway systems for aggregation and tiling, we’ll be dealing with something that is immediately more physical and tangible.

When we think of “growth by aggregation” we typically imagine a “random process” in which new pieces get added “at random” to something. But each of these “random possibilities” in effect defines a different path of history. And the concept of a multiway system is to capture all those possibilities together. In a typical random (or “stochastic”) model one’s just tracing a single path of history, and one imagines one doesn’t have enough information to say which path it will be. But in a multiway system one’s looking at all the paths. And in doing so, one’s in a sense making a model for the “whole story” of what can happen.

The choice of a single path can be “nondeterministic”. But the whole multiway system is deterministic. And by studying that “deterministic whole” it’s often possible to make useful, quite general statements.

One can think of a particular moment in the evolution of a multiway system as giving something like an ensemble of states of the kind studied in statistical mechanics. But the general concept of a multiway system, with its discrete branching at discrete steps, depends on a level of fundamental discreteness that’s quite unfamiliar from traditional statistical mechanics—though it is perfectly straightforward to define in a computational, or even mathematical, way.

For aggregation it’s easy enough to set up a minimal discrete model—at least if one allows explicit randomness in the model. But a major point of what we’ll do here is to “go above” that randomness, setting up our model in terms of a whole, deterministic multiway system.

What can we learn by looking at this whole multiway system? Well, for example, we can see whether there’ll always be growth—whatever the random choices may be—or whether the growth will sometimes, or even always, stop. And in many practical applications (think, for example, tumors) it can be very important to know whether growth always stops—or through what paths it can continue.

A lot of what we’ll at first do here involves seeing the effect of local constraints on growth. Later on, we’ll also look at effects of geometry, and we’ll study how objects of different shapes can aggregate, or ultimately tile.

The models we’ll introduce are in a sense very minimal—combining the simplest multiway structures with the simplest spatial structures. And with this minimality it’s almost inevitable that the models will show up as idealizations of all sorts of systems—and as foundations for good models of these systems.

At first, multiway systems can seem rather abstract and difficult to grasp—and perhaps that’s inevitable given our human tendency to think sequentially. But by seeing how multiway systems play out in the concrete case of growth processes, we get to build our intuition and develop a more grounded view—that will stand us in good stead in exploring other applications of multiway systems, and in general in coming to terms with the whole multicomputational paradigm.

The Simplest Case

It’s the ultimate minimal model for random discrete growth (often called the Eden model). On a square grid, start with one black cell, then at each step randomly attach a new black cell somewhere onto the growing “cluster”:

After 10,000 steps we might get:

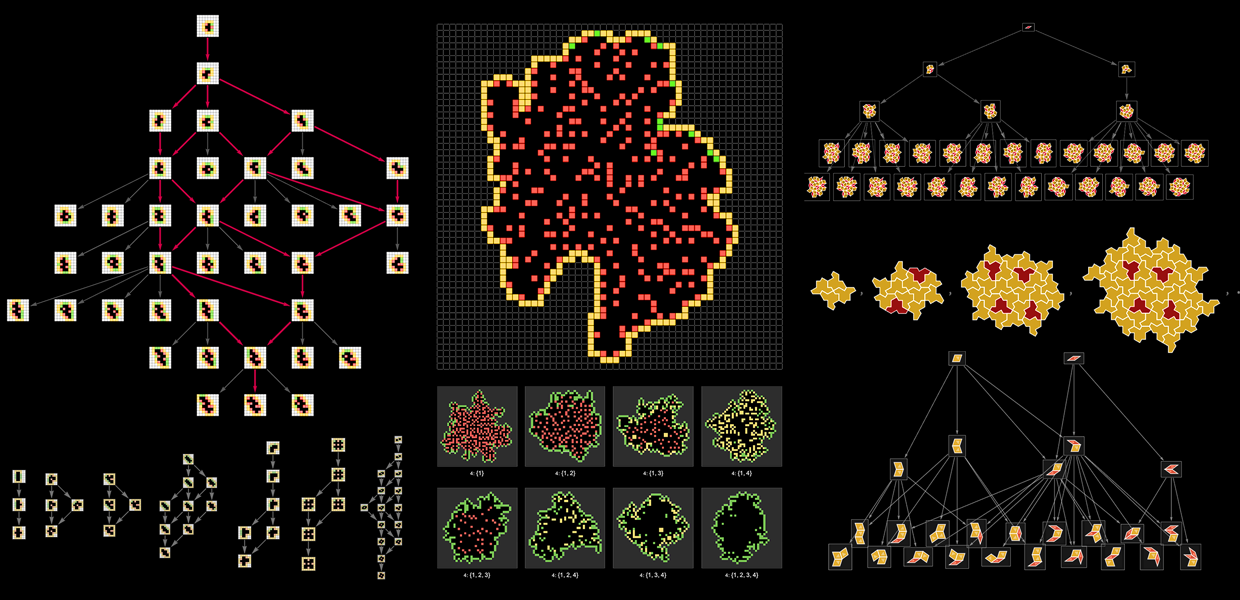
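The growth process just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `eden_cluster` is hypothetical, and each new cell is chosen uniformly among the empty positions orthogonally adjacent to the cluster.

```python
import random

NEIGHBORS_4 = ((1, 0), (-1, 0), (0, 1), (0, -1))

def eden_cluster(steps, seed=None):
    """Grow a cluster from one cell by adding `steps` randomly chosen adjacent cells."""
    rng = random.Random(seed)
    cluster = {(0, 0)}
    # Empty cells orthogonally adjacent to the cluster: the candidate growth sites.
    frontier = set(NEIGHBORS_4)
    for _ in range(steps):
        cell = rng.choice(sorted(frontier))  # sorted so a given seed is reproducible
        frontier.remove(cell)
        cluster.add(cell)
        for dx, dy in NEIGHBORS_4:
            neighbor = (cell[0] + dx, cell[1] + dy)
            if neighbor not in cluster:
                frontier.add(neighbor)
    return cluster
```

Calling `eden_cluster(10000)` should give a set of 10,001 cell coordinates that can be plotted to get a picture of this general kind.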

But what are all the possible things that can happen? For that, we can construct a multiway system:

A lot of these clusters differ only by a trivial translation; canonicalizing by translation we get

or after another step:

If we also reduce out rotations and reflections we get

or after another step:

The possible clusters after t steps are just the possible polyominoes (or “square lattice animals”) with t cells. The number of these for successive t is

growing roughly like k^t for large t, with k a little larger than 4:

By the way, canonicalization by translation always reduces the number of possible clusters by a factor of t. Canonicalization by rotation and reflection can reduce the number by a factor of 8 if the cluster has no symmetry (which for large clusters becomes increasingly likely), and by a smaller factor the more symmetry the cluster has, as in:
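The counts and reduction factors mentioned here can be checked with a small multiway enumeration. The following is a minimal Python sketch, not the code used for the pictures; the helper names (`successors`, `translate_canonical`, `full_canonical`, `multiway_counts`) are hypothetical, and the raw states are taken to be clusters grown from a single cell at the origin.

```python
NEIGHBORS_4 = ((1, 0), (-1, 0), (0, 1), (0, -1))

def successors(cluster):
    """All clusters obtained by adding one cell orthogonally adjacent to `cluster`."""
    frontier = {(x + dx, y + dy) for x, y in cluster for dx, dy in NEIGHBORS_4} - cluster
    return {cluster | {cell} for cell in frontier}

def translate_canonical(cluster):
    """Shift the cluster so that its bounding box starts at (0, 0)."""
    min_x = min(x for x, _ in cluster)
    min_y = min(y for _, y in cluster)
    return frozenset((x - min_x, y - min_y) for x, y in cluster)

def full_canonical(cluster):
    """Canonical form under translation, rotation and reflection (8 symmetries)."""
    variants = []
    cells = list(cluster)
    for _ in range(4):
        cells = [(-y, x) for x, y in cells]                     # rotate by 90 degrees
        for shape in (cells, [(-x, y) for x, y in cells]):      # the shape and its mirror image
            variants.append(tuple(sorted(translate_canonical(shape))))
    return frozenset(min(variants))

def multiway_counts(max_cells, canonical=lambda cluster: cluster):
    """Number of distinct states at each step, starting from a single cell."""
    states = {canonical(frozenset({(0, 0)}))}
    counts = [len(states)]
    for _ in range(max_cells - 1):
        states = {canonical(s) for state in states for s in successors(state)}
        counts.append(len(states))
    return counts

print(multiway_counts(5))                        # raw states: [1, 4, 18, 76, 315]
print(multiway_counts(5, translate_canonical))   # up to translation: [1, 2, 6, 19, 63]
print(multiway_counts(5, full_canonical))        # up to all symmetries: [1, 1, 2, 5, 12]
```

The first list is t times the second at each step, which is the factor-of-t reduction from translation. The third list shows a reduction by less than 8 for these small, often symmetric, clusters.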

With canonicalization, the multiway graph after 7 steps has the form

and it doesn’t look any simpler with alternative rendering:

If we imagine that at each step, cells are added with equal probability at every possible position on the cluster, or equivalently that all outgoing edges from a given cluster in the uncanonicalized multiway graph are followed with equal probability, then we can get a distribution of probabilities for the distinct canonical clusters obtained—here shown after 7 steps:
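As a rough sketch of how such probabilities can be computed, one can push exact fractions through the uncanonicalized multiway graph and only merge equivalent clusters at the end. The names `growth_positions`, `canonical` and `cluster_probabilities` are hypothetical, and the code assumes growth from a single cell with uniform choice among adjacent empty positions.

```python
from collections import defaultdict
from fractions import Fraction

NEIGHBORS_4 = ((1, 0), (-1, 0), (0, 1), (0, -1))

def growth_positions(cluster):
    """Empty positions orthogonally adjacent to the cluster."""
    return {(x + dx, y + dy) for x, y in cluster for dx, dy in NEIGHBORS_4} - cluster

def canonical(cluster):
    """Smallest representative under translation, rotation and reflection."""
    variants = []
    cells = list(cluster)
    for _ in range(4):
        cells = [(-y, x) for x, y in cells]
        for shape in (cells, [(-x, y) for x, y in cells]):
            min_x = min(x for x, _ in shape)
            min_y = min(y for _, y in shape)
            variants.append(tuple(sorted((x - min_x, y - min_y) for x, y in shape)))
    return frozenset(min(variants))

def cluster_probabilities(num_cells):
    """Exact probability of each canonical cluster with `num_cells` cells."""
    distribution = {frozenset({(0, 0)}): Fraction(1)}
    for _ in range(num_cells - 1):
        updated = defaultdict(Fraction)
        for cluster, probability in distribution.items():
            positions = growth_positions(cluster)
            for cell in positions:
                # Every outgoing edge of the uncanonicalized graph is equally likely.
                updated[cluster | {cell}] += probability / len(positions)
        distribution = updated
    merged = defaultdict(Fraction)
    for cluster, probability in distribution.items():
        merged[canonical(cluster)] += probability
    return dict(merged)
```

For 3 cells this gives probability 1/3 for the straight cluster and 2/3 for the L-shaped one, since 2 of the 6 growth positions around a 2-cell cluster extend it in a line.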

One feature of the large random cluster we saw at the beginning is that it has some holes in it. Clusters with holes start developing after 7 steps, with the smallest being:

This cluster can be reached through a subset of the multiway system:

And in fact in the limit of large clusters, the probability for there to be a hole seems to approach 1—even though the total fraction of area covered by holes approaches 0.
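One simple way to detect holes, sketched here as an assumption rather than as the method behind these results, is to flood-fill the empty cells in a padded bounding box and count the empty regions that are not connected to the outside. The function name `count_holes` is hypothetical, and empty cells are treated as connected orthogonally.

```python
from collections import deque

NEIGHBORS_4 = ((1, 0), (-1, 0), (0, 1), (0, -1))

def count_holes(cluster):
    """Count empty regions completely enclosed by the cluster."""
    xs = [x for x, _ in cluster]
    ys = [y for _, y in cluster]
    # Pad the bounding box by one cell so the outside forms a single empty region.
    empty = {
        (x, y)
        for x in range(min(xs) - 1, max(xs) + 2)
        for y in range(min(ys) - 1, max(ys) + 2)
    } - set(cluster)
    regions = 0
    while empty:
        queue = deque([empty.pop()])
        while queue:
            x, y = queue.popleft()
            for dx, dy in NEIGHBORS_4:
                neighbor = (x + dx, y + dy)
                if neighbor in empty:
                    empty.remove(neighbor)
                    queue.append(neighbor)
        regions += 1
    return regions - 1  # subtract the outside region

# A 7-cell cluster: a 3x3 block without its center and without one corner.
ring7 = {(0, 0), (1, 0), (2, 0), (0, 1), (2, 1), (0, 2), (1, 2)}
print(count_holes(ring7))  # 1
```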

One way to characterize the “space of possible clusters” is to create a branchial graph by connecting every pair of clusters that have a common ancestor one step back in the multiway graph:

The connectedness of all these graphs reflects the fact that with the rule we’re using, it’s always possible at any step to go from one cluster to another by a sequence of delete-one-cell/add-one-cell changes.
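The branchial construction can be sketched directly from its definition: take every state at one step, and join each pair of its successors. This is a minimal illustration for the unconstrained rule with uncanonicalized states; `successors` and `branchial_edges` are hypothetical names.

```python
from itertools import combinations

NEIGHBORS_4 = ((1, 0), (-1, 0), (0, 1), (0, -1))

def successors(cluster):
    """All clusters obtained by adding one cell orthogonally adjacent to `cluster`."""
    frontier = {(x + dx, y + dy) for x, y in cluster for dx, dy in NEIGHBORS_4} - cluster
    return {cluster | {cell} for cell in frontier}

def branchial_edges(states):
    """Unordered pairs of clusters that share a parent among `states`."""
    edges = set()
    for state in states:
        for a, b in combinations(successors(state), 2):
            edges.add(frozenset((a, b)))
    return edges

single = frozenset({(0, 0)})
dimers = successors(single)
print(len(branchial_edges({single})))  # 6: the four 2-cell states are all joined
print(len(branchial_edges(dimers)))    # 60 edges among the eighteen 3-cell states
```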

The branchial graphs here also show a 4-fold symmetry resulting from the symmetry of the underlying lattice. Canonicalizing the states, we get smaller branchial graphs that no longer show any such symmetry:

Totalistically Constrained Growth (4-Cell Neighborhoods)

With the rule we’ve been discussing so far, a new cell to be attached can be anywhere on a cluster. But what if we limit growth, by requiring that new cells must have certain numbers of existing cells around them? Specifically, let’s consider rules that look at the 4 orthogonal neighbors around any given position, and allow a new cell there only if there are specified numbers of existing cells in the neighborhood.

Starting with a cross of black cells, here are some examples of random clusters one gets after 20 steps with all possible rules of this type (the initial “4” designates that these are 4-neighbor rules):

Rules that don’t allow new cells to end up with just one existing neighbor can only fill in corners in their initial conditions, and can’t grow any further. But any rule that allows growth with only one existing neighbor produces clusters that keep growing forever. And here are some random examples of what one can get after 10,000 steps:

The last of these is the unconstrained (Eden model) rule we already discussed above. But let’s look more carefully at the first case—where there’s growth only if a new cell will end up with exactly one neighbor. The canonicalized multiway graph in this case is:

The possible clusters here correspond to polyominoes that are “always one cell wide” (i.e. have no 2×2 blocks), or, equivalently, have perimeter 2t + 2 at step t. The number of such canonicalized clusters grows like:
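The perimeter statement can be checked with a short sketch of totalistic 4-neighbor growth. The names `allowed_positions`, `perimeter` and `constrained_states` are hypothetical, and for simplicity the enumeration here starts from a single cell and canonicalizes by translation only.

```python
NEIGHBORS_4 = ((1, 0), (-1, 0), (0, 1), (0, -1))

def allowed_positions(cluster, allowed_counts):
    """Empty positions whose number of filled orthogonal neighbors is allowed by the rule."""
    frontier = {(x + dx, y + dy) for x, y in cluster for dx, dy in NEIGHBORS_4} - cluster
    return {
        (x, y)
        for x, y in frontier
        if sum((x + dx, y + dy) in cluster for dx, dy in NEIGHBORS_4) in allowed_counts
    }

def perimeter(cluster):
    """Number of cell edges that face an empty position."""
    return sum((x + dx, y + dy) not in cluster for x, y in cluster for dx, dy in NEIGHBORS_4)

def translate_canonical(cluster):
    min_x = min(x for x, _ in cluster)
    min_y = min(y for _, y in cluster)
    return frozenset((x - min_x, y - min_y) for x, y in cluster)

def constrained_states(num_cells, allowed_counts):
    """Clusters with `num_cells` cells reachable from a single cell under the rule."""
    states = {frozenset({(0, 0)})}
    for _ in range(num_cells - 1):
        states = {
            translate_canonical(state | {cell})
            for state in states
            for cell in allowed_positions(state, allowed_counts)
        }
    return states

t = 6
print(all(perimeter(c) == 2 * t + 2 for c in constrained_states(t, {1})))  # True
```

Each cell added with exactly one neighbor contributes 4 new edges and covers 2 existing ones, which is why the perimeter grows by 2 at every step.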

This is an increasing fraction of the total number of polyominoes—implying that most large polyominoes take this “spindly” form.

A new feature of a rule with constraints is that not all locations around a cluster may allow growth. Here is a version of the multiway system above, with cells around each cluster annotated with green if new growth is allowed there, and red if it never can be:

In a larger random cluster, we can see that with this rule, most of the interior is “dead” in the sense that the constraint of the rule allows no further growth there:

By the way, the clusters generated by this rule can always be directly represented by their “skeleton graphs”:

Looking at random clusters for all the (grow-with-1-neighbor) rules above, we see different patterns of holes in each case:

There are altogether five types of cells being distinguished here, reflecting different neighbor configurations:

Here’s a sample cluster generated with the 4:{1,3} rule:

Cells indicated with ![]() already have too many neighbors, and so can never be added to the cluster. Cells indicated with ![]() have exactly the right number of neighbors to be added immediately. Cells indicated with ![]() don’t currently have the right number of neighbors to grow, but if neighbors are filled in, they might be able to be added. Sometimes it will turn out that when neighbors of ![]() cells get filled in, they will actually prevent the cell from being added (so that it becomes ![]())—and in the particular case shown here that happens with the 2×2 blocks of ![]() cells.

The multiway graphs from the rules shown here are all qualitatively similar, but there are detailed differences. In particular, at least for many of the rules, an increasing number of states are “missing” relative to what one gets with the grow-in-all-cases 4:{1,2,3,4} rule—or, in other words, there are an increasing number of polyominoes that can’t be generated given the constraints:

The first polyomino that can’t be reached (which occurs at step 4) is:

At step 6 the polyominoes that can’t be reached for rules 4:{1,3} and 4:{1,3,4} are

while for 4:{1} and 4:{1,4} the additional polyomino

can also not be reached.

At step 8, the polyomino

is reachable with 4:{1} and 4:{1,3} but not with 4:{1,4} and 4:{1,3,4}.

Of some note is that none of the rules that exclude polyominoes can reach:

Totalistically Constrained Growth (8-Cell Neighborhoods)

What happens if one considers diagonal as well as orthogonal neighbors, giving a total of 8 neighbors around a cell? There are 256 possible rules in this case, corresponding to the possible subsets of Range[8]. Here are samples of what they do after 200 steps, starting from an initial ![]() cluster:

Two cases that at least initially show growth here are (the “8” designates that these are 8-neighbor rules):

In the {2} case, the multiway graph begins with:

One might assume that every branch in this graph would continue forever, and that growth would never “get stuck”. But it turns out that after 9 steps the following cluster is generated:

And with this cluster, no further growth is possible: no positions around the boundary have exactly 2 neighbors. In the multiway graph up to 10 steps, it turns out this is the only “terminal cluster” that can be generated—out of a total of 1115 possible clusters:
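The test for a terminal cluster is easy to state in code. The following Python sketch uses hypothetical names (`growth_positions`, `is_terminal`, `terminal_clusters`), takes the rule as a set of allowed 8-neighbor counts, and canonicalizes by translation only; it is an illustration, not the procedure used to produce the results quoted here.

```python
NEIGHBORS_8 = [(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0)]

def growth_positions(cluster, allowed_counts):
    """Empty positions whose number of filled neighbors (out of 8) is allowed by the rule."""
    candidates = {(x + dx, y + dy) for x, y in cluster for dx, dy in NEIGHBORS_8} - cluster
    return {
        (x, y)
        for x, y in candidates
        if sum((x + dx, y + dy) in cluster for dx, dy in NEIGHBORS_8) in allowed_counts
    }

def is_terminal(cluster, allowed_counts):
    """True if the rule allows no further growth anywhere around the cluster."""
    return not growth_positions(cluster, allowed_counts)

def translate_canonical(cluster):
    min_x = min(x for x, _ in cluster)
    min_y = min(y for _, y in cluster)
    return frozenset((x - min_x, y - min_y) for x, y in cluster)

def terminal_clusters(initial, allowed_counts, steps):
    """Terminal clusters encountered within `steps` steps of the multiway evolution."""
    states = {translate_canonical(frozenset(initial))}
    found = set()
    for _ in range(steps):
        next_states = set()
        for state in states:
            positions = growth_positions(state, allowed_counts)
            if not positions:
                found.add(state)
            next_states.update(translate_canonical(state | {p}) for p in positions)
        states = next_states
    found.update(state for state in states if is_terminal(state, allowed_counts))
    return found
```

With the {2} rule, for example, a single cell is already terminal (every surrounding position has just 1 neighbor), while a 2-cell cluster is not.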

So how is that terminal cluster reached? Here’s the fragment of multiway graph that leads to it:

If we don’t prune off all the ways to “go astray”, the fragment appears as part of a larger multiway graph:

And if one follows all paths in the unpruned (and uncanonicalized) multiway graph at random (i.e. at each step, one chooses each branch with equal probability), it turns out that the probability of ever reaching this particular terminal cluster is just:

(And the fact that this number is fairly small implies that the system is far from confluent; there are many paths that, for example, don’t converge to the fixed point corresponding to this terminal cluster.)

If we keep going in the evolution of the multiway system, we’ll reach other terminal clusters; after 12 steps the following have appeared:

For the {3} rule above, the multiway system takes a little longer to “get going”:

Once again there are terminal clusters where the system gets stuck; the first of them appears at step 14:

And also once again the terminal cluster appears as an isolated node in the whole multiway system:

The fragment of multiway graph that leads to it is:

So far we’ve been finding terminal clusters by waiting for them to appear in the evolution of the multiway system. But there’s another approach, similar to what one might use in filling in something like a tiling. The idea is that every cell in a terminal cluster must have neighbors that don’t allow further growth. In other words, the terminal cluster must consist of certain “local tiles” for which the constraints don’t allow growth. But what configurations of local tiles are possible? To determine this, we turn the matching conditions for the tiles into logical expressions whose variables are True and False depending on whether particular positions in the template do or do not contain cells in the cluster. By solving the satisfiability problem for the combination of these logical expressions, one finds configurations of cells that could conceivably correspond to terminal clusters.

Following this procedure for the {2} rules with regions of up to 6×6 cells we find:

But now there’s an additional constraint. Assuming one starts from a connected initial cluster, any subsequent cluster generated must also be connected. Removing the non-connected cases we get:
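For very small regions one can skip the satisfiability solver and simply try every configuration. The sketch below substitutes that brute-force search for the approach described above; the names are hypothetical, connectivity is assumed to mean 8-neighbor connectivity, and the exhaustive loop is only practical for regions of a dozen or so cells.

```python
from itertools import product

NEIGHBORS_8 = [(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0)]

def is_terminal(cluster, allowed_counts):
    """True if no empty position next to the cluster has an allowed neighbor count."""
    candidates = {(x + dx, y + dy) for x, y in cluster for dx, dy in NEIGHBORS_8} - cluster
    return not any(
        sum((x + dx, y + dy) in cluster for dx, dy in NEIGHBORS_8) in allowed_counts
        for x, y in candidates
    )

def is_connected(cluster):
    """True if the (non-empty) cluster is one piece under 8-neighbor adjacency."""
    remaining = set(cluster)
    stack = [remaining.pop()]
    while stack:
        x, y = stack.pop()
        for dx, dy in NEIGHBORS_8:
            neighbor = (x + dx, y + dy)
            if neighbor in remaining:
                remaining.remove(neighbor)
                stack.append(neighbor)
    return not remaining

def translate_canonical(cluster):
    min_x = min(x for x, _ in cluster)
    min_y = min(y for _, y in cluster)
    return frozenset((x - min_x, y - min_y) for x, y in cluster)

def terminal_configurations(width, height, allowed_counts):
    """All terminal configurations inside a width x height region, up to translation."""
    cells = [(x, y) for x in range(width) for y in range(height)]
    found = set()
    for mask in product((False, True), repeat=len(cells)):
        cluster = frozenset(cell for cell, filled in zip(cells, mask) if filled)
        if cluster and is_terminal(cluster, allowed_counts):
            found.add(translate_canonical(cluster))
    return found

connected = [c for c in terminal_configurations(3, 3, {2}) if is_connected(c)]
```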

So given these terminal clusters, what initial conditions can lead to them? To determine this we effectively have to invert the aggregation process—giving in the end a multiway graph that includes all initial conditions that can generate a given terminal cluster. For the smallest terminal cluster we get:

Our 4-cell “T” initial condition appears here—but we see that there are also even smaller 2-cell initial conditions that lead to the same terminal cluster.

For all the terminal clusters we showed before, we can construct the multiway graphs starting with the minimal initial clusters that lead to them:

For terminal clusters like

there’s no nontrivial multiway system to show, since these clusters can only appear as initial conditions; they can never be generated in the evolution.

There are quite a few small clusters that can only appear as initial conditions, and do not have preimages under the aggregation rule. Here are the cases that fit in a 3×3 region:

The case of the {3} rule is fairly similar to the {2} rule. The possible terminal clusters up to 5×5 are:

However, most of these have only a fairly limited set of possible preimages:

For example we have:

And indeed beyond the (size-17) example we already showed above, no other terminal clusters that can be generated from a T initial condition appear here. Sampling further, however, additional terminal clusters appear (beginning at size 25):

The fragments of multiway graphs for the first few of these are:

Random Evolution

We’ve seen above that for the rules we’ve been investigating, terminal clusters are quite rare among possible states in the multiway system. But what happens if we just evolve at random? How often will we wind up with a terminal cluster? When we say “evolve at random”, what we mean is that at each step we’re going to look at all possible positions where a new cell could be added to the cluster that exists so far, and then we’re going to pick with equal probability at which of these to actually add the new cell.
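In code, this kind of random evolution might look like the following sketch. The function names are hypothetical, the rule is given as a set of allowed 8-neighbor counts, and the growth positions are recomputed from scratch at each step, which is simple but slow for large clusters.

```python
import random

NEIGHBORS_8 = [(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0)]

def growth_positions(cluster, allowed_counts):
    """Empty positions whose number of filled neighbors (out of 8) is allowed by the rule."""
    candidates = {(x + dx, y + dy) for x, y in cluster for dx, dy in NEIGHBORS_8} - cluster
    return {
        (x, y)
        for x, y in candidates
        if sum((x + dx, y + dy) in cluster for dx, dy in NEIGHBORS_8) in allowed_counts
    }

def random_evolution(initial, allowed_counts, max_steps, seed=None):
    """Add uniformly chosen allowed cells until none remain or `max_steps` is reached.

    Returns (cluster, steps_taken, terminated), where `terminated` is True only if
    the evolution was found to be stuck before `max_steps` cells had been added.
    """
    rng = random.Random(seed)
    cluster = set(initial)
    for step in range(max_steps):
        positions = growth_positions(cluster, allowed_counts)
        if not positions:
            return cluster, step, True
        cluster.add(rng.choice(sorted(positions)))  # sorted so a given seed is reproducible
    return cluster, max_steps, False
```

Running this many times with different seeds and recording `steps_taken` for the runs that terminate gives an empirical lifetime distribution.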

For the 8:{3} rule something surprising happens. Even though terminal clusters are rare in its multiway graph, it turns out that regardless of its initial conditions, it always eventually reaches a terminal cluster—though it often takes a while. And here, for example, are a few possible terminal clusters, annotated with the number of steps it took to reach them (which is also equal to the number of cells they contain):

The distribution of the number of steps to termination seems to be very roughly exponential (here based on a sample of 10,000 random cases)—with mean lifetime around 2300 and half-life around 7400:

Here’s an example of a large terminal cluster—that takes 21,912 steps to generate:

And here’s a map showing when growth in different parts of this cluster occurred (with blue being earliest and red being latest):

This picture suggests that different parts of the cluster “actively grow” at different times, and if we look at a “spacetime” plot of where growth occurs as a function of time, we can confirm this:

And indeed what this suggests is that what’s happening is that different parts of the cluster are at first “fertile”, but later inevitably “burn out”—so that in the end there are no possible positions left where growth can occur.

But what shapes can the final terminal clusters form? We can get some idea by looking at a “compactness measure” (of the kind often used to study gerrymandering) that roughly gives the standard deviation of the distances from the center of each cluster to each of the cells in it. Both “very stringy” and “roughly circular” clusters are fairly rare; most clusters lie somewhere in between:
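One plausible reading of such a measure, given here only as an assumption since the exact definition isn’t spelled out, is the standard deviation of the distances from the centroid of the cluster to its cells. The function name `distance_spread` is hypothetical.

```python
import math

def distance_spread(cluster):
    """Standard deviation of the distances from the centroid to each cell."""
    n = len(cluster)
    cx = sum(x for x, _ in cluster) / n
    cy = sum(y for _, y in cluster) / n
    distances = [math.hypot(x - cx, y - cy) for x, y in cluster]
    mean = sum(distances) / n
    return math.sqrt(sum((d - mean) ** 2 for d in distances) / n)
```

A 2×2 block gives a spread of 0 because all its cells are equally far from the centroid, while a straight line of 3 cells gives √2/3 ≈ 0.47. To compare clusters of different sizes one would presumably also want to normalize by some overall length scale.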

If we look not at the 8:{3} but instead at the 8:{2} rule, things are very different. Once again, it’s possible to reach a terminal cluster, as the multiway graph shows. But now random evolution almost never reaches a terminal cluster, and instead almost always “runs away” to generate an infinite cluster. The clusters generated in this case are typically much more “compact” than in the 8:{3} case

and this is also reflected in the “spacetime” version:

Parallel Growth and Causal Graphs

In building up our clusters so far, we’ve always been assuming that cells are added sequentially, one at a time. But if two cells are far enough apart, we can actually add them “simultaneously”, in parallel, and end up building the same cluster. We can think of the addition of each cell as being an “event” that updates the state of the cluster. Then—just like in our Physics Project, and other applications of multicomputation—we can define a causal graph that represents the causal dependencies between these events, and then foliations of this causal graph tell us possible overall sequences of updates, including parallel.

As an example, consider this sequence of states in the “always grow” 4:{1,2,3,4} rule—where at each step the cell that’s new is colored red (and we’re including the “nothing” state at the beginning):

Every transition between successive states defines an event:

There’s then causal dependence of one event on another if the cell added in the second event is adjacent to the one added in the first event. So, for example, there are causal dependencies like

and

where in the second case additional “spatially separated” cells have been added that aren’t involved in the causal dependence. Putting all the causal dependencies together, we get the complete causal graph for this evolution:

We can recover our original sequence of states by picking a particular ordering of these events (here indicated by the positions of the cells they add):

This path has the property that it always follows the direction of causal edges—and we can make that more obvious by using a different layout for the causal graph:

But in general we can use any ordering of events consistent with the causal graph. Another ordering (out of a total of 40,320 possibilities in this case) is

which gives the sequence of states

with the same final cluster configuration, but different intermediate states.

But now the point is that the constraints implied by the causal graph do not require all events to be applied sequentially. Some events can be considered “spacelike separated” and so can be applied simultaneously. And in fact, any foliation of the causal graph defines a certain sequence for applying events—either sequentially or in parallel. So, for example, here is one particular foliation of the causal graph (shown with two different renderings for the causal graph):

And here is the corresponding sequence of states obtained:

And since in some slices of this foliation multiple events happen “in parallel”, it’s “faster” to get to the final configuration. (As it happens, this foliation is like a “cosmological rest frame foliation” in our Physics Project, and involves the maximum possible number of events happening on each slice.)
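The construction of the causal graph, and of a foliation that applies events as early as possible, can be sketched as follows. The names `causal_edges` and `earliest_foliation` are hypothetical; events are numbered by their position in the given sequence of added cells, and adjacency is orthogonal, as for the 4-neighbor rule.

```python
def causal_edges(cells):
    """Edges (i, j) with i < j where the cell added by event j is adjacent to that of event i."""
    edges = []
    for j, (xj, yj) in enumerate(cells):
        for i in range(j):
            xi, yi = cells[i]
            if abs(xi - xj) + abs(yi - yj) == 1:
                edges.append((i, j))
    return edges

def earliest_foliation(cells):
    """Group events into slices, placing each event right after the last event it depends on."""
    depth = [0] * len(cells)
    # Edges are produced in order of increasing j, so depth[i] is final when it is used.
    for i, j in causal_edges(cells):
        depth[j] = max(depth[j], depth[i] + 1)
    slices = [[] for _ in range(max(depth) + 1)]
    for event, d in enumerate(depth):
        slices[d].append(event)
    return slices

sequence = [(0, 0), (1, 0), (-1, 0), (0, 1), (2, 0)]
print(causal_edges(sequence))        # [(0, 1), (0, 2), (0, 3), (1, 4)]
print(earliest_foliation(sequence))  # [[0], [1, 2, 3], [4]]
```

In this small example, five sequential events collapse into three slices, because events 1, 2 and 3 depend only on event 0 and not on each other.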

Different foliations (and there are a total of 678,972 possibilities in this case) will give different sequences of states, but always the same final state:

Note that nothing we’ve done here depends on the particular rule we’ve used. So, for example, for the 8:{2} rule with sequence of states

the causal graph is:

It’s worth commenting that everything we’ve done here has been for particular sequences of states, i.e. particular paths in the multiway graph. And in effect what we’re doing is the analog of classical spacetime physics—tracing out causal dependencies in particular evolution histories. But in general we could look at the whole multiway causal graph, with events that are not only timelike or spacelike separated, but also branchlike separated. And if we make foliations of this graph, we’ll end up not only with “classical” spacetime states, but also “quantum” superposition states that would need to be represented by something like multispace (in which at each spatial position, there is a “branchial stack” of possible cell values).

The One-Dimensional Case

So far we’ve been considering aggregation processes in two dimensions. But what about one dimension? In 1D, a “cluster” just consists of a sequence of cells. The simplest rule allows a cell to be added whenever it’s adjacent to a cell that’s already there. Starting from a single cell, here’s a possible random evolution according to such a rule, shown evolving down the page:

We can also construct the multiway system for this rule:

Canonicalizing the states gives the trivial multiway graph:

But just like in the 2D case things get less trivial if there are constraints on growth. For example, assume that before placing a new cell we count the number of existing cells that lie either distance 1 or distance 2 away. If a new cell is allowed only when this count is exactly 1, we get behavior like:

The corresponding multiway system is

or after canonicalization:

The number of distinct sequences after t steps here is given by

which can be expressed in terms of Fibonacci numbers, and for large t is about ![]().

The rule in effect generates all possible Morse-code-like sequences, consisting of runs of either 2-cell (“long”) black blocks or 1-cell (“short”) black blocks, interspersed by “gaps” of single white cells.
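This structure is easy to confirm with a small enumeration. The sketch below uses hypothetical names and canonicalizes by translation only (not reflection), in which case the states with t cells correspond to the ways of writing t as an ordered sum of 1s and 2s, so the counts are plain Fibonacci numbers; the counts quoted above may use a different canonicalization.

```python
OFFSETS = (-2, -1, 1, 2)

def allowed_1d(cluster):
    """Empty positions with exactly one existing cell at distance 1 or 2."""
    candidates = {p + d for p in cluster for d in OFFSETS} - cluster
    return {p for p in candidates if sum((p + d) in cluster for d in OFFSETS) == 1}

def canonical_1d(cluster):
    """Shift the cluster so that its leftmost cell is at position 0."""
    leftmost = min(cluster)
    return frozenset(p - leftmost for p in cluster)

def state_counts(max_cells):
    """Number of distinct states (up to translation) for 1, 2, ..., max_cells cells."""
    states = {frozenset({0})}
    counts = [len(states)]
    for _ in range(max_cells - 1):
        states = {canonical_1d(s | {p}) for s in states for p in allowed_1d(s)}
        counts.append(len(states))
    return counts

print(state_counts(8))  # [1, 2, 3, 5, 8, 13, 21, 34]
```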

The branchial graphs for this system have the form:

Looking at random evolutions for all possible rules of this type we get:

The corresponding canonicalized multiway graphs are:

The rules we’ve looked at so far are purely totalistic: whether a new cell can be added depends only on the total number of cells in its neighborhood. But (much like, for example, in cellular automata) it’s also possible to have rules where whether one can add a new cell depends on the complete configuration of cells in a neighborhood. Mostly, however, such rules seem to behave very much like totalistic ones.

Other generalizations include, for example, rules with multiple “colors” of cells, and rules that depend either on the total number of cells of different colors, or their detailed configurations.

The Three-Dimensional Case

The kind of analysis we’ve done for 2D and 1D aggregation systems can readily be extended to 3D. As a first example, consider a rule in which cells can be added along each of the 6 coordinate directions in a 3D grid whenever they are adjacent to an existing cell. Here are some typical examples of random clusters formed in this case:

Taking successive slices through the first of these (and coloring by “age”) we get:

If we allow a cell to be added only when it is adjacent to just one existing cell (corresponding to the rule 6:{1}) we get clusters that from the outside look almost indistinguishable

but which have an “airier” internal structure:

Much like in 2D, with 6 neighbors, there can’t be unbounded growth unless cells can be added when there is just one cell in the neighborhood. But in analogy to what happens in 2D, things get more complicated when we allow “corner adjacency” and have a 26-cell neighborhood.

If cells can be added whenever there’s at least one adjacent cell, the results are similar to the 6-neighbor case, except that now there can be “corner-adjacent outgrowths”

and the whole structure is “still airier”:

Little qualitatively changes for a rule like 26:{2} where growth can occur only with exactly 2 neighbors (here starting with a 3D dimer):

But the general question of when there is growth, and when not, is quite complicated and subtle. In particular, even with a specific rule, there are often some initial conditions that can lead to unbounded growth, and others that cannot.

Sometimes there is growth for a while, but then it stops. For example, with the rule 26:{9}, one possible path of evolution from a 3×3×3 block is:

The full multiway graph in this case terminates, confirming that no unbounded growth is ever possible:

With other initial conditions, however, this rule can grow for longer (here shown every 10 steps):

And from what one can tell, all rules 26:{n} lead to unbounded growth for ![](), and do not for ![]().

Polygonal Shapes

So far, we’ve been looking at “filling in cells” in grids—in 2D, 1D and 3D. But we can also look at just “placing tiles” without a grid, with each new tile attaching edge to edge to an existing tile.

For square tiles, there isn’t really a difference:

And the multiway system is just the same as for our original “grow anywhere” rule on a 2D grid:

Here’s now what happens for triangular tiles:

The multiway graph now generates all polyiamonds (triangular polyforms):

And since equilateral triangles can tessellate in a regular lattice, we can think of this—like the square case—as “filling in cells in a lattice” rather than just “placing tiles”. Here are some larger examples of random clusters in this case:

Essentially the same happens with regular hexagons:

The multiway graph generates all polyhexes:

Here are some examples of larger clusters—showing somewhat more “tendrils” than the triangular case:

And in an “effectively lattice” case like this we could also go on and impose constraints on neighborhood configurations, much as we did in earlier sections above.

But what happens if we consider shapes that do not tessellate the plane—like regular pentagons? We can still “sequentially place tiles” with the constraint that any new tile can’t overlap an existing one. And with this rule we get for example:

Here are some “randomly grown” larger clusters—showing all sorts of irregularly shaped interstices inside:

(And, yes, generating such pictures correctly is far from trivial. In the “effectively lattice” case, coincidences between polygons are fairly easy to determine exactly. But in something like the pentagon case, doing so requires solving equations in a high-degree algebraic number field.)

The multiway graph, however, does not show any immediately obvious differences from the ones for “effectively lattice” cases:

It makes it slightly easier to see what’s going on if we riffle the results on the last step we show:

The branchial graphs in this case have the form:

Here’s a larger cluster formed from pentagons:

And remember that the way this is built is to add one pentagon at each step, sequentially, by testing every “exposed edge” and seeing in which cases a pentagon will “fit”. As in all our other examples, there is no preference given to “external” versus “internal” edges.

Note that whereas “effectively lattice” clusters always eventually fill in all their holes, this isn’t true for something like the pentagon case. And in this case it appears that in the limit, about 28% of the overall area is taken up by holes. And, by the way, there’s a definite “zoo” of at least small possible holes, here plotted with their (logarithmic) probabilities:

So what happens with other regular polygons? Here’s an example with octagons (and in this case the limiting total area taken up by holes is about 35%):

And, by the way, here’s the “zoo of holes” in this case:

With pentagons, it’s pretty clear that difficult-to-resolve geometrical situations will arise. And one might have thought that octagons would avoid these. But there are still plenty of strange “mismatches” like

that aren’t easy to characterize or analyze. By the way, one should note that any time a “closed hole” is formed, the vectors corresponding to the edges that form its boundary must sum to zero—in effect defining an equation.

When the number of sides in the regular polygon gets large, our clusters will approximate circle packings. Here’s an example with 12-gons:

But of course because we’re insisting on adding one polygon at a time, the resulting structure is much “airier” than a true circle packing—of the kind that would be obtained (at least in 2D) by “pushing on the edges” of the cluster.

Polyomino Tilings

In the previous section we considered “sequential tilings” constructed from regular polygons. But the methods we used are quite general, and can be applied to sequential tilings formed from any shape—or shapes (or, at least, any shapes for which “attachment edges” can be identified).

As a first example, consider a domino or dimer shape—which we assume can be oriented both vertically and horizontally:

Here’s a somewhat larger cluster formed from dimers:

Here’s the canonicalized multiway graph in this case:

And here are the branchial graphs:

So what about other polyomino shapes? What happens when we try to sequentially tile with these—effectively making “polypolyominoes”?

Here’s an example based on an L-shaped polyomino:

Here’s a larger cluster

and here’s the canonicalized multiway graph after just 1 step

and after 2 steps:

The only other 3-cell polyomino is the straight tromino:

(For dimers, the limiting fraction of area covered by holes seems to be about 17%, while for L and tromino polyominoes, it’s about 27%.)

Going to 4 cells, there are 5 possible polyominoes—and here are samples of random clusters that can be built with them (note that in the last case shown, we require only that “subcells” of the 2×2 polyomino must align):

The corresponding multiway graphs are:

Continuing for more steps in a few cases:

Some polyominoes are “more awkward” to fit together than others—so these typically give clusters of “lower density”:

So far, we’ve always considered adding new polyominoes so that they “attach” on any “exposed edge”. And the result is that we can often get long “tendrils” in our clusters of polyominoes. But an alternative strategy is to try to add polyominoes as “compactly” as possible, in effect by adding successive “rings” of polyominoes (with “older” rings here colored bluer):

In general there are many ways to add these rings, and eventually one will often get stuck, unable to add polyominoes without leaving holes—as indicated by the red annotation here:

Of course, that doesn’t mean that if one was prepared to “backtrack and try again”, one couldn’t find a way to extend the cluster without leaving holes. And indeed for the polyomino we’re looking at here it’s perfectly possible to end up with “perfect tilings” in which no holes are left:

In general, we could consider all sorts of different strategies for growing clusters by adding polyominoes “in parallel”—just like in our discussion of causal graphs above. And if we add polyominoes “a ring at a time” we’re effectively making a particular choice of foliation—in which the successive “ring states” turn out to be directly analogous to what we call “generational states” in our Physics Project.

If we allow holes (and don’t impose other constraints), then it’s inevitable that—just with ordinary, sequential aggregation—we can grow an unboundedly large cluster of polyominoes of any shape, just by always attaching one edge of each new polyomino to an “exposed” edge of the existing cluster. But if we don’t allow holes, it’s a different story—and we’re talking about a traditional tiling problem, where there are ultimately cases where tiling is impossible, and only limited-size clusters can be generated.

As it happens, all polyominoes with 6 or fewer cells do allow infinite tilings. But with 7 cells the following do not:

It’s perfectly possible to grow random clusters with these polyominoes—but they tend not to be at all compact, and to have lots of holes and tendrils:

So what happens if we try to grow clusters in rings? Here are all the possible ways to “surround” the first of these polyominoes with a “single ring”:

And it turns out in every single case, there are edges (indicated here in red) where the cluster can’t be extended—thereby demonstrating that no infinite tiling is possible with this particular polyomino.

By the way, much like we saw with constrained growth on a grid, it’s possible to have “tiling regions” that can extend only a certain limited distance, then always get stuck.

It’s worth mentioning that we’ve considered here the case of single polyominoes. It’s also possible to consider being able to add a whole set of possible polyominoes—“Tetris style”.

Nonperiodic Tilings

We’ve looked at polyominoes—and shapes like pentagons—that don’t tile the plane. But what about shapes that can tile the plane, but only nonperiodically? As an example, let’s consider Penrose tiles. The basic shapes of these tiles are

though there are additional matching conditions (implicitly indicated by the arrows on each tile), which can be enforced either by putting notches in the tiles or by decorating the tiles:

Starting with these individual tiles, we can build up a multiway system by attaching tiles wherever the matching rules are satisfied (note that all edges of both tiles are the same length):

So how can we tell that these tiles can form a nonperiodic tiling? One approach is to generate a multiway system in which at successive steps we surround clusters with rings in all possible ways:

Continuing for another step we get:

Notice that here some of the branches have died out. But the question is what branches exist that will continue forever, and thus lead to an infinite tiling? To answer this we have to do a bit of analysis.

The first step is to see what possible “rings” can have formed around the original tile. And we can read all of these off from the multiway graph:

But now it’s convenient to look not at possible rings around a tile, but instead at possible configurations of tiles that can surround a single vertex. There turns out to be the following limited set:

The last two of these configurations have the feature that they can’t be extended: no tile can be added on the center of their “blue sides”. But it turns out that all the other configurations can be extended—though only to make a nested tiling, not a periodic one.

And a first indication of this is that larger copies of tiles (“supertiles”) can be drawn on top of the first three configurations we just identified, in such a way that the vertices of the supertiles coincide with vertices of the original tiles:

And now we can use this to construct rules for a substitution system:

Applying this substitution system builds up a nested tiling that can be continued forever:

But is such a nested tiling the only one that is possible with our original tiles? We can prove that it is by showing that every tile in every possible configuration occurs within a supertile. We can pull out possible configurations from the multiway system—and then in each case it turns out that we can indeed find a supertile in which the original tile occurs:

And what this all means is that the only infinite paths that can occur in the multiway system are ones that correspond to nested tilings; all other paths must eventually die out.
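For readers who want to experiment with nested tilings of this kind, here is a minimal sketch of a substitution system for rhomb-type Penrose tiles. It uses the standard construction in which each rhomb is split into two mirror-image triangles (Robinson triangles) that are then subdivided. This is a stand-in, not necessarily the substitution rules pictured above, and the labels `THIN` and `THICK` and the function names are assumptions of the example. Points are stored as complex numbers, and each triangle is `(kind, a, b, c)` with apex `a`.

```python
import cmath
import math

PHI = (1 + math.sqrt(5)) / 2  # golden ratio
THIN, THICK = 0, 1            # half of a thin rhomb, half of a thick rhomb

def subdivide(triangles):
    """Replace every half-rhomb by smaller half-rhombs (one substitution step)."""
    result = []
    for kind, a, b, c in triangles:
        if kind == THIN:
            p = a + (b - a) / PHI
            result += [(THIN, c, p, b), (THICK, p, c, a)]
        else:
            q = b + (a - b) / PHI
            r = b + (c - b) / PHI
            result += [(THICK, r, c, a), (THICK, q, r, b), (THIN, r, q, a)]
    return result

def wheel():
    """Initial condition: ten thin half-rhombs around the origin."""
    triangles = []
    for i in range(10):
        b = cmath.rect(1, (2 * i - 1) * math.pi / 10)
        c = cmath.rect(1, (2 * i + 1) * math.pi / 10)
        if i % 2 == 0:
            b, c = c, b  # mirror every second triangle so halves pair up
        triangles.append((THIN, 0j, b, c))
    return triangles

def nested_tiling(steps):
    """Apply the substitution `steps` times to the initial wheel."""
    triangles = wheel()
    for _ in range(steps):
        triangles = subdivide(triangles)
    return triangles

tiling = nested_tiling(4)
n_thin = sum(1 for kind, *_ in tiling if kind == THIN)
n_thick = sum(1 for kind, *_ in tiling if kind == THICK)
```

Each step shrinks every tile edge by a factor of the golden ratio, and the ratio of thick to thin pieces approaches the golden ratio as the substitution is repeated. The step can be applied indefinitely, which is what makes the nested tiling continue forever.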

The Penrose tiling involves two distinct tiles. But in 2022 it was discovered that—if one’s allowed to flip the tile over—just a single (“hat”) tile is sufficient to force a nonperiodic tiling:

The full multiway graph obtained from this tile (and its flip-over) is complicated, but many paths in it lead (at least eventually) to “dead ends” which cannot be further extended. Thus, for example, the following configurations—which appear early in the multiway graph—all have the property that they can’t occur in an infinite tiling:

In the first case here, we can successively add a few rings of tiles:

But after 7 rings, there is a “contradiction” on the boundary, and no further growth is possible (as indicated by the red annotations):

Having eliminated cases that always lead to “dead ends” the resulting simplified multiway graph effectively includes all joins between hat tiles that can ultimately lead to surviving configurations:

Once again we can define a supertile transformation

where the region outlined in red can potentially overlap another supertile. Now we can construct a multiway graph for the supertile (in its “bitten out” and full variant)

and can see that there is a (one-to-one) map between the multiway graph for the original tiles and the one for these supertiles:

And now from this we can tell that there can be arbitrarily large nested tilings using the hat tile:

Personal Notes

Tucked away on page 979 of my 2002 book A New Kind of Science is a note (written in 1995) on “Generalized aggregation models”:

And in many ways the current piece is a three-decade-later followup to that note—using a new approach based on multiway systems.

In A New Kind of Science I did discuss multiway systems (both abstractly, and in connection with fundamental physics). But what I said about aggregation was mostly in a section called “The Phenomenon of Continuity” which discussed how randomness could on a large scale lead to apparent continuity. That section began by talking about things like random walks, but went on to discuss the same minimal (“Eden model”) example of “random aggregation” that I give here. And then, in an attempt to “spruce up” my discussion of aggregation, I started looking at “aggregation with constraints”. In the main text of the book I gave just two examples:

But then for the footnote I studied a wider range of constraints (enumerating them much as I had cellular automata)—and noticed the surprising phenomenon that with some constraints the aggregation process could end up getting stuck, and not being able to continue.

For years I carried around the idea of investigating that phenomenon further. And it was often on my list as a possible project for a student to explore at the Wolfram Summer School. Occasionally it was picked, and progress was made in various directions. And then a few years ago, with our Physics Project in the offing, the idea arose of investigating it using multiway systems—and there were Summer School projects that made progress on this. Meanwhile, as our Physics Project progressed, our tools for working with multiway systems greatly improved—ultimately making possible what we’ve done here.

By the way, back in the 1990s, one of the many topics I studied for A New Kind of Science was tilings. And in an effort to determine what tilings were possible, I investigated what amounts to aggregation under tiling constraints—which is in fact even a generalization of what I consider here:

Thanks

First and foremost, I’d like to thank Brad Klee for extensive help with this piece, as well as Nik Murzin for additional help. (Thanks also to Catherine Wolfram, Christopher Wolfram and Ed Pegg for specific pointers.) I’d like to thank various Wolfram Summer School students (and their mentors) who’ve worked on aggregation systems and their multiway interpretation in recent years: Kabir Khanna 2019 (mentors: Christopher Wolfram & Jonathan Gorard), Lina M. Ruiz 2021 (mentors: Jesse Galef & Xerxes Arsiwalla), Pietro Pepe 2023 (mentor: Bob Nachbar). (Also related are the Summer School projects on tilings by Bowen Ping 2023 and Johannes Martin 2023.)

See Also

Games and Puzzles as Multicomputational Systems

The Physicalization of Metamathematics and Its Implications for the Foundations of Mathematics

Multicomputation with Numbers: The Case of Simple Multiway Systems

Multicomputation: A Fourth Paradigm for Theoretical Science

Combinators: A Centennial View—Updating Schemes and Multiway Systems